I'm not sure whether this is a good or well-defined question, but I would really appreciate some suggestions. Any suggestion is valuable to me. Thank you very much! The question is:

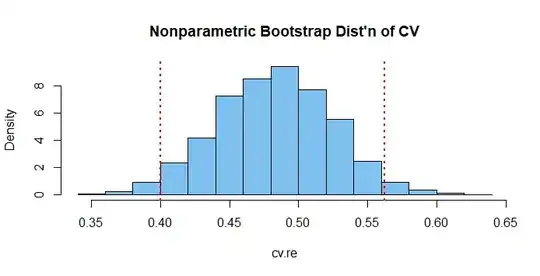

A research subject has many attributes, which can be quantified as variables such as X, Y, and Z. These variables do not necessarily share the same units, and each has its own distribution. I want to know which variable is the most consistent, or invariant, for the research subject.

I've considered several methods to do that, for example:

(1) Randomly draw m values with replacement and repeat this n times (bootstrap), which gives n resampled data sets. Each resampled set has its own empirical distribution, so compute the pairwise distances between the n distributions. The smaller the distances, the more invariant the variable.

(2) Train an LDA classifier to predict the subclass of my research subject from a single variable. The worse a variable performs at classification, the more invariant it is.
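To make (1) and (2) concrete, here is a minimal Python sketch using only the standard library. Several things in it are my own assumptions and not settled choices: I z-score each variable so that different units become comparable, I use the 1-D Wasserstein distance as the distance between distributions, I take LDA to mean linear discriminant analysis with a shared within-class variance, and I score the classifier on its own training data. The function names and the toy data are hypothetical. In practice, a library class such as scikit-learn's `LinearDiscriminantAnalysis` would probably replace the hand-written classifier.

```python
import math
import random
import statistics


def standardize(values):
    """Z-score the values so variables with different units are comparable (assumption)."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return [(v - mean) / sd for v in values]


def bootstrap_spread(values, m, n, rng):
    """Method (1): mean pairwise 1-D Wasserstein distance between n resamples of size m."""
    z = standardize(values)
    # Each resample is drawn with replacement and sorted once.
    samples = [sorted(rng.choices(z, k=m)) for _ in range(n)]
    total, pairs = 0.0, 0
    for i in range(n):
        for j in range(i + 1, n):
            # For two equal-sized samples, the 1-D Wasserstein-1 distance is the
            # mean absolute difference between their sorted values.
            total += statistics.fmean(
                abs(a - b) for a, b in zip(samples[i], samples[j])
            )
            pairs += 1
    return total / pairs


def lda_accuracy(values, labels):
    """Method (2): training accuracy of a one-variable linear discriminant classifier."""
    classes = sorted(set(labels))
    groups = {c: [v for v, l in zip(values, labels) if l == c] for c in classes}
    means = {c: statistics.fmean(g) for c, g in groups.items()}
    # Pooled within-class variance: the shared-variance assumption behind LDA.
    within = sum((v - means[l]) ** 2 for v, l in zip(values, labels))
    pooled_var = within / (len(values) - len(classes))

    def score(x, c):
        # Linear discriminant function for class c, using the empirical prior.
        prior = len(groups[c]) / len(values)
        return (x * means[c] / pooled_var
                - means[c] ** 2 / (2 * pooled_var)
                + math.log(prior))

    predicted = [max(classes, key=lambda c: score(x, c)) for x in values]
    return sum(p == l for p, l in zip(predicted, labels)) / len(values)


# Hypothetical toy data: X differs between subclasses "a" and "b", Y does not.
rng = random.Random(0)
labels = ["a"] * 200 + ["b"] * 200
x = [rng.gauss(0, 1) for _ in range(200)] + [rng.gauss(3, 1) for _ in range(200)]
y = [rng.gauss(5, 2) for _ in range(400)]

for name, var in (("X", x), ("Y", y)):
    print(name,
          "bootstrap spread:", round(bootstrap_spread(var, m=50, n=30, rng=rng), 3),
          "LDA accuracy:", round(lda_accuracy(var, labels), 3))
```

Two caveats about this sketch. After z-scoring, location and scale are removed, so the bootstrap spread in (1) mainly reflects the resample size m and the shape of the distribution. The accuracy in (2) is measured on the training data, so it is optimistic; a held-out split would give a fairer number.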

I believe there must be a more elegant method in statistics, but my statistics background is weak, so I would really appreciate any help!