Given the issues of normality testing (Is normality testing 'essentially useless'?), in particular for large sample sizes, I was wondering if there are any feasible "approximate" normality tests.

Typically, in Null Hypothesis testing, we test a hypothesis of the form $X = Y$, i.e. one tests if, given all the observed data, is there any credence that two distributions differ.

$$ (1) \qquad \boxed{H_0\colon X=Y} $$

This has the effect that in practice tests for normality almost always fail for large sample sizes. So, instead, I am interested in testing a Null Hypothesis of the form

$$ (2) \qquad \boxed{H_0\colon d(X, Y) < ε}$$

where $d$ is a suitable statistical divergence such as the Kullback–Leibler divergence or Wasserstein metric.

In particular, I am interested in automatically testing whether a large sample $X = (x_i)_{i=1:N}$ in $\mathbb R^d$ comes from a distribution close to a standard normal distribution. Here, the allowed closeness is defined by the design parameter $ε$.For example, we may need this to take into account numerical errors and other noise (PRNGs not producing perfect normal number etc).

Question: What are some numerically feasible test I could use?

Background: Creating a unit test for neural network architectures at initialization.

There is good empirical and theoretical evidence, that, in order to have stable training for deep neural networks, it is necessary that these networks satisfy a normalization condition at initialization; that is, we want that if $x∼(0,1)$ then $f(x, θ_0)∼(0,1)$, and this should hold layer- or block-wise. My goal is to build an automated probabilistic unit-test, that takes as input some neural network architecture and tests whether this condition holds approximately. Towards this goal I want to implement a function

def test_approx_normal(x: list[float], tol: float) -> bool:

that returns False if and only if the Null Hypothesis, i.e. that the sample comes from some distribution that is close to a standard normal distribution, is rejected, and True otherwise.

The standard Kolmogorov–Smirnov test does not seem suitable for this task, at least the following example shows $p$-values all over the place.

import numpy as np

from scipy.stats import kstest, norm as normal

from scipy.stats import norm as normal

for n in (10, 100, 1000, 10_000):

x = normal.rvs(size=(n,))

A = normal.rvs(size=(n, n)) / np.sqrt(n)

y = A @ x

print(n, kstest(x, normal.cdf), kstest(y, normal.cdf), sep="\n")

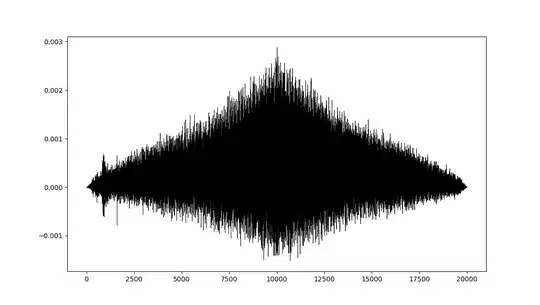

This is not surprising because this test is not "numerically robust" as it tests for equality between distributions (1) instead of approximate equality (2). However, we clearly see that the distribution follows a standard normal approximately: