I am training a deep neural network with TensorFlow in Python. I have a set of inputs and outputs and want to train the network on a regression problem. I have built the network (the code is shown below), but after a few epochs the error becomes almost constant and the network seems to stop learning. I have tried several things, such as:

1. Increasing or decreasing the number of layers
2. Increasing or decreasing the number of neurons
3. Different optimizers
4. Different learning rates
5. Different activation functions
6. Normalizing the inputs and outputs
7. Increasing the number of epochs

and so on, but none of these solved the issue. I would appreciate any help with this.

Here is my Python code:

```python
import pandas as pd
import tensorflow as tf
from tensorflow import keras
from sklearn.preprocessing import MinMaxScaler
import matplotlib.pyplot as plt
import numpy as np

# Load the raw inputs and outputs as NumPy arrays.
input = pd.read_csv("./Data/All_Inputs.txt", delimiter = ",").to_numpy()
output = pd.read_csv("./Data/All_Outputs.txt", delimiter = ",").to_numpy()

# Split by row into train / test / validation; the targets are output columns 12..1011.
x_train = input[0:48000, :]
y_train = output[0:48000, 12:1012]
x_test = input[48000:60000, :]
y_test = output[48000:60000, 12:1012]
x_valid = input[60000:72000, :]
y_valid = output[60000:72000, 12:1012]

# Scale the inputs to [0, 1]; the scaler is fitted on the training split only.
scalerInput = MinMaxScaler()
x_train = scalerInput.fit_transform(x_train)
x_test = scalerInput.transform(x_test)
x_valid = scalerInput.transform(x_valid)

# Scale the outputs the same way.
scalerOutput = MinMaxScaler()
y_train = scalerOutput.fit_transform(y_train)
y_test = scalerOutput.transform(y_test)
y_valid = scalerOutput.transform(y_valid)

# Four fully connected layers, all with linear activation.
model = keras.models.Sequential()
model.add(keras.layers.Dense(800, activation = "linear", input_shape = x_train.shape[1:]))
model.add(keras.layers.Dense(900, activation = "linear"))
model.add(keras.layers.Dense(900, activation = "linear"))
model.add(keras.layers.Dense(1000, activation = "linear"))

opt = keras.optimizers.SGD(learning_rate = 0.01, momentum = 0.9)
model.compile(loss = "mse", optimizer = opt, metrics=['mae'])

history = model.fit(x_train, y_train, epochs = 20, validation_data=(x_valid, y_valid))

# evaluate() returns [loss, mae] here because metrics=['mae'] is set.
mse_test = model.evaluate(x_test, y_test)
print("\n\nTest set mean squared error is = ", mse_test)

# Plot the loss and MAE curves for the training and validation sets.
pd.DataFrame(history.history).plot(figsize=(8, 5))
plt.grid(True)
plt.show()
```

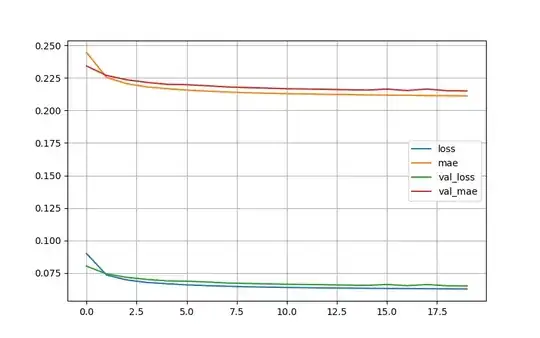

The plot above shows the training history.
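A note on the architecture in the code: every `Dense` layer uses `activation = "linear"`, and a stack of linear layers is mathematically equivalent to a single affine map, however many layers or neurons it has. The NumPy sketch below illustrates this with small made-up layer sizes and random weights (both are assumptions for illustration, not values from the real model).

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical small sizes standing in for input -> 800 -> 900 -> 900 -> 1000.
sizes = [5, 8, 9, 9, 10]

# Random weights and biases for four layers with linear activation.
weights = [rng.normal(size=(m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [rng.normal(size=n) for n in sizes[1:]]


def stacked_linear(x):
    """Apply the four linear layers one after another."""
    for W, b in zip(weights, biases):
        x = x @ W + b
    return x


# Collapse the whole stack into one weight matrix and one bias vector.
W_total = np.eye(sizes[0])
b_total = np.zeros(sizes[0])
for W, b in zip(weights, biases):
    W_total = W_total @ W
    b_total = b_total @ W + b

x = rng.normal(size=(3, sizes[0]))
max_diff = np.max(np.abs(stacked_linear(x) - (x @ W_total + b_total)))
print("max difference between stacked and collapsed model:", max_diff)
```

The difference is at the level of floating-point rounding, so with purely linear activations the network can represent nothing beyond a linear regression from inputs to outputs.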

I also tried more epochs, such as 100 or 300, but the results were not much different: the curves in the history stayed nearly flat.

How can I reduce the training error further?
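One way to put the plateau in context is to compare it with an ordinary least-squares fit on the same scaled data, which is the best any purely linear model can achieve on the training set in terms of MSE. The sketch below is a minimal example under that assumption; `linear_baseline_mse` is a hypothetical helper name, and the demo data is synthetic rather than taken from the real files.

```python
import numpy as np


def linear_baseline_mse(x_train, y_train, x_eval, y_eval):
    """Fit y ~ [x, 1] @ coef by least squares; return the MSE on the eval split."""
    # Append a column of ones so the fit includes a bias term.
    a_train = np.hstack([x_train, np.ones((x_train.shape[0], 1))])
    a_eval = np.hstack([x_eval, np.ones((x_eval.shape[0], 1))])
    coef, *_ = np.linalg.lstsq(a_train, y_train, rcond=None)
    residual = a_eval @ coef - y_eval
    # Mean over all samples and all output columns, like the Keras "mse" loss.
    return float(np.mean(residual ** 2))


# Synthetic stand-in data with an exactly linear relationship (illustration only).
rng = np.random.default_rng(0)
x_demo = rng.uniform(size=(200, 4))
true_w = rng.normal(size=(4, 3))
y_demo = x_demo @ true_w + 0.5

demo_mse = linear_baseline_mse(x_demo[:150], y_demo[:150], x_demo[150:], y_demo[150:])
print("baseline MSE on exactly linear demo data:", demo_mse)
```

With the real arrays, the call would be `linear_baseline_mse(x_train, y_train, x_valid, y_valid)`. If the network's flat validation loss is close to this number, the plateau reflects the limit of a linear fit rather than an optimization problem.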