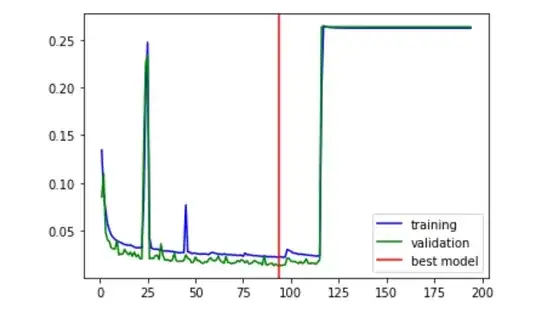

I'm struggling with my model (below): despite some hyperparameter tuning, I always end up with a sudden rise of the loss function followed by an "infinite" plateau.

My hypotheses were:

- Learning rate or local minimum issue? I tried several learning rates (1e-3, 1e-4).
- Optimizer issue? I tried SGD, for example.
- Model too complex? I removed some layers or neurons.
- Metric issue? I tried MAE.
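To make such comparisons systematic, the variations can be enumerated so that each run differs from the others in a known way. This is only a minimal sketch: the helper name `build_grid` is hypothetical, the optimizer and loss names are plain strings rather than Keras objects, and it assumes MAE was tried as the loss rather than as a monitoring metric.

```python
from itertools import product

# Values taken from the hypotheses above; the full grid is only an illustration.
learning_rates = [1e-3, 1e-4]
optimizers = ["adam", "sgd"]
losses = ["mse", "mae"]  # assumption: MAE used as the loss, not just as a metric


def build_grid(learning_rates, optimizers, losses):
    """Return one dict per (learning rate, optimizer, loss) combination."""
    return [
        {"learning_rate": lr, "optimizer": opt, "loss": loss}
        for lr, opt, loss in product(learning_rates, optimizers, losses)
    ]


grid = build_grid(learning_rates, optimizers, losses)
for cfg in grid:
    # Each configuration would be used to rebuild and recompile the model
    # from scratch before training, so that runs stay comparable.
    print(cfg)
```

Rebuilding the model for every configuration matters here: reusing already-trained weights would mix the effect of one setting with the next.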

Among these hypotheses, and perhaps others, which one seems the most likely? I looked for different loss-curve patterns but did not find this one.

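    # Imports (assuming tf.keras)
    from tensorflow.keras.layers import Input, LSTM, Dense, Dropout, Flatten, Concatenate
    from tensorflow.keras.models import Model
    from tensorflow.keras.optimizers import Adam

    # Two inputs: a time series and a vector of static features
    timeseries = Input(shape=(1536, 2), name='timeseries')  # 1536 time steps, 2 features
    features = Input(shape=(22,), name='features')  # 22 features

    # Time-series branch
    y = LSTM(256)(timeseries)  # 256
    y = Dropout(0.20)(y)
    y = Flatten()(y)

    # Static-features branch
    x = Dense(28, activation='relu')(features)  # 14
    x = Dense(14, activation='relu')(x)  # 14
    x = Dense(14, activation='relu')(x)  # 7

    # Merge both branches
    # z = concatenate([x, y])
    z = Concatenate(axis=1)([x, y])
    z = Dense(64, activation='relu')(z)  # 64
    z = Dense(64, activation='relu')(z)  # 64
    # Note: the next two layers extend x after the merge, so they do not feed the outputs
    x = Dense(32, activation='relu')(x)  # 32
    x = Dense(16, activation='relu')(x)  # 16
    z = Dropout(0.40)(z)

    # Three non-negative regression outputs
    outputP = Dense(1, activation='softplus')(z)
    outputR = Dense(1, activation='softplus')(z)
    outputC = Dense(1, activation='softplus')(z)

    modelLSTM256NN = Model(inputs=[timeseries, features], outputs=[outputP, outputR, outputC])

    opt = Adam(learning_rate=1e-3)  # 1e-3
    modelLSTM256NN.compile(optimizer=opt, loss='mse')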
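To pin down the epoch at which the loss jumps (the sudden rise in the plot above), the per-epoch loss values can be scanned for the first value that is much larger than the best loss seen so far. This is a rough sketch, not part of the model code: the function name `find_loss_jump`, the threshold `factor=2.0`, and the example loss values are all made up for illustration.

```python
def find_loss_jump(losses, factor=2.0):
    """Return the index of the first epoch whose loss exceeds `factor` times
    the lowest loss seen so far, or None if there is no such epoch."""
    best = float("inf")
    for epoch, loss in enumerate(losses):
        if best < float("inf") and loss > factor * best:
            return epoch
        best = min(best, loss)
    return None


# Made-up values that mimic the curve: decrease, sudden rise, then plateau.
example_losses = [1.00, 0.60, 0.40, 0.35, 2.50, 2.50, 2.50]
jump_epoch = find_loss_jump(example_losses)
print(jump_epoch)
```

With Keras, the list to pass in would typically be `history.history['loss']`, where `history` is the object returned by `fit`. Knowing the epoch makes it possible to inspect the weights, gradients, or batches just before the jump.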

Thanks!!