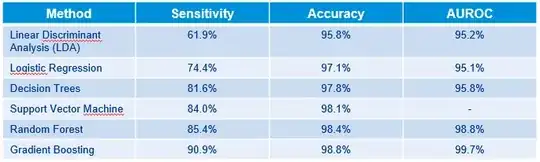

The algorithms have been applied to a dataset in which the outcome is rare: it occurs 10% of the time (binary: 0 = 90%, 1 = 10%). The response is whether a client defaults or not.

I struggle to believe these values. Sensitivity increases across the algorithms, which makes total sense to me, while accuracy stays consistently high throughout (since the outcome is rare).
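Why accuracy stays high while sensitivity moves can be checked with plain arithmetic. The sketch below is illustrative only and assumes 1,000 hypothetical clients with the same 90%/10% split. The "models" are made-up prediction vectors, not the algorithms from the table.

```python
# Hypothetical labels: 900 non-defaults (0) followed by 100 defaults (1).
y_true = [0] * 900 + [1] * 100

def accuracy(y_true, y_pred):
    """Share of all clients classified correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def sensitivity(y_true, y_pred):
    """Hit rate: share of actual defaults that are flagged as defaults."""
    flagged = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(flagged) / len(flagged)

# Trivial "model" that never predicts a default.
always_zero = [0] * len(y_true)

# Hypothetical model: 50 false alarms among the 900 non-defaults,
# and 60 of the 100 defaults caught.
some_model = [1] * 50 + [0] * 850 + [1] * 60 + [0] * 40

print(accuracy(y_true, always_zero), sensitivity(y_true, always_zero))  # 0.9 0.0
print(accuracy(y_true, some_model), sensitivity(y_true, some_model))    # 0.91 0.6
```

Even a model that never flags anyone reaches 90% accuracy. Going from catching no defaults to catching 60% of them moves accuracy by only one percentage point.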

But shouldn't the AUROC be correlated with sensitivity, since it is built from the hit rate and the false alarm rate? And what should we expect for the accuracy ratio (Gini)?
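One way to see how the two metrics relate is a small sketch on hypothetical scores. It computes AUROC in its rank form: the probability that a randomly chosen default scores higher than a randomly chosen non-default, with ties counting one half. It then derives the accuracy ratio from the commonly used relationship Gini = 2 · AUROC − 1. Sensitivity, in contrast, is evaluated at a single cut-off. The toy scores and thresholds are made up for illustration and do not reproduce the table.

```python
def auroc(y_true, scores):
    """P(score of a random default > score of a random non-default); ties count 1/2."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def gini(y_true, scores):
    """Accuracy ratio via the relationship Gini = 2 * AUROC - 1."""
    return 2 * auroc(y_true, scores) - 1

def sensitivity_at(y_true, scores, threshold):
    """Hit rate when every score >= threshold is classified as a default."""
    flagged = [s >= threshold for t, s in zip(y_true, scores) if t == 1]
    return sum(flagged) / len(flagged)

# Hypothetical toy data: 8 non-defaults, 2 defaults.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
scores = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30, 0.40, 0.60, 0.35, 0.70]

print(auroc(y_true, scores), gini(y_true, scores))  # 0.875 0.75
print(sensitivity_at(y_true, scores, 0.5))          # 0.5
print(sensitivity_at(y_true, scores, 0.3))          # 1.0

# Squaring keeps the ranking, so AUROC is unchanged,
# but sensitivity at a fixed cut-off of 0.5 drops to 0.
squared = [s ** 2 for s in scores]
print(auroc(y_true, squared), sensitivity_at(y_true, squared, 0.5))  # 0.875 0.0
```

In this sketch AUROC (and therefore Gini) depends only on how the scores rank defaults against non-defaults. Sensitivity depends on where the cut-off is placed. So the same AUROC can coexist with very different sensitivities.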

In credit risk, AUROC is one of the most important benchmarks, and I am trying to figure out why. Looking at this table, sensitivity would make much more sense to me.