I am trying to build a stock trend prediction model (the target variable is 1 if the price goes up the next day and 0 if it goes down). The dataset consists of 2724 samples and 60 features. The data was split into 80% training and 20% test, with the target variable balanced in both the training and test sets.

I tried predicting with some SVMs and Random Forests, and the best performing model had the scores as follows:

Precision = 0.5305676855895196

Recall = 0.8678571428571429

Accuracy = 0.5367647058823529

Negative Precision = 0.5697674418604651

Specificity = 0.1856060606060606

[[ 49 215]

[ 37 243]]

This is the confusion matrix. This performance was achieved with an RBF kernel SVM with C = 100 and gamma = 1.
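The reported scores can be re-derived from the confusion matrix alone. The sketch below assumes the scikit-learn layout (rows are true classes 0 and 1, columns are predicted classes 0 and 1), which is consistent with the figures quoted above. The helper name `binary_metrics` and the extra `predicted_positive_rate` entry are illustrative additions, not part of the original experiment.

```python
# Confusion matrix from the post, assumed to be in scikit-learn layout:
# rows = true class (0, 1), columns = predicted class (0, 1).
cm = [[49, 215],
      [37, 243]]


def binary_metrics(cm):
    """Derive the usual binary-classification scores from a 2x2 matrix."""
    (tn, fp), (fn, tp) = cm
    total = tn + fp + fn + tp
    return {
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "accuracy": (tp + tn) / total,
        "negative_precision": tn / (tn + fn),
        "specificity": tn / (tn + fp),
        # Share of test samples the model labels as class 1 (illustrative extra).
        "predicted_positive_rate": (tp + fp) / total,
    }


metrics = binary_metrics(cm)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

The `predicted_positive_rate` entry puts a number on how lopsided the predictions are: it is the fraction of test samples that end up labelled as class 1.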

I would also like to add, as part of this edit, the performance of sigmoid kernel SVMs.

Sigmoid kernel, C = 1

[[120 144]

[134 147]]

Sigmoid kernel, C = 10

[[123 141]

[134 147]]

Sigmoid kernel, C = 100

[[123 141]

[135 146]]

The reason I include these confusion matrices is to show that the sigmoid kernel is at least trying to classify some samples as class 0.

This performance is really bad for a balanced dataset, and it is only one part of the problem. The models (SVMs and RFs) are not just performing poorly; they also do not appear to be learning anything. As the recall and specificity scores show, the models classify most cases as 1 and very few as 0.

What could be the reasons behind this? Since the dataset is balanced, I don't understand why the models are not learning at least something. There are 2180 training samples, of which 1119 are up days (51.33%) and 1061 are down days (48.67%). The test set has 545 samples, of which 294 are up days (53.94%) and 251 are down days (46.06%). I think this is quite balanced for a real-world scenario.
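For reference, the class shares quoted above also fix the accuracy of a trivial classifier that always predicts the majority class. This is a minimal sketch that uses only the counts stated in the post; `class_shares` and `majority_baseline` are hypothetical names introduced for illustration.

```python
def class_shares(n_up, n_down):
    """Return the fraction of up days and down days in a split."""
    total = n_up + n_down
    return n_up / total, n_down / total


# Counts as stated in the post.
train_up, train_down = class_shares(1119, 1061)
test_up, test_down = class_shares(294, 251)

# Accuracy of always predicting the more frequent class on the test set.
majority_baseline = max(test_up, test_down)

print(f"train: up {train_up:.2%}, down {train_down:.2%}")
print(f"test:  up {test_up:.2%}, down {test_down:.2%}")
print(f"always-majority test accuracy: {majority_baseline:.2%}")
```

Comparing a model's test accuracy against `majority_baseline` is one way to judge whether it has picked up anything beyond the class frequencies.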

I tried different kernels and various values of C and gamma for the SVMs, and varied n_estimators for the RFs, but I see the same results: poor accuracy, with the majority of the samples classified as class 1.
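A search of this kind could be sketched with scikit-learn as below. Several parts are assumptions rather than details from the post: the random placeholder data (smaller than the real 2180 x 60 training set so it runs quickly), the `StandardScaler` step, the time-ordered `TimeSeriesSplit` folds, the specific grid values, and the `balanced_accuracy` scoring. Treat it as one possible setup, not a description of what was actually run.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, TimeSeriesSplit
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Placeholder data (assumption): random features and labels standing in
# for the real 60-feature training set, which is not available here.
rng = np.random.default_rng(0)
X_train = rng.normal(size=(300, 60))
y_train = rng.integers(0, 2, size=300)

# Scaling inside the pipeline is an assumption; it keeps the scaler from
# seeing validation folds during the search.
pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("svm", SVC()),
])

# Grid values are illustrative; C = 100 and gamma = 1 mirror the post.
param_grid = {
    "svm__kernel": ["rbf", "sigmoid"],
    "svm__C": [1, 10, 100],
    "svm__gamma": ["scale", 0.01, 1],
}

search = GridSearchCV(
    pipeline,
    param_grid,
    cv=TimeSeriesSplit(n_splits=3),   # folds respect time order (assumption)
    scoring="balanced_accuracy",      # penalises one-class predictions
)
search.fit(X_train, y_train)

print(search.best_params_)
print(f"best CV balanced accuracy: {search.best_score_:.3f}")
```

With random placeholder labels the best score should sit near 0.5; the point is only the structure of the search, which can be rerun with the real features and labels in place of `X_train` and `y_train`.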

Am I right to arrive at either or both of the following conclusions?

- This is the best that a simple model can perform

- The problem lies in the dataset and not in the model

As Dave pointed out in the comments, this is not necessarily bad performance. The reason I think it can be improved a lot is that I came across a few papers in which people achieved results of over 65% in one-day-ahead trend prediction.

Their models were more complex (SVM with a quasi-linear kernel, SVM with GA for feature selection, MLP with GA for feature selection), but I wonder how much of a performance difference these complexities should make.

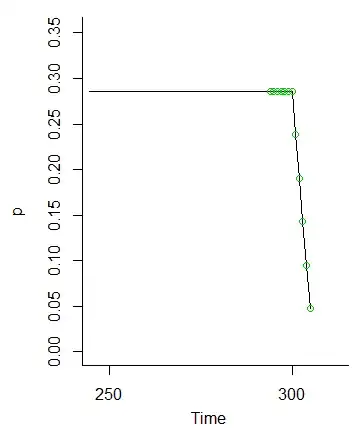

As nxglogic suggested in the comments following his answer, I tried swapping the labels, i.e. up days are now 0s and down days are now 1s. Now the model classifies the majority of samples as 0s, whereas before it classified the majority of samples as 1s.

I do not understand why this is happening.

If this has something to do with the feature engineering part and requires domain knowledge, then there can always be improvements. What I would like to know is whether I am failing to experiment properly using these baseline models.