I am trying to model claim counts over for a given region. The data is very sparse. I am using the BESAG model from R INLA package. I am having a tough time to model the data. I am able to reproduce my issue (to some extent) by some synthetic data generated as follows:

- Create a grid of 50x50 cells.

- Use a variogram to simulate some spatial effect (copied the R snippet from here)

- Create a artificial population residing in one of the 2500 cells.

- For each individual create a Poisson count response with mean = exponential of simulated spatial effect. The mean is artificially kept low so that I can have sparse data.

- To this synthetic data, fit Besag model (CAR model) from INLA and GAM.

- Plot the estimates on the map.

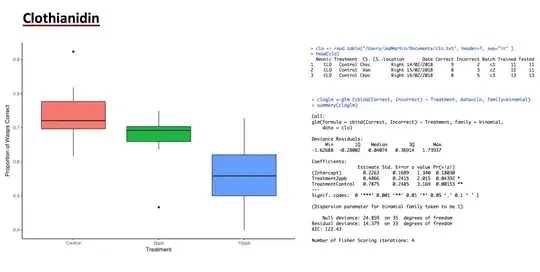

Here is my issue, as captured in the image below, the BESAG model is really showing estimates to be near 1. GAM works somewhat ok but it seems to over smooth the results. I can confirm that BESAG and GAM works well when I have plenty of data. My question is that are there techniques that I can use to handle the sparsity in my data? I have also provided the R script below (its a messy and tedious but should yield the same charts as shown in the post here).

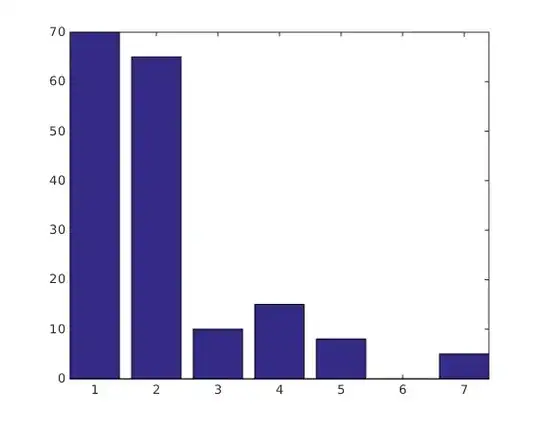

I also want to say that I had tried another approach where in I redefined the 50x50 grid into a voronoi diagram with cell centroids having > 0 counts as the midpoints. I then reassigned the population to that new shapefile and fitted both GAM and BESAG models to this new spatial dataset. That did not work either. Here is how the revised shapefile looks like:

(apologies in advance for messy and tedious script):

Load in the packages and some functions

library(raster)

library(rgdal)

library(rgeos)

library(sp)

library(spdep)

library(nimble)

library(scales)

library(ggmap)

library(viridisLite)

library(viridis)

library(MASS)

library(condMVNorm)

library(gstat)

library("gplots")

library(data.table)

library(lubridate)

library(splitstackshape)

library(INLA)

library(mgcv)

library(gridExtra)

memory.limit(3200000000000)

# Grid size n_grid x n_grid

n_grid = 50

# Number of regions to simulate

n_sim <- 4

# Synthetic data size

size_pop = 400000

#used for variogram simulation

psill <- 0.15

sample_spatial_effect <- function(n_grid,psill, seed){

xy <- expand.grid(1:n_grid, 1:n_grid)

names(xy) <- c("x","y")

# define the gstat object (spatial model)

g.dummy <- gstat(formula=z~1, locations=~x+y, dummy=T,

beta=1, model=vgm(psill=0.15,model="Exp",range=25), nmax=20)

set.seed(seed)

# make four simulations based on the stat object

yy <- predict(g.dummy, newdata=xy, nsim=4)

gridded(yy) = ~x+y

return(yy)

}

plot_spatial_effect <- function(p,attribute, title){

# p@data$id = row.names(p_1@data)

newp <- fortify(p, region = "id")

newdf_1 <- merge(newp, p@data, by = "id")

Myplot <- ggplot() +

geom_polygon(data = newdf_1, aes_string(fill = attribute,

x = "long",

y = "lat",

group = "group")) +

theme_minimal() + coord_map() + ggtitle(title) + theme(plot.title = element_text(hjust =0.5))

NicerPlot <- Myplot + scale_fill_viridis(option = "magma", direction = -1)

return(NicerPlot)

}

Simulate four maps and I decided to choose simulation 2 from the results below

The code for simulating effects is as below

# Simulate some spatial effect over a grid of 50x50

yy <- sample_spatial_effect(n_grid,psill, 800000)

yy_exp = yy

yy_exp@data = exp(yy_exp@data)

# show all four simulations but choose simulation 2 for generating data

spplot(yy_exp)

# Convert to spdf, create id (used later for joins)

p_1 <- as(yy, "SpatialPolygonsDataFrame")

p_1@data$id = row.names(p_1@data)

# Append simulated spatial effects

newp <- fortify(p_1, region = "id")

newdf_1 <- merge(newp, p_1@data, by = "id")

Now create synthetic data

####################################################################

# Select simulation 2

sel_eff = "sim2"

spatial_effects <- as.data.table(unique(newdf_1[, c("id",sel_eff)]))

setnames(spatial_effects, old = sel_eff, new = "spatial_eff")

# Generate a sample population

sample_pop <- {}

sample_pop <- data.table("id" = sample(n_grid*n_grid, size = size_pop, replace = TRUE))

sample_pop[, c("id"):= list(paste0("g",id)),]

# Append spatial effect to each individual in the population

setkey(spatial_effects,id)

setkey(sample_pop, id)

sample_pop <- spatial_effects[sample_pop]

# Create a unique key for each record

sample_pop[, c("globalid") := list(seq_len(.N))]

# Define mean of poisson count variable as below

sample_pop[, c("mean_pois") := list(exp(spatial_eff))]

# Define and append centroids of the regions to the sampled data, will be used in GAM regression

centroids <- (sp::coordinates(p_1))

id_centroids <- row.names(centroids)

centroids <- as.data.table(centroids)

centroids$id <- id_centroids

setkey(sample_pop, id)

setkey(centroids, id)

sample_pop <- centroids[, list(id, x_coord = V1, y_coord = V2)][sample_pop]

sample_pop$weights <- 1

# Simulate some data so that overall number of counts/number of records are equal to

# the defined frequency specified by .0005

avg_freq <- mean(sample_pop$mean_pois)

req_freq = 0.002

intercept <- log(req_freq/avg_freq)

sample_pop[, c("mean_pois") := list(exp(intercept + spatial_eff))]

set.seed(3)

sample_pop$sim_count <- rpois(nrow(sample_pop), sample_pop$mean_pois)

# Compute simulated freq

sim_freq = sum(sample_pop$sim_count)/sum(sample_pop$weights)

Fit a GAM on the dataset with gaussian process smoother

# Fit a gam with x and y coordinates, use GP smoother

gam_model_latlon <- mgcv::bam(sim_count ~ s(x_coord, y_coord, bs = "gp", m = 3, k = 100)

,data = sample_pop, family=poisson(link=log),

weights = weights,discrete = TRUE)

Make GAM predictions to get the spatial effect

# Compute predictions from GAM (used for pl)

# Idea is to list out the unique x y coordinates for the cells in the grid

dummy_data <- expandRows(sample_pop[1,], count = nrow(centroids), count.is.col = FALSE)

dummy_data[, c("id", "x_coord", "y_coord") := list(centroids$id, centroids$V1, centroids$V2),]

dummy_data$gam_spatial_est <- exp(predict.gam(gam_model_latlon, newdata=dummy_data))

summ_code <- sample_pop[, list(obs_counts = sum(sim_count), fitted_counts = .N*sim_freq,

weights = sum(weights)), keyby = list(id)][, c("res") := list(obs_counts/fitted_counts),]

# Append summarized data to the shape file

shape_fulldata <- merge(x = p_1, y = summ_code, by = "id",all.x = TRUE)

Now create the neighbourhood matrix and fit BESAG model using INLA

# Create a neighbourhood list

nb <- poly2nb(shape_fulldata)

listw <- nb2listw(nb, style="W", zero.policy=TRUE)

# Compute Moran's I

moran_org <- moran.test(shape_fulldata$res,listw, alternative="greater")

# Provide neighbourhood list in INLA format and run INLA

nb2INLA("map_test.adj", nb)

g <- inla.read.graph(filename = "map_test.adj")

shape_fulldata$idareau <- 1:nrow(shape_fulldata@data)

formula_besag <- obs_counts ~ f(idareau, model = "besag", graph = g, scale.model = TRUE)

res_besag <- inla(formula_besag,family = "poisson", data = shape_fulldata@data,

E = fitted_counts, control.predictor = list(compute = TRUE), verbose = FALSE)

# Select the INLA fitted effect

shape_fulldata@data$fitted_u <- res_besag$summary.fitted.values$mean

Combine the predictions from INLA and GAM. Plot them against the actual simulated effect

# Append fitted effect from GAM done earlier

dummy_data_sp <- dummy_data[, list(id, gam_spatial_est)]

shape_fulldata <- merge(x = shape_fulldata, y = as.data.frame(dummy_data_sp), by = "id", all.x = TRUE)

# Compute the weighted means and use it scale actual and individual model's estimate.

# It makes plotting a bit easier

shape_fulldata$sim_exp = exp(shape_fulldata@data[,sel_eff])

actual_mean <- weighted.mean(shape_fulldata$sim_exp, shape_fulldata$weights)

inla_mean <- weighted.mean(shape_fulldata$fitted_u, shape_fulldata$weights)

gam_mean <- weighted.mean(shape_fulldata$gam_spatial_est, shape_fulldata$weights)

#Scale actual and predicted values

shape_fulldata$fitted_u <- shape_fulldata$fitted_u/inla_mean

shape_fulldata$sim_exp <- shape_fulldata$sim_exp/actual_mean

shape_fulldata$gam_spatial_est <- shape_fulldata$gam_spatial_est/gam_mean

# Plot the cells with count > 0 along with fitted and actual effects

shape_fulldata@data$count_ind <- ifelse(shape_fulldata@data$obs_counts > 0, 1,0)

grid.arrange(plot_spatial_effect(shape_fulldata, "count_ind", "Cells with >0 counts"),

plot_spatial_effect(shape_fulldata, "fitted_u", "INLA Estimate"),

plot_spatial_effect(shape_fulldata, "gam_spatial_est", "GAM Estimate"),

plot_spatial_effect(shape_fulldata, "sim_exp", "Actual"),

ncol = 2, nrow = 2

)

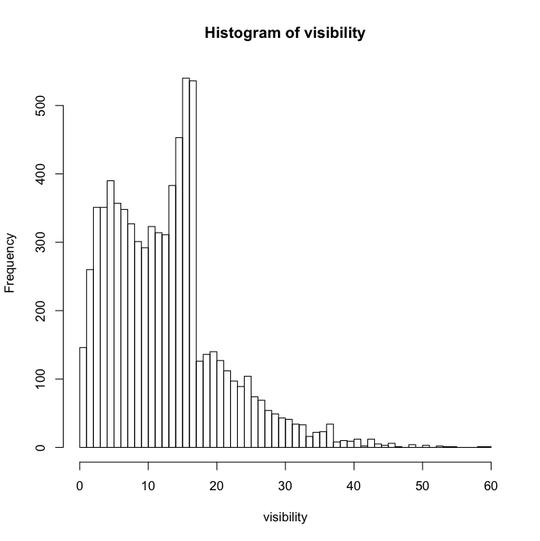

# Plot the empirical distribution of fitted vs actual effects

estimates_dt <- as.data.table(shape_fulldata@data[, c("id", "fitted_u", "gam_spatial_est", "sim_exp", "weights")])

names(estimates_dt) <- c("id", "INLA", "GAM", "Actual", "weights")

estimates_dt <- melt.data.table(data = estimates_dt, id.vars = c("id","weights"),

measure.vars = c("INLA", "GAM", "Actual"),

variable.name = "model_fits", value.name = "estimates")

plot(ggplot(estimates_dt, aes(x = estimates, colour = model_fits, weight = weights)) + geom_freqpoly( bins = 50))

I have provided the charts above (the above two plots help in creating them).