I am solving a clustering problem in which I am running the same algorithm 50 times. I know several scores aimed to select the best k, but I was wondering if there exist any score that measures the consistency of the clusters, i.e., if the clusters returned from different initialisations are the same. My problem is that there is a drawback related with the fact that the label of the clusters is arbitrary.

-

The direct answer to your question: use "external cluster validity indices". They compare two cluster partitions (not necessarily equal k) or a cluster partition with a reference ("true") partition. To read about these indices, you might want to go to my web page and open the description of macro !cluagree (see collection "Compare partitions"). – ttnphns Mar 14 '21 at 21:46

1 Answers

It's true that there is no meaningful 'cluster 1-ness' to the outputted cluster labeled '1'. Nonetheless, I think it's useful to examine a table of your clusters to see how consistent they are. You simply ignore the labels, or whether the numbers run diagonally through the table. It isn't that hard. You can see an example where I do this here: How to use both binary and continuous variables together in clustering?

This works out fine with a small number of clusterings. Your difficulty is that you will have 50. If you wanted to examine how well the clusterings match for all possible pairs within that set of 50, that's ${50 \choose 2} = \frac{50\cdot49}{2} = 1225$ tables! Instead, what you'll need to do is determine a measure of how well two clusterings match, compute it for all possible pairings of clusterings, and then summarize them somehow (determine the mean, or the range, etc.).

There are two measures that come to mind:

- Cramers' $V$, which is a measure of the strength of association / correlation between nominal variables, or

- Determine the probability that two patterns are consistent across the clusterings in that if they are clustered together in one clustering, they will also be in the other, or that they will consistently be clustered distinctly in both clusterings.

I don't know a name for the latter, and I don't know of canned functions to compute it, but it's easy to explain, understand, and code up yourself. On the other hand, Cramers' $V$ is well known and there are functions to compute it1, but people have trouble interpreting the number. (It is the proportion of the way between the least the variables could possibly be association and the most they could possibly be associated.)

To see how this plays out, we can make some data and try it (coded in R):

set.seed(4238) # this makes the example exactly reproducible

# here are 36 data from 4 true clusters in a 3-dimensional space

x1 = c(rnorm(7, mean=3.5, sd=1.2), rnorm(11, mean=2.8, sd=1.4),

rnorm(9, mean=3.9, sd=1.1), rnorm( 9, mean=3.0, sd=1.8) )

x2 = c(rnorm(7, mean=3.6, sd=0.8), rnorm(11, mean=3.8, sd=0.7),

rnorm(9, mean=2.9, sd=0.7), rnorm( 9, mean=2.2, sd=1.2) )

x3 = c(rnorm(7, mean=2.7, sd=0.7), rnorm(11, mean=3.6, sd=1.8),

rnorm(9, mean=2.5, sd=1.9), rnorm( 9, mean=2.9, sd=1.4) )

d = data.frame(x1=x1, x2=x2, x3=x3)

windows() # a scatterplot matrix

pairs(d, col=rep(c("black","red","blue","brown"), times=c(7,11,9,9)),

cex=1.5, pch=rep(1:4, times=c(7,11,9,9)))

apply(d, 2, sd) # the SDs are pretty similar, but we can still standardize them

# x1 x2 x3

# 1.514430 1.230267 1.403837

ds = as.data.frame(lapply(d, scale))

set.seed(6537) # this should make the random starts reproducible for 50 runs

out.clus = vector(length=50, mode="list")

for(i in 1:50){ out.clus[[i]] = kmeans(ds, centers=4, nstart=1) }

# looking at a table for the 1st 2 runs, we see the clusterings are the same

tab12 = table(out.clus[[1]]$cluster, out.clus[[2]]$cluster); tab12

# 1 2 3 4

# 1 0 17 0 0

# 2 0 0 8 0

# 3 3 0 0 0

# 4 0 0 0 8

library(rcompanion) # we'll use this package to get Cramers' V

cramerV(tab12, bias.correct=TRUE)

# Cramer V

# 1

consistent = function(x, y){ # I write my own function to assess consistency

x.mat = matrix(outer(x, x, FUN="=="), nrow=length(x)) # matricies of pairings

y.mat = matrix(outer(y, y, FUN="=="), nrow=length(y))

diag(x.mat) = NA # deleting self pairings

diag(y.mat) = NA

x.vec = as.vector(x.mat) # convert to vectors

y.vec = as.vector(y.mat)

mean(x.vec==y.vec, na.rm=T) # proportion matching

}

consistent(out.clus[[1]]$cluster, out.clus[[2]]$cluster)

# [1] 1

# below I make a dataset with the cluster assignments from all 50 runs

d.clus = as.data.frame(sapply(out.clus, function(l){ l$cluster }))

combs = expand.grid(1:50, 1:50) # all combinations of 1 - 50

combs = combs[-which(combs$Var1==combs$Var2),] # delete self pairings

combs = t(apply(combs, 1, sort)) # sort columns w/i each row

combs = combs[!duplicated(combs),] # drop the duplicates

run.match = vector(length=1225) # to hold the output

for(i in 1:1225){

run.match[i] = consistent(d.clus[,combs[i,1]], d.clus[,combs[i,2]])

}

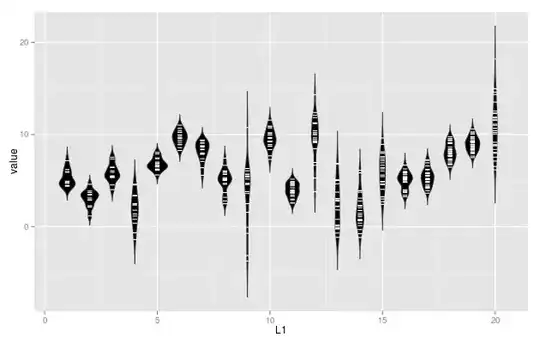

summary(run.match)

# Min. 1st Qu. Median Mean 3rd Qu. Max.

# 0.6365 0.7762 0.8270 0.8426 0.8937 1.0000

1. In R, CV's own @SalMagnifico has a function to do this in his rcompanion package that can compute this, make the bias correction for you and compute a confidence interval for it. There are likely macros for other software.

- 132,789

- 81

- 357

- 650