If the confidence intervals for two parameters overlap, can we say that the two parameters are equal to each other? I have read in some articles that this doesn't always hold, so what would be the proper confidence interval construction?

-

[This page](https://stats.stackexchange.com/q/18215/28500) discusses this issue extensively. [This answer](https://stats.stackexchange.com/a/18259/28500) has a table illustrating, under reasonable assumptions, the approximate relationship between non-overlap of confidence intervals and p-values for the significance of differences between means. For example, non-overlap of 95% confidence intervals could represent p < 0.005 for the difference in means. – EdM Mar 07 '21 at 22:26

-

Thank you for the suggestions given. I'll go over them and check. – Maybeline Lee Mar 07 '21 at 22:41

2 Answers

Suppose you have $n=20$ observations from $\mathsf{Norm}(\mu = 1,\sigma=1.2),$ as sampled in R below:

set.seed(307)

x = rnorm(20, 1, 1.2)

a = mean(x); a

[1] 0.8973074

s = sd(x); s

[1] 1.218098

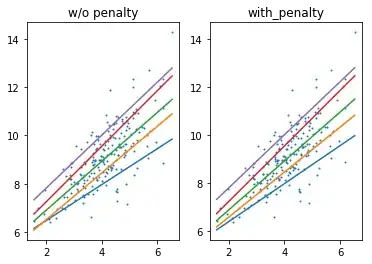

Commonly used 95% CIs for $\mu$ and $\sigma,$ respectively, are $(.327,1.467)$ and $(.926, 1.779),$ which overlap even though $\mu= 1 \ne \sigma = 1.2.$

CI.mu = t.test(x)$conf.int; CI.mu

[1] 0.3272197 1.4673950

attr(,"conf.level")

[1] 0.95

CI.sg = sqrt(19*var(x)/qchisq(c(.975,.025), 19)); CI.sg

[1] 0.9263522 1.7791201
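For reference, the `CI.sg` line is an instance of the usual chi-squared interval for a normal standard deviation. Written out (the quantile notation $\chi^2_{p,\,\nu}$ is introduced here only for illustration; it means what `qchisq(p, nu)` returns), with $S^2$ the sample variance:

$$\left(\sqrt{\frac{(n-1)S^2}{\chi^2_{0.975,\,n-1}}},\;\; \sqrt{\frac{(n-1)S^2}{\chi^2_{0.025,\,n-1}}}\right)$$

Here $n = 20,$ so $n-1 = 19.$ The larger quantile goes in the denominator of the lower limit, which is why the code passes `c(.975,.025)` in that order.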

However, for $n = 200$ observations, both confidence intervals are shorter. For my choices of $\mu$ and $\sigma,$ the following sample gives non-overlapping CIs (on either side of $1.1$).

set.seed(2021)

x = rnorm(200, 1, 1.2)

a = mean(x); a

[1] 0.8943602

s = sd(x); s

[1] 1.252382

CI.mu = t.test(x)$conf.int; CI.mu

[1] 0.7197301 1.0689903 # below 1.1

attr(,"conf.level")

[1] 0.95

CI.sg = sqrt(199*var(x)/qchisq(c(.975,.025), 199)); CI.sg

[1] 1.140497 1.388797 # above 1.1
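Each seed above gives only one sample. As a rough check of how often the two intervals overlap at each sample size, the same construction can be repeated many times. This is only a sketch: the helper name `ci_overlap`, the seed, and the number of replications `m` are illustrative choices, not part of the computations above.

```r
# Hypothetical helper: simulate one normal sample and report whether the
# t interval for mu and the chi-squared interval for sigma overlap.
ci_overlap <- function(n, mu = 1, sigma = 1.2, level = 0.95) {
  x <- rnorm(n, mu, sigma)
  alpha <- 1 - level
  ci_mu <- t.test(x, conf.level = level)$conf.int
  ci_sg <- sqrt((n - 1) * var(x) / qchisq(c(1 - alpha/2, alpha/2), n - 1))
  # Two intervals overlap when each one starts before the other ends
  ci_mu[1] <= ci_sg[2] && ci_sg[1] <= ci_mu[2]
}

set.seed(1)   # arbitrary seed, for reproducibility only
m <- 2000     # number of simulated samples per sample size
p20  <- mean(replicate(m, ci_overlap(20)))
p200 <- mean(replicate(m, ci_overlap(200)))
c(n20 = p20, n200 = p200)   # proportion of samples with overlapping CIs
```

Under these settings the overlap proportion should drop as $n$ grows. Either way, whether a particular pair of intervals overlaps depends on the sample at hand, so overlap by itself is not evidence that $\mu = \sigma.$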


I'm not sure exactly what you are trying to do. You asked for a 'proper' kind of CI. I think what's below may be better than comparing two CIs. I'm putting this in a separate answer so that it can be deleted if it misses your point.

If you are trying to get a CI for the difference between the two parameters, you might consider a 95% parametric bootstrap confidence interval for the difference and see whether it includes $0.$

Specifically, to distinguish between normal $\mu$ and $\sigma,$ you might do something like the procedure below:

#sample of size n

set.seed(1234)

n = 150

x = rnorm(n, 1, 1.2)

a.obs = mean(x); a.obs; s.obs = sd(x); s.obs

[1] 0.8825818

[1] 1.151906

d.obs = s.obs - a.obs; d.obs

[1] 0.2693245

# parametric bootstrap CI for d = sg - mu

set.seed(4321)

B = 3000; d.re = numeric(B)

for(i in 1:B) {

x.re = rnorm(n, a.obs, s.obs)

d.re[i] = sd(x.re)- mean(x.re) }

q = quantile(d.re, c(.025,.975)); q

2.5% 97.5%

0.04791377 0.48916711

hdr = "Simulated Dist'n of D (SD - Mean)"

hist(d.re, prob=T, col="skyblue2", main=hdr)

abline(v = q, col="red", lwd=2, lty="dotted")

Note: For the normal case there may be a suitable CI or test for the difference between $\sigma$ and $\mu$ that I don't know about. But something similar to the bootstrap above might work, and might work even for non-normal distributions with more than one parameter. This is almost the most elementary type of bootstrap, and for various kinds of data other styles of bootstrap might be more appropriate.
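As one example of another style, a nonparametric percentile bootstrap resamples the observed data with replacement instead of drawing from a fitted normal distribution. The sketch below regenerates the same sample as above so that it runs on its own. The names `d.np` and `q.np` are illustrative, and a percentile interval is only one of several possible bootstrap intervals.

```r
# Same simulated sample as in the parametric bootstrap above
set.seed(1234)
n <- 150
x <- rnorm(n, 1, 1.2)

# Nonparametric bootstrap: resample the data themselves, so no normal
# model is assumed when generating the re-samples
set.seed(4321)
B <- 3000
d.np <- replicate(B, {
  x.re <- sample(x, n, replace = TRUE)
  sd(x.re) - mean(x.re)
})

# Percentile 95% interval for d = sigma - mu
q.np <- quantile(d.np, c(.025, .975)); q.np

# Does the interval exclude 0?
excludes.zero <- (q.np[1] > 0) || (q.np[2] < 0); unname(excludes.zero)
```

As with the parametric version, an interval that excludes $0$ would suggest that $\sigma$ and $\mu$ differ, while an interval that includes $0$ leaves the question open.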
