I am learning about RNNs, especially seq2seq models that use LSTMs. I am wondering what exactly the encoder in such models does. To check that I've understood the rest of an LSTM-based seq2seq model correctly, here is my understanding:

Encoder:

- Gets the input

- Outputs a hidden state

Decoder:

- Gets the hidden state from the encoder

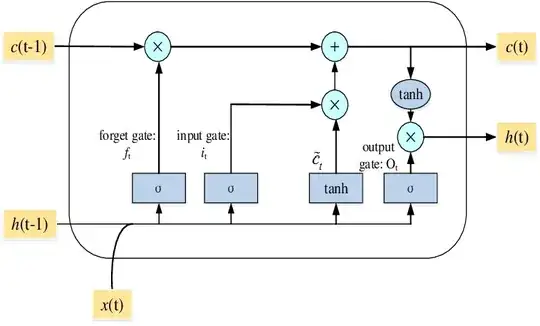

- Feeds the hidden state, the cell state, the previous prediction or previous label (the prediction at test time, the label at training time with teacher forcing), and one more input I don't understand (x(t) in the picture above) into an LSTM cell. Inside the cell, the hidden state and cell state are updated and a prediction is made. This repeats until the end of the sentence is predicted.
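The decoder loop I described can be sketched in Python like this. `step` is a hypothetical stand-in for the LSTM cell plus its output layer, and the `SOS`/`EOS` token ids are assumptions; the sketch only shows how the input at each step switches between the true previous label (training with teacher forcing) and the decoder's own previous prediction (testing).

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical decoder step: (input_token, state) -> (predicted_token, new_state).
# In an LSTM decoder, `state` would be the (hidden, cell) pair.
Step = Callable[[int, object], Tuple[int, object]]

SOS, EOS = 0, 1  # assumed start-of-sequence / end-of-sequence token ids


def decode(step: Step, encoder_state: object,
           targets: Optional[List[int]] = None, max_len: int = 50) -> List[int]:
    """Run the decoder loop.

    With `targets` (training, teacher forcing), the input at step t is the
    ground-truth token t-1. Without it (testing), the input is the decoder's
    own previous prediction, and decoding stops once EOS is predicted.
    """
    state = encoder_state      # decoder starts from the encoder's final state
    prev = SOS                 # the first input is the start token
    predictions: List[int] = []
    n_steps = len(targets) if targets is not None else max_len
    for t in range(n_steps):
        pred, state = step(prev, state)
        predictions.append(pred)
        if targets is not None:
            prev = targets[t]  # teacher forcing: feed the true label
        else:
            if pred == EOS:    # end of sentence predicted
                break
            prev = pred        # testing: feed back the own prediction
    return predictions
```

With teacher forcing, a wrong prediction at one step does not affect the next input; at test time it does, because the prediction itself becomes the next input.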

If I understood this correctly, this LSTM cell exists only in the decoder of an LSTM-based seq2seq model. So that is what happens inside the decoder: predicting.

But what happens to the input inside the encoder? Why does input go in and a hidden state come out? Does anything else come out, too? Are the inputs somehow transformed and added to the hidden state? Are they even used there?
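To make the question concrete, here is a minimal NumPy sketch of one possible encoder. It assumes the encoder steps the same kind of LSTM cell over the input sequence, which is exactly the assumption I'd like confirmed. The names `lstm_cell` and `encode`, the stacked-gate weight layout, and the gate order are illustrative choices, not taken from any library.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def lstm_cell(x, h, c, W, U, b):
    """One step of a standard LSTM cell.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) bias.
    Assumed gate order in the stacked weights: input, forget, candidate, output.
    """
    H = h.shape[0]
    z = W @ x + U @ h + b        # the input x enters every gate here
    i = sigmoid(z[0:H])          # input gate
    f = sigmoid(z[H:2 * H])      # forget gate
    g = np.tanh(z[2 * H:3 * H])  # candidate cell values
    o = sigmoid(z[3 * H:4 * H])  # output gate
    c_new = f * c + i * g        # updated cell state
    h_new = o * np.tanh(c_new)   # new hidden state
    return h_new, c_new


def encode(xs, W, U, b):
    """Run the LSTM over xs of shape (T, D), starting from zero states.

    Returns the per-step hidden states (T, H) and the final (h, c) pair,
    which would be handed to the decoder as its initial state.
    """
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    outputs = []
    for x in xs:                 # e.g. one word embedding per time step
        h, c = lstm_cell(x, h, c, W, U, b)
        outputs.append(h)
    return np.stack(outputs), (h, c)


rng = np.random.default_rng(0)
T, D, H = 5, 3, 4                # sequence length, input size, hidden size (arbitrary)
W = rng.normal(size=(4 * H, D))
U = rng.normal(size=(4 * H, H))
b = np.zeros(4 * H)
xs = rng.normal(size=(T, D))
outputs, (h_T, c_T) = encode(xs, W, U, b)
```

In this sketch the inputs are not added to the hidden state directly; they pass through the gates, and the final hidden state is simply the last per-step output.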