I have an imbalanced dataset and am using XGBoost to create a predictive model.

```python
from xgboost import XGBClassifier

xgb = XGBClassifier(scale_pos_weight=10, reg_alpha=1)
```
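
For reference, the XGBoost parameter documentation suggests `sum(negative instances) / sum(positive instances)` as a typical starting value for `scale_pos_weight`. The value of 10 above may or may not match the actual class ratio. Here is a minimal sketch that computes that ratio from a 0/1 label array. The function name is hypothetical and is not part of XGBoost.

```python
import numpy as np


def suggested_scale_pos_weight(y):
    """Ratio of negative (0) to positive (1) examples in a 0/1 label array.

    Hypothetical helper: mirrors the heuristic suggested in the XGBoost docs.
    """
    y = np.asarray(y)
    n_pos = np.sum(y == 1)
    n_neg = np.sum(y == 0)
    if n_pos == 0:
        raise ValueError("y contains no positive examples")
    return float(n_neg / n_pos)


# Example: 10 negatives and 1 positive give a ratio of 10.0
print(suggested_scale_pos_weight([0] * 10 + [1]))  # 10.0
```

With real data this would be called as `suggested_scale_pos_weight(y_train)`, and the result passed to `XGBClassifier(scale_pos_weight=...)`.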

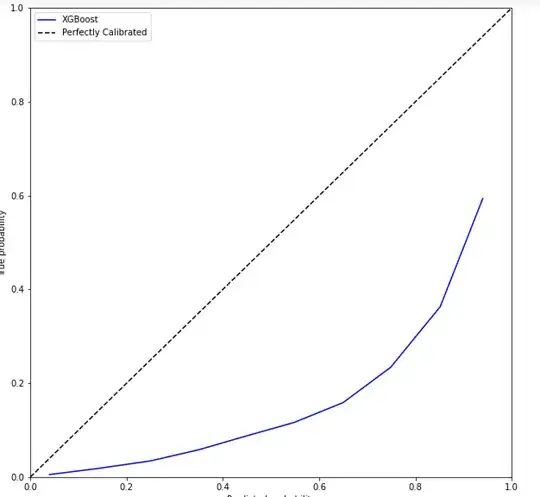

Although my recall and specificity are acceptable, I would like to improve the calibration curve. However, when I calibrate the model with isotonic regression, recall and specificity both drop dramatically (mean cross-validated recall falls from 0.74 to 0.17). Any thoughts on how to keep these metrics while improving calibration?
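
A calibration (reliability) curve compares two quantities within each probability bin: the mean predicted probability and the observed fraction of positives. Here is a minimal NumPy sketch of that computation. It assumes uniform-width bins and that `proba` holds positive-class probabilities such as `predict_proba(X)[:, 1]`. The function name is hypothetical; scikit-learn's `sklearn.calibration.calibration_curve` provides a ready-made equivalent.

```python
import numpy as np


def calibration_points(y_true, proba, n_bins=10):
    """Per uniform-width bin on [0, 1]: mean predicted probability and the
    observed fraction of positives. Empty bins are skipped."""
    y_true = np.asarray(y_true, dtype=float)
    proba = np.asarray(proba, dtype=float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    # Digitize against the interior edges -> bin index 0 .. n_bins - 1
    idx = np.digitize(proba, edges[1:-1])
    mean_pred, frac_pos = [], []
    for b in range(n_bins):
        in_bin = idx == b
        if in_bin.any():
            mean_pred.append(proba[in_bin].mean())
            frac_pos.append(y_true[in_bin].mean())
    return np.array(mean_pred), np.array(frac_pos)


# For a well-calibrated model, frac_pos is close to mean_pred in every bin
y = [0, 0, 1, 1]
p = [0.1, 0.6, 0.4, 0.9]
mean_pred, frac_pos = calibration_points(y, p, n_bins=2)
# mean_pred -> [0.25, 0.75], frac_pos -> [0.5, 0.5]
```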

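```python
import numpy as np
from sklearn.calibration import CalibratedClassifierCV
from sklearn.model_selection import cross_val_score

# Isotonic calibration of the class-weighted XGBoost model, 5-fold internal CV
calibrated = CalibratedClassifierCV(xgb, method='isotonic', cv=5)
calibrated.fit(X_train, y_train)

# Note: cross_val_score clones and refits the estimator on each fold of X_test
scores = cross_val_score(calibrated, X_test, y_test, cv=5, scoring='recall')
print(np.mean(scores))  # prints 0.17

# Uncalibrated model, evaluated the same way
xgb.fit(X_train, y_train)
scores = cross_val_score(xgb, X_test, y_test, cv=5, scoring='recall')
print(np.mean(scores))  # prints 0.74
```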
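
Both `XGBClassifier.predict` and `CalibratedClassifierCV.predict` turn a binary probability into a label with an implicit 0.5 cut-off. Recall and specificity therefore depend on where the probabilities sit relative to that cut-off, not on calibration as such. One plausible reading of the drop is that `scale_pos_weight=10` pushes the raw probabilities upward and isotonic calibration pulls them back toward the true (low) positive rate, so far fewer examples cross 0.5. This is offered as an assumption, not a diagnosis. Below is a minimal sketch that computes recall and specificity at an arbitrary threshold and sweeps several candidate thresholds. The helper names are hypothetical.

```python
import numpy as np


def recall_specificity(y_true, proba, threshold=0.5):
    """Recall (true positive rate) and specificity (true negative rate) when
    an example is labelled positive if its probability exceeds `threshold`."""
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(proba) > threshold
    tp = np.sum(y_pred & y_true)
    fn = np.sum(~y_pred & y_true)
    tn = np.sum(~y_pred & ~y_true)
    fp = np.sum(y_pred & ~y_true)
    return float(tp / (tp + fn)), float(tn / (tn + fp))


def sweep_thresholds(y_true, proba, thresholds):
    """List of (threshold, recall, specificity) for each candidate threshold."""
    return [(t, *recall_specificity(y_true, proba, t)) for t in thresholds]


y = [0, 0, 1, 1]
p = [0.1, 0.6, 0.4, 0.9]
print(recall_specificity(y, p))       # (0.5, 0.5)
print(recall_specificity(y, p, 0.3))  # (1.0, 0.5)
```

With the calibrated model, `proba` would come from something like `calibrated.predict_proba(X_val)[:, 1]` on a hypothetical held-out validation set `X_val`. The threshold would then be chosen there rather than on the test set.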

Thanks in advance!