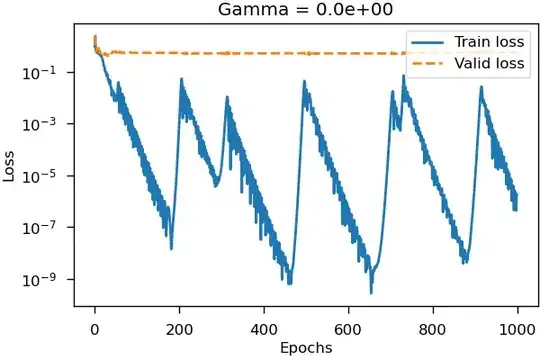

I get the following loss behavior when training a multilayer perceptron (MLP) with mean squared error (MSE) loss on some synthetic data, using Adam with a learning rate of 1e-1.
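For concreteness, here is a minimal, dependency-free sketch of a comparable setup: full-batch Adam on a one-hidden-layer tanh MLP with MSE loss. The target function (`sin(3x)` plus noise), the hidden width, the initialization, the seeds and the helper names are illustrative assumptions, not my exact code:

```python
import math
import random


def make_data(n=64, seed=0):
    """Hypothetical synthetic regression data: y = sin(3x) + small Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [math.sin(3.0 * x) + 0.05 * rng.gauss(0.0, 1.0) for x in xs]
    return xs, ys


def init_params(hidden, seed=1):
    """Flat parameter list laid out as [w1 (hidden), b1 (hidden), w2 (hidden), b2]."""
    rng = random.Random(seed)
    w_in = [rng.gauss(0.0, 1.0) for _ in range(2 * hidden)]  # w1 and b1
    w_out = [rng.gauss(0.0, 1.0 / math.sqrt(hidden)) for _ in range(hidden)]  # w2
    return w_in + w_out + [0.0]  # b2 starts at zero


def loss_and_grad(p, xs, ys, hidden):
    """MSE of a 1-hidden-layer tanh MLP and its gradient w.r.t. the flat parameters."""
    w1, b1 = p[:hidden], p[hidden:2 * hidden]
    w2, b2 = p[2 * hidden:3 * hidden], p[3 * hidden]
    grad = [0.0] * len(p)
    n = len(xs)
    loss = 0.0
    for x, y in zip(xs, ys):
        h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
        err = sum(w2[j] * h[j] for j in range(hidden)) + b2 - y
        loss += err * err / n
        d = 2.0 * err / n  # dL/d(prediction) for this sample
        for j in range(hidden):
            dz = d * w2[j] * (1.0 - h[j] * h[j])  # backprop through tanh
            grad[j] += dz * x                     # w1
            grad[hidden + j] += dz                # b1
            grad[2 * hidden + j] += d * h[j]      # w2
        grad[3 * hidden] += d                     # b2
    return loss, grad


def train_adam(lr=1e-1, epochs=500, hidden=16, beta1=0.9, beta2=0.999, eps=1e-8):
    """Full-batch Adam (Kingma & Ba update with bias correction); returns per-epoch losses."""
    xs, ys = make_data()
    p = init_params(hidden)
    m = [0.0] * len(p)  # first-moment estimates
    v = [0.0] * len(p)  # second-moment estimates
    losses = []
    for t in range(1, epochs + 1):
        loss, g = loss_and_grad(p, xs, ys, hidden)
        losses.append(loss)
        for i, gi in enumerate(g):
            m[i] = beta1 * m[i] + (1 - beta1) * gi
            v[i] = beta2 * v[i] + (1 - beta2) * gi * gi
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            p[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return losses
```

Calling `losses = train_adam()` returns one loss value per epoch that can be plotted against the epoch index; rerunning with a smaller `lr` (for example `1e-3`) is a simple way to check whether the bumps in the curve come from the step size.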

As far as I can tell from what I have read (for example, the question "Training loss increases with time"), the increase can be attributed to the learning rate being so large that the optimizer pushes the model out of the minimum. However, what I do not understand is why the second minimum (around epoch 450) is significantly lower than the first one (around epoch 200).
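To check the "learning rate too large" explanation on something tiny, here is a sketch that runs the same Adam update rule on the one-dimensional quadratic f(x) = x² (a hypothetical stand-in for the real loss; `adam_on_quadratic` and `count_increases` are illustrative helpers). Because Adam divides each step by a running estimate of the gradient's magnitude, its steps are on the order of `lr` regardless of the gradient's scale, so with `lr=1e-1` the iterate overshoots x = 0 and the loss goes back up:

```python
import math


def adam_on_quadratic(x0=1.0, lr=1e-1, steps=100, beta1=0.9, beta2=0.999, eps=1e-8):
    """Run Adam on f(x) = x**2 starting from x0 and record f before every step."""
    x, m, v = x0, 0.0, 0.0
    history = []
    for t in range(1, steps + 1):
        history.append(x * x)
        g = 2.0 * x  # gradient of x**2
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias-corrected first moment
        v_hat = v / (1 - beta2 ** t)  # bias-corrected second moment
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return history


def count_increases(history):
    """Number of steps at which the loss went up instead of down."""
    return sum(1 for a, b in zip(history, history[1:]) if b > a)
```

With `lr=1e-3` the same 100 steps never reach x = 0, so `count_increases(adam_on_quadratic(lr=1e-3))` is 0. This toy only illustrates overshooting; it does not by itself explain why a later minimum can be lower than an earlier one.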

Could you please point me to where I should read about such behavior and why it occurs? Thank you!