I ran the following code on Google Colaboratory. In short, I took some housing-price data for a typical regression problem and trained the same simple neural network under three different cross-validation schemes: KFold without shuffling, KFold with shuffling, and ShuffleSplit.

I ran each scheme 20 times, computing the out-of-sample mean squared prediction error each time (this is also the loss function I used for the neural network).
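For reference, the setup can be sketched roughly as follows. This is a simplified sketch rather than the notebook itself: it assumes synthetic data from `make_regression` in place of the housing data, uses `Ridge` as a fast stand-in for the neural network, and the choices `n_splits=5` and `test_size=0.2` are assumptions made for illustration.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold, ShuffleSplit, cross_val_score

# Synthetic stand-in for the housing data (assumption: not the real dataset).
X, y = make_regression(n_samples=200, n_features=8, noise=10.0, random_state=0)


def run_once(seed):
    """Return the mean out-of-sample MSE for each of the three CV schemes."""
    splitters = {
        "KFold, shuffle=False": KFold(n_splits=5, shuffle=False),
        "KFold, shuffle=True": KFold(n_splits=5, shuffle=True, random_state=seed),
        "ShuffleSplit": ShuffleSplit(n_splits=5, test_size=0.2, random_state=seed),
    }
    model = Ridge(alpha=1.0)  # stand-in for the neural network (assumption)
    results = {}
    for name, cv in splitters.items():
        # scikit-learn reports the negated MSE, so flip the sign back.
        scores = cross_val_score(
            model, X, y, cv=cv, scoring="neg_mean_squared_error"
        )
        results[name] = -scores.mean()
    return results


n_runs = 20
runs = [run_once(seed) for seed in range(n_runs)]

# Variance of the mean out-of-sample MSE across the repeated runs.
variances = {name: np.var([r[name] for r in runs]) for name in runs[0]}
for name, v in variances.items():
    print(f"{name}: variance over {n_runs} runs = {v:.4f}")
```

One caveat about this sketch: because `Ridge` is deterministic and unshuffled `KFold` produces the same folds every time, the `shuffle=False` entry comes out as essentially zero here. With a neural network, the random weight initialisation would still vary from run to run.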

These were the results I got...

These results seem to indicate something odd: when I shuffle, the variance of the out-of-sample performance increases, whereas it was supposed to decrease according to this answer and others linked therein. You may say that a plot of only 20 simulations is too little to go on, but the code is very slow to run, even on Colab. I'm trying to improve it; in the meantime, I've started a run with 100 simulations, and I may have the results later today.

Am I doing something wrong? Why is shuffling creating more variance?

Edit: here's an update. Colab has finished running the 100 simulations, and the variances are:

np.var(listKFFalse),np.var(listKFTrue),np.var(listSS)

(6228.193219510661, 16835.90109036969, 5500.073516188342)

The CV with ShuffleSplit had the smallest variance, but mostly because two simulations (one in KFold with shuffling and one in KFold without) sent the variances of those two methods sky high. If we treated those two simulations as outliers, the ranking would change completely. Still, I was expecting completely different behaviour from reading some of the answers on CV.SE.
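To illustrate how much a single extreme run can dominate `np.var`, here is a small sketch comparing the variance with two outlier-resistant measures of spread (interquartile range and median absolute deviation). The helper `spread_summary` and the numbers are made up for illustration; they are not my actual results.

```python
import numpy as np


def spread_summary(values):
    """Return the variance plus two outlier-resistant measures of spread."""
    values = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(values, [25, 75])
    mad = np.median(np.abs(values - np.median(values)))
    return {"var": float(np.var(values)), "iqr": float(q3 - q1), "mad": float(mad)}


# Made-up MSE values for illustration only (hypothetical, not the real runs).
clean = [100.0, 102.0, 98.0, 101.0, 99.0]
with_outlier = clean + [500.0]  # one extreme simulation added

print("without outlier:", spread_summary(clean))
print("with outlier:   ", spread_summary(with_outlier))
```

The same function could be applied to `listKFFalse`, `listKFTrue` and `listSS` to check whether the ranking of the three methods depends on those two extreme simulations.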

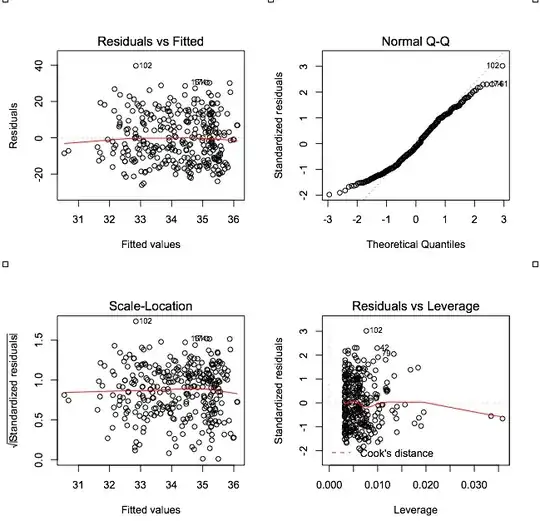

Edit 2: I've added two new sections to my Colab file: i) a simple neural network with only 10 units per layer (maybe 64 was overfitting right from the start); however, the results are now even worse. ii) A Ridge regression (to have some regularisation, like the NN with its epochs) with CV (but no shuffling). The Ridge gives far better results... Frankly, I'm baffled. =D