This is along the lines you started to try.

$\sum{X_i}$ is binomial with parameters $p$ and $mn$.

The likelihood function is

$$f(m)$$

$$=\frac{\Gamma(m+n+1)}{\Gamma(m+n-\sum{X_i}+1)\Gamma(\sum{X_i}+1)}p^{\sum{X_i}}(1-p)^{mn-\sum{X_i}}$$

for $\max{X_i}\le m$.

Start by treating the likelihood function as if it were a function of a continuous variable $m \in (\max{X_i},\infty)$.

The derivative of the log of the likelihood is

$$f'(m)=\log{(1-p)}+\psi (mn+1)-\psi{\left(mn-\sum{X_i}+1\right)}$$

where $\psi$ is the digamma function.

This simplifies to

$$f'(m)=\log{(1-p)}+\frac{1}{mn-\sum{X_i}+1}+...+\frac{1}{mn}$$

The second derivative is

$$f''(m)=\psi^{(1)} (mn+1)-\psi^{(1)}{\left(mn-\sum{X_i}+1\right)}$$

where $\psi^{(1)}$ is the polygamma function.

This simplifies to

$$f''(m)=-\frac{1}{\left(mn-\sum{X_i}+1 \right)^2}-...-\frac{1}{(mn)^2}$$

The second derivative is always negative.

That means the derivative is a decreasing function.

Also, $f'(m)\rightarrow \log{(1-p)}<0$ as $m\rightarrow \infty$ because there are a fixed, finite number of other terms in $f'(m)$ and each of those other terms converge to 0.

If the derivative at the lowest possible $m$, that is at $\max{X_i}$, is less than or equal to 0, then the mle is $\max{X_i}$. On the other hand, if $f'(\max{X_i})>0$, then let $M$ be the largest integer such that $f'(M)>0$. The mle is either $M$ or $M+1$. Just plug both of them into the likelihood function to see which of them makes $f$ bigger.

Note that

a) $\left(\max{X_i},\sum{X_i} \right)$ is a sufficient statistic

b) $$f'(m)=\log{(1-p)}+\sum_{j=1}^{\sum{X_i}}\frac{1}{mn-\sum{X_i}+j}\approx \log{(1-p)}+\int_{mn-\sum{X_i}+0.5}^{mn+0.5}x^{-1}dx$$

$$=\log{(1-p)}+\log{(mn+0.5)}-\log{(mn-\sum{X_i}+0.5)}$$

and the approximate solution to $f'(m)=0$ is therefore $m\approx \frac{\sum{X_i}-0.5 p}{n p}$.

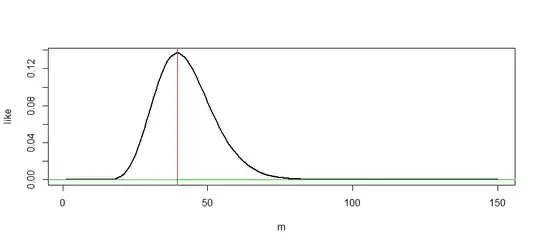

Here are some examples from simulated data using the following R program:

mlem=function(X,p) {

sX=sum(X)

mtry=mX=max(X)

if ((log(1-p)+sum(1/c((mtry*n+sX+1):(mtry*n))))<0) {

mle=mtry

mtry=mtry+1

} else {

while ((log(1-p)+sum(1/c((mtry*n-sX+1):(mtry*n))))>0) mtry=mtry+1

if (dbinom(sX,n*(mtry-1),p)>dbinom(sX,n*mtry,p)) mle=mtry-1 else mle=mtry

}

#return the mle, max Xi, Max integer M with f'(M)>0, approximate solution

return(c(mle,mX,mtry-1,round((sX-0.5*p)/(length(X)*p))))

}

set.seed(123)

for (i in 1:3) {

p=runif(1)

m=30+round(20*runif(1))

n=10+round(10*runif(1))

X=rbinom(n,m,p)

mle=mlem(X,p)

print(c(m,n,p))

print(X)

print(mlem(X,p))

}

The first line shows the true values of the parameters used to simulate the data. The second line shows the simulated values of $X_i$:

Example 1.

m=46 n=14 p=0.2875775

17 18 8 13 17 14 13 19 13 15 14 9 17 11

mle= 49, M= 49

Example 2.

m=37 n=20 p=0.04205953

3 2 2 5 2 2 2 2 1 0 4 3 2 2 0 1 2 1 1 1

mle=45, M=45

Example 3.

m=38 n=14 p=0.1428

5 3 3 4 5 4 8 2 5 7 3 6 4 3

mle=31, M=30

These examples show that the mle is not always equal to $M$ or always equal to $M+1$ where $M$ is the largest integer with $f'$ positive; it can be either of those. In all three of those examples, the mle was the nearest integer to $\frac{\sum{X_i}-0.5 p}{n p}$.

Sometimes, the mle is equal to $\max{X_i}$ as in this example simulated from data with $M=10$, $N=20$, and $p=0.8$:

9 7 8 6 6 10 8 6 8 8 6 8 8 8 10 6 9 10 9 6

Here, $f'(10)\approx -0.104$ and so the mle is $10$. Again in this example, it turns out that the mle is the nearest integer to $\frac{\sum{X_i}-0.5 p}{n p}$.

Summary:

If $f'(\max{X_i})<0$, then the mle is $\max{X_i}$.

Otherwise, find the largest integer $M$ such that $f'(M)>0$ using the formula above. $f'(x)$ is a decreasing function that goes to $-\infty$ as $x\rightarrow \infty$, so it is guaranteed that such an $M$ can be found. That $M$ will be close to $\frac{\sum{X_i}-0.5 p}{n p}$. Either $M$ or $M+1$ will be the mle.