I am trying to understand the ArcFace implementation and I am stuck on one condition.

If $\cos(t) \le \cos(\pi - m)$, then $t + m \ge \pi$. In this case $\cos(t+m)$ is no longer computed directly but is replaced by $\cos(t) - m \sin(m)$. Could you explain this step?
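To make sure I am reading the condition correctly, here is a minimal scalar sketch of that branch in plain Python. The helper name `margin_logit` and the value `m = 0.5` are my own choices for illustration and are not taken from the ArcFace code.

```python
import math

def margin_logit(cos_t: float, m: float) -> float:
    """Scalar version of the tf.where branch (hypothetical helper)."""
    t = math.acos(cos_t)            # angle in [0, pi]
    th = math.cos(math.pi - m)      # threshold on cos(t)
    mm = m * math.sin(m)            # penalty used by the fallback branch
    if cos_t > th:                  # same as t + m < pi, since cos decreases on [0, pi]
        return math.cos(t + m)
    return cos_t - mm               # used when t + m >= pi

m = 0.5  # assumed margin, for illustration only
th = math.cos(math.pi - m)
for t in (1.0, 2.0, math.pi - m - 0.01, math.pi - m + 0.01, 3.0):
    cos_t = math.cos(t)
    print(f"t={t:.3f}  t+m<pi: {t + m < math.pi}  "
          f"cos_t>th: {cos_t > th}  logit={margin_logit(cos_t, m):.4f}")
```

For every angle printed, the two boolean columns agree, so the fallback is taken exactly when $t + m$ reaches or exceeds $\pi$.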

While looking for an explanation, I found a GitHub issue suggesting that the term $\cos(t) - m \sin(m)$ is the Taylor expansion of $\cos(t+m)$, but I still don't understand the real benefit of that. If we use the Taylor expansion, then $\cos(t) - m \sin(m)$ is only an approximation of $\cos(t+m)$, so what is the advantage?
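For reference, this is the first-order expansion I would expect, written out so the two terms can be compared. I am assuming the issue meant an expansion in the margin $m$ around $m = 0$:

$$
\cos(t+m) = \cos(t) - m\,\sin(t) + O(m^2)
$$

The code uses $\sin(m)$ where this expansion has $\sin(t)$. The two agree exactly at the threshold $t = \pi - m$, because $\sin(\pi - m) = \sin(m)$:

$$
\Big[\cos(t) - m\,\sin(t)\Big]_{t = \pi - m} = \cos(\pi - m) - m\,\sin(m)
$$

So one possible reading is that the linear term is frozen at its threshold value, but this is my own guess and I have not seen it confirmed by the authors.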

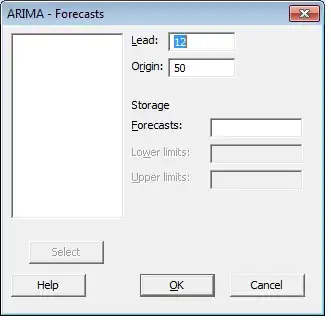

Here is the code fragment I based this on:

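```python
import math
import tensorflow as tf

# Fragment: method of the layer class (class definition not shown)
def call(self, embds, labels):
    # Constants derived from the angular margin m = self.margin
    self.cos_m = tf.identity(math.cos(self.margin), name='cos_m')
    self.sin_m = tf.identity(math.sin(self.margin), name='sin_m')
    # Threshold on cos(t): cos(t) > th is equivalent to t + m < pi
    self.th = tf.identity(math.cos(math.pi - self.margin), name='th')
    # Penalty m * sin(m) used by the fallback branch
    self.mm = tf.multiply(self.sin_m, self.margin, name='mm')

    # Cosine of the angle between normalized embeddings and class weights
    normed_embds = tf.nn.l2_normalize(embds, axis=1, name='normed_embd')
    normed_w = tf.nn.l2_normalize(self.w, axis=0, name='normed_weights')
    cos_t = tf.matmul(normed_embds, normed_w, name='cos_t')
    sin_t = tf.sqrt(1. - cos_t ** 2, name='sin_t')

    # cos(t + m) = cos(t) * cos(m) - sin(t) * sin(m)
    cos_mt = tf.subtract(
        cos_t * self.cos_m, sin_t * self.sin_m, name='cos_mt')
    # Fall back to cos(t) - m * sin(m) where t + m >= pi
    cos_mt = tf.where(cos_t > self.th, cos_mt, cos_t - self.mm)

    # Apply the margin only to the target class of each sample
    mask = tf.one_hot(tf.cast(labels, tf.int32), depth=self.num_classes,
                      name='one_hot_mask')
    logists = tf.where(mask == 1., cos_mt, cos_t)
    logists = tf.multiply(logists, self.logist_scale, 'arcface_logist')
    return logists
```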

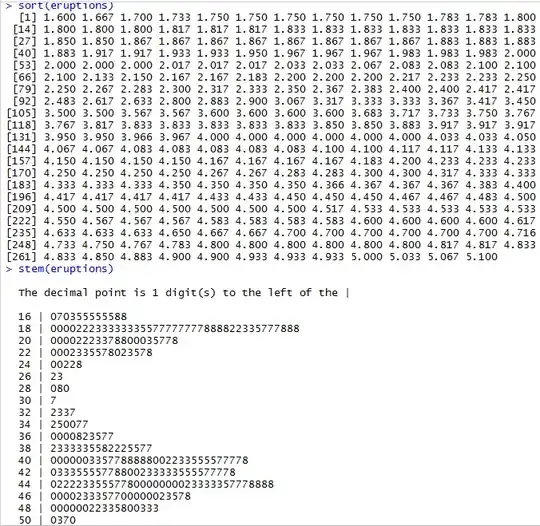

I checked how the network performs with this condition and, as an alternative, with plain $\cos(t)$ assigned whenever $t + m > \pi$. I trained the network with the ArcFace loss on MNIST with embedding size 2 and plotted the embeddings. The plots are very similar, and I cannot see any impact of using $\cos(t) - m \sin(m)$ instead of $\cos(t)$.
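Since the plots did not separate the two variants, I also compared them outside of training by tabulating the target-class logit as a function of the angle $t$. This is only a scalar sketch in plain Python. The helper names `target_logit` and `is_non_increasing`, the grid of 1001 angles, and `m = 0.5` are my own assumptions for illustration.

```python
import math

def target_logit(t: float, m: float, fallback: str) -> float:
    """Target-class logit before scaling, as a function of the angle t.

    fallback="penalty": cos(t) - m * sin(m), as in the code fragment
    fallback="plain":   cos(t), the alternative I tried
    """
    if t + m < math.pi:
        return math.cos(t + m)
    if fallback == "penalty":
        return math.cos(t) - m * math.sin(m)
    return math.cos(t)

def is_non_increasing(values) -> bool:
    """True if no value exceeds its predecessor (small float tolerance)."""
    return all(b <= a + 1e-12 for a, b in zip(values, values[1:]))

m = 0.5  # assumed margin, for illustration only
angles = [i * math.pi / 1000 for i in range(1001)]  # grid over [0, pi]
for fallback in ("penalty", "plain"):
    logits = [target_logit(t, m, fallback) for t in angles]
    print(fallback, "non-increasing in t:", is_non_increasing(logits))
```

On this grid the penalty variant keeps the logit non-increasing over the whole range of $t$, while the plain variant jumps upward at $t = \pi - m$ (from about $-1$ to $\cos(\pi - m)$). This says nothing about training behaviour by itself, which may be why my embedding plots look the same.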

I understand (and see) that we should modify the matrix cos_mt when $t + m > \pi$, but I don't understand why it is done this way. Could you help me?