You have to throw it at least 1000 times to have a positive probability of 100 for each. The probability is always less than 1 no matter how many time you throw the die.

Suppose I throw the die $N \ge 1000$ times and let $X_i$ denote the number of times side $i$ is observed. $(X_1,...X_{11})$ has a multinomial distribution.

$$P[X_1 \ge 100, X_2 \ge 100, ...X_{10} \ge 100]$$

$$=\sum_{x_1=100}^{N-900}\sum_{x_2=100}^{N-800-x_1}\sum_{x_3=100}^{N-700-x_1-x_2}...\sum_{x_{10}=100}^{N-\sum_{j=1}^9x_j}P[X_1 =x_1, X_2 =x_2, ...X_{10} =x_{10}]$$

$$=\sum_{x_1=100}^{N-900}\sum_{x_2=100}^{N-800-x_1}\sum_{x_3=100}^{N-700-x_1-x_2}...\sum_{x_{10}=100}^{N-\sum_{j=1}^9x_j}\frac{N!}{x_1!x_2!...x_{10}!(N-\sum_{k=1}^{10}x_k)!}p_1^{x_1}p_2^{x_2}...p_{10}^{x_{10}}p_{11}^{N-\sum_{k=1}^{10}x_k}$$

You definitely do not want to try calculating that unless N is close to 1000.

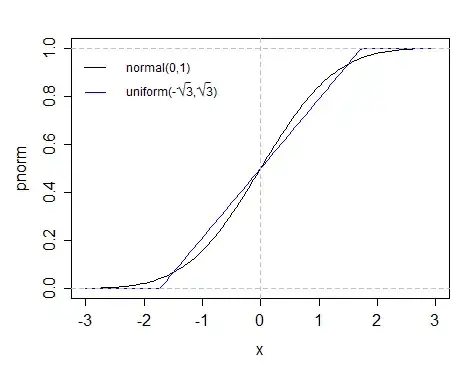

For large $N$, $(X_1,...X_{10})$ is approximately multivariate normal with mean $(0.01 N, ..., 0.01 N)$ and variance matrix that has $0.01\times N\times(1-0.01)$ on the diagonal and $-0.01^2N$ off diagonal. see here

With $N=11600$, there is about 50% chance of having observed 100 of each face from 1-10.

R program:

library(mvtnorm)

N=11600

sigma=matrix(N*sqrt(0.01*0.01)*(0-sqrt(0.01*0.01)),ncol=10,nrow=10)

diag(sigma)=N*sqrt(0.01*0.01)*(1-sqrt(0.01*0.01))

as.numeric(pmvnorm(lower=rep(100,10),upper=rep(Inf,10),mean=rep(0.01*N,10),sigma=sigma))

For 95% chance, use $N=12900$

For 99% chance, use $N=13600$