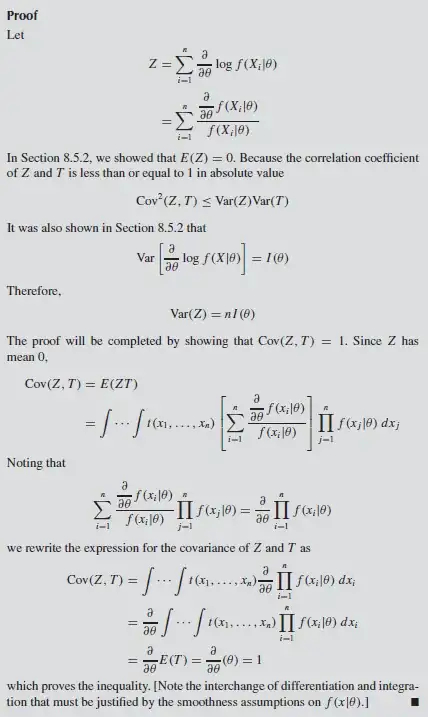

I am slightly confused by the last step of the proof of the Cramér-Rao lower bound in John Rice's *Mathematical Statistics and Data Analysis*.

The last step uses $E[T] = \theta$. I was under the impression that the expectation of an unbiased estimator $T$ is the true parameter of the distribution, $\theta_0$, and therefore $\frac{\partial}{\partial \theta} E[T] = \frac{\partial}{\partial \theta} \theta_0 = 0$. Many of the other proofs in this chapter take care to distinguish $\theta_0$ from $\theta$.
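To make the two readings concrete, here they are side by side. This is only my own restatement of the step, not a quotation from the book. If $E[T] = \theta$ is treated as a function of $\theta$, as the proof seems to do, then

$$
\frac{\partial}{\partial \theta} E[T] = \frac{\partial}{\partial \theta}\, \theta = 1,
$$

whereas if $E[T]$ is the fixed true value $\theta_0$, as I had assumed, then

$$
\frac{\partial}{\partial \theta} E[T] = \frac{\partial}{\partial \theta}\, \theta_0 = 0.
$$

The two readings give different values for the derivative, so which one is intended matters for the final step.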

Could someone explain why $E[T] = \theta$ here, rather than $\theta_0$?