The SciPy function in Python, ttest_ind() by default works with the $t$-test that assumes equal variances. There is a parameter, equal_var = False that switches it to the Welch test where equal variances in the two samples are not assumed.

This seems to suggest that the Welch test should perform better when the two samples have different variances by design. So, I set out to put this to the test. Surprisingly, the conclusion I got was the exact opposite.

from scipy.stats import norm, ttest_ind

a1 = norm.rvs(10, 14, size = 6)

a2 = norm.rvs(13, 3, 100)

p_val1 = ttest_ind(a1, a2)[1]

p_val2 = ttest_ind(a1, a2, equal_var = False)[1]

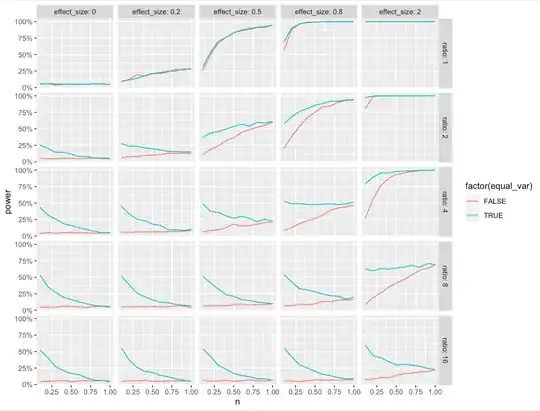

Here, we generate 6 samples from a normal distribution with mean 10 and standard deviation 14. Then 100 samples from another normal distribution with mean 13 and standard deviation 3. It's pretty clear that the two samples have unequal variance. The first $p$-value is from the simple $t$-test that assumed equal variance while the second one is from the Welch test. The first $p$-value is consistently less than 1% while the second one is generally about 30-40%. And since the means are in-fact different, the Welch test is underperforming. One critique here is that I didn't consider the false positive rate, only power. This is rectified in the plot below, which plots the false positive to false negative rates of the two tests, hence considering both.

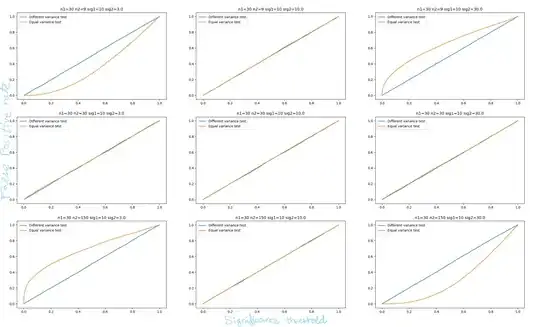

This can be visualized in the alpha-beta profile of the two tests (false positive rate plotted with false negative rate). The two-sample $t$-test has much lower false negative rates than the Welch test (plotted in blue).

Why is the Welch test beaten so handily? Are there other conditions where it might outperform the two sample $t$-test? If not, why ever use it?

EDIT: The plot below had a bug. The actual performance of the two tests is the same in terms of statistical power. See my answer for the corrected plot.