I have a logistic regression with three independent variables. The pairwise correlation coefficients between them are:

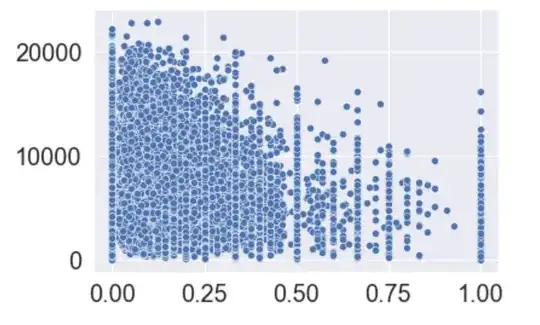

Two of the variables have a correlation coefficient of only 0.1, yet a scatter plot of them definitely shows a relationship; let's call these variables X2 and X3.
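A low Pearson coefficient alongside a visible scatter-plot pattern is possible because Pearson's r only measures linear association. Below is a minimal sketch on hypothetical simulated data (not my X2/X3; the U-shaped curve is an assumption chosen for illustration) where y depends strongly on x but r is close to zero:

```python
import numpy as np

# Hypothetical simulated data: y depends strongly on x, but through a
# U-shaped (non-monotonic) curve rather than a straight line.
rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=5_000)
y = x**2 + rng.normal(scale=0.05, size=x.size)

# Pearson correlation captures only the linear part of the association.
pearson = np.corrcoef(x, y)[0, 1]

# The squared correlation between x**2 and y equals the R^2 of a simple
# linear regression of y on x**2, i.e. how well the curve explains y.
r_squared_quadratic = np.corrcoef(x**2, y)[0, 1] ** 2

print(f"Pearson r(x, y):  {pearson:.3f}")
print(f"R^2 of y on x**2: {r_squared_quadratic:.3f}")
```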

I built a logistic regression of the form:

Y = X1 + X2 + X3 + X2 * X3

X1 and X2 are significant; X3 and X2 * X3 are not.
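The significance calls here rest on Wald z-values, i.e. each coefficient divided by its standard error from the inverse Fisher information at the maximum-likelihood estimate. A minimal numpy sketch of that computation for the model above follows; the function name `logistic_wald_z` and all the simulated data (including which predictors truly matter) are hypothetical, for illustration only:

```python
import numpy as np

def logistic_wald_z(X, y, n_iter=25):
    """Fit a logistic regression by Newton-Raphson; return (coefs, z-values).

    X must already contain an intercept column. z = coef / SE, with SEs taken
    from the inverse of the Fisher information X' W X at the fitted coefficients.
    """
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        score = X.T @ (y - p)                                # gradient of log-likelihood
        information = X.T @ (X * (p * (1.0 - p))[:, None])   # negative Hessian
        beta = beta + np.linalg.solve(information, score)    # Newton step
    p = 1.0 / (1.0 + np.exp(-X @ beta))
    information = X.T @ (X * (p * (1.0 - p))[:, None])
    se = np.sqrt(np.diag(np.linalg.inv(information)))
    return beta, beta / se

# Hypothetical simulated data: X1 and X2 affect Y, X3 does not (assumption).
rng = np.random.default_rng(1)
n = 2_000
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
x3 = rng.uniform(0.5, 3.0, size=n)
eta = -0.5 + 1.0 * x1 + 0.8 * x2
y = rng.binomial(1, 1.0 / (1.0 + np.exp(-eta)))

# Design matrix for Y = X1 + X2 + X3 + X2 * X3, plus an intercept column.
X = np.column_stack([np.ones(n), x1, x2, x3, x2 * x3])
coefs, z = logistic_wald_z(X, y)
for name, b, zval in zip(["const", "X1", "X2", "X3", "X2*X3"], coefs, z):
    print(f"{name:6s} coef={b: .3f}  z={zval: .2f}")
```

With statsmodels (`import statsmodels.formula.api as smf`), the same model can be written as `smf.logit("Y ~ X1 + X2 + X3 + X2:X3", data=df).fit()`, and `summary()` prints the coefficients with a `z` column; the numbers should agree closely with a hand-rolled Newton-Raphson fit like the one above.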

However, if I log-transform X3, so that the model becomes:

Y = X1 + X2 + log(X3) + X2 * log(X3)
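Assuming Y is binary and the formulas above are shorthand for the linear predictor on the logit scale (the coefficient labels β and γ are introduced here for illustration, and X3 > 0 so the log exists), writing both models out makes explicit what the X2 coefficient measures in each:

$$
\operatorname{logit} P(Y=1) = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3 + \beta_4 X_2 X_3
\quad\Rightarrow\quad
\frac{\partial \operatorname{logit} P(Y=1)}{\partial X_2} = \beta_2 + \beta_4 X_3
$$

$$
\operatorname{logit} P(Y=1) = \gamma_0 + \gamma_1 X_1 + \gamma_2 X_2 + \gamma_3 \log X_3 + \gamma_4 X_2 \log X_3
\quad\Rightarrow\quad
\frac{\partial \operatorname{logit} P(Y=1)}{\partial X_2} = \gamma_2 + \gamma_4 \log X_3
$$

So β2 is the X2 slope at X3 = 0, whereas γ2 is the X2 slope at log X3 = 0, i.e. at X3 = 1. Likewise, β3 is the X3 slope and γ3 the log X3 slope, both at X2 = 0.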

Now X2 is no longer significant, while log(X3) is. In other words, X1 and log(X3) are significant, but X2 and X2 * log(X3) are not.

The only thing I've read is that when variables are highly correlated, their significance can change. But in this case the variables do not seem to be highly correlated. Are there any other explanations for such a change in significance? When a coefficient is significant, its z-value (from statsmodels in Python) is at least 3.5 in absolute value, so it is not 'barely' significant. I've also checked the correlation coefficients after the transformation, and they don't change much: -0.25 becomes -0.18, and 0.1 becomes 0.07.
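The correlations checked above are between the original variables, but the model's design matrix also contains the product column. Below is a minimal sketch of extending the check to every column the model actually uses, on hypothetical simulated data (independent, strictly positive X2 and X3 drawn from uniform(1, 2), an arbitrary choice for illustration). It shows that a product column can be strongly correlated with one of its factors even when the factors themselves are nearly uncorrelated, and how mean-centering before forming the product changes that:

```python
import numpy as np

# Hypothetical simulated predictors: X2 and X3 independent, X3 strictly positive.
rng = np.random.default_rng(2)
n = 10_000
x2 = rng.uniform(1.0, 2.0, size=n)
x3 = rng.uniform(1.0, 2.0, size=n)

# Correlations among the model's columns, including the product term.
raw = np.corrcoef([x2, x3, x2 * x3])
print("corr(X2, X3)         =", round(raw[0, 1], 3))
print("corr(X2, X2*X3)      =", round(raw[0, 2], 3))

# Same check after mean-centering both variables before forming the product.
x2c, x3c = x2 - x2.mean(), x3 - x3.mean()
centered = np.corrcoef([x2c, x3c, x2c * x3c])
print("corr(X2c, X2c*X3c)   =", round(centered[0, 2], 3))

# Same check with log(X3) in place of X3, as in the second model.
logged = np.corrcoef([x2, np.log(x3), x2 * np.log(x3)])
print("corr(X2, X2*log(X3)) =", round(logged[0, 2], 3))
```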