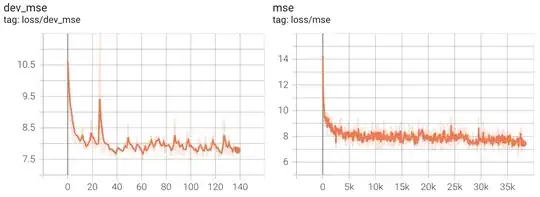

I am training a DNN (CNN + RNN) for a voice conversion task. Although my training loss can get very low, with good performance, I believe the model is overfitting massively. To counter this, I have already added a fair amount of batch norm and dropout inside the model, as well as weight decay, yet the model still overfits heavily. Some of my loss curves are below:

With a weight decay constant of 1e-7:

With a weight decay constant of 1e-2:

I noticed that if the weight decay constant is greater than 1e-4, the model seems to underfit.
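To make the comparison concrete, here is a minimal sketch of the kind of weight decay sweep I mean. It is a toy stand-in, not my actual CNN + RNN: the linear model, the synthetic data, and the names `make_data`, `train`, and `sweep` are all assumptions made up for illustration. It only shows how the train/validation gap can be tabulated per weight decay constant.

```python
import random


def make_data(n, dim, noise, rng):
    """Toy regression data (hypothetical task): y = first feature + Gaussian noise."""
    xs = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(n)]
    ys = [x[0] + rng.gauss(0.0, noise) for x in xs]
    return xs, ys


def mse(w, xs, ys):
    """Mean squared error of the linear model w on (xs, ys)."""
    total = 0.0
    for x, y in zip(xs, ys):
        pred = sum(wi * xi for wi, xi in zip(w, x))
        total += (pred - y) ** 2
    return total / len(xs)


def train(xs, ys, weight_decay, lr=0.05, steps=2000):
    """Full-batch gradient descent on MSE/2 + (weight_decay/2) * ||w||^2."""
    n, dim = len(xs), len(xs[0])
    w = [0.0] * dim
    for _ in range(steps):
        # The L2 penalty contributes weight_decay * w to the gradient.
        grad = [weight_decay * wi for wi in w]
        for x, y in zip(xs, ys):
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j in range(dim):
                grad[j] += err * x[j] / n
        w = [wi - lr * gj for wi, gj in zip(w, grad)]
    return w


def sweep(decays=(1e-7, 1e-4, 1e-2), seed=0):
    """Train once per weight decay value; return {decay: (train_mse, val_mse)}."""
    rng = random.Random(seed)
    # Few training points relative to the feature count, so overfitting is easy.
    train_x, train_y = make_data(10, 8, 0.3, rng)
    val_x, val_y = make_data(200, 8, 0.3, rng)
    results = {}
    for wd in decays:
        w = train(train_x, train_y, wd)
        results[wd] = (mse(w, train_x, train_y), mse(w, val_x, val_y))
    return results


if __name__ == "__main__":
    for wd, (tr, va) in sweep().items():
        print(f"weight_decay={wd:g}  train={tr:.4f}  val={va:.4f}  gap={va - tr:.4f}")
```

A gap that stays large across the whole sweep would suggest that weight decay alone is not the limiting factor, which is roughly what I seem to be seeing with the real model.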


I want to know what else I can do to improve this model's generalization. Is it just a matter of more data, or do I need to modify my DNN architecture in some way? I have been struggling with this overfitting problem for some days now, and any insight would be a help.