I have a real-world problem with severely imbalanced classes. I was able to get a good AUC and balanced accuracy after applying a resampling technique. Now I want to "walk along the ROC curve" and improve precision, even if this costs me some recall. For practical reasons, I want to be more certain about the labels I assign to the class of interest (the rare event).

Usually, I would just raise the cut-off an observation's predicted probability must exceed to be assigned to the class of interest. Since classification models typically default to a cut-off of 0.5, I would use, for example, 0.75.
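To make concrete what I mean by raising the cut-off, here is a minimal sketch on made-up scores. The helper names `predict_with_cutoff` and `precision_recall` and the toy arrays are hypothetical and only for illustration; they are not part of my real pipeline.

```python
import numpy as np

def predict_with_cutoff(proba_pos, cutoff=0.5):
    """Label an observation 1 when its positive-class probability exceeds the cut-off."""
    return (np.asarray(proba_pos) > cutoff).astype(int)

def precision_recall(y_true, y_hat):
    """Return (precision, recall) for the positive class, using 0.0 when undefined."""
    y_true = np.asarray(y_true)
    y_hat = np.asarray(y_hat)
    tp = int(((y_hat == 1) & (y_true == 1)).sum())
    fp = int(((y_hat == 1) & (y_true == 0)).sum())
    fn = int(((y_hat == 0) & (y_true == 1)).sum())
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

# Made-up labels and positive-class probabilities (assumption, illustration only)
y_true = np.array([0, 0, 0, 0, 1, 0, 1, 1])
proba = np.array([0.10, 0.20, 0.40, 0.55, 0.60, 0.70, 0.80, 0.90])

for cutoff in (0.5, 0.75):
    p, r = precision_recall(y_true, predict_with_cutoff(proba, cutoff))
    print(f"cutoff={cutoff}: precision={p:.2f}, recall={r:.2f}")
```

On these toy numbers the higher cut-off removes the false positives at the price of one missed positive, which is the trade-off I am after.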

However, I noticed that the model I am using does not seem to apply a 0.5 cut-off, and I am now unsure whether I can simply generate new predictions from a different cut-off. The following is a reproducible example:

    import numpy as np
    import pandas as pd
    from imblearn.combine import SMOTETomek
    from imblearn.pipeline import Pipeline
    from imblearn.under_sampling import TomekLinks
    from sklearn.datasets import make_classification
    from sklearn.model_selection import train_test_split
    from sklearn.svm import SVC

    # Imbalanced toy data: about 85% of the observations fall in class 0
    X, y = make_classification(n_samples=1000, n_classes=2, weights=[0.85], flip_y=0, random_state=1)
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=101)

    # Resample the training data, then fit an SVC with probability estimates enabled
    steps = [('over', SMOTETomek(tomek=TomekLinks(sampling_strategy='majority'))),
             ('model', SVC(probability=True))]
    pipeline = Pipeline(steps=steps)
    pipeline.fit(X_train, y_train)

    y_pred = pipeline.predict(X_test)
    y_scores = pipeline.predict_proba(X_test)

    # Labels I would expect from a 0.5 cut-off on the positive-class probability
    myprediction = np.where(y_scores[:, 1] > 0.5, 1, 0)

    # 'compare' is False wherever predict() disagrees with my 0.5 cut-off
    df = pd.DataFrame({'y_pred': y_pred, 'myprediction': myprediction, 'prob': y_scores[:, 1],
                       'compare': y_pred == myprediction})

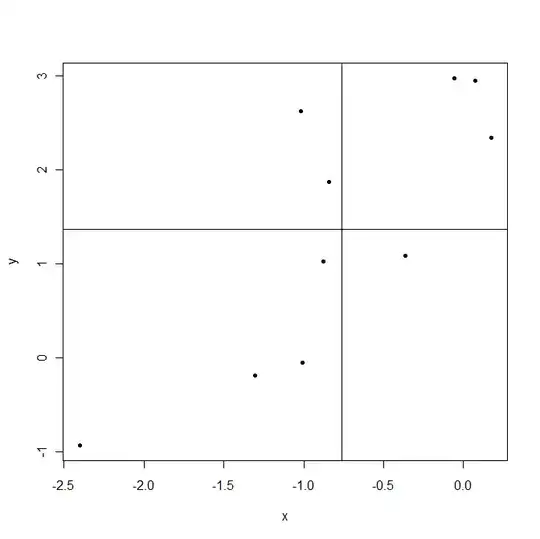

    df[~df.compare]

In other words, why are there observations with a predicted probability of 0.33 being classified as belonging to the class of interest? Do I need to adjust the predicted probabilities after resampling? If so, how can I do that?
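One diagnostic I could run to narrow this down, sketched here without the resampling step to keep it short: compare `predict` against both the sign of `decision_function` and a 0.5 cut-off on `predict_proba`. This rests on my understanding (an assumption on my part, based on the scikit-learn documentation) that a binary `SVC` predicts from the sign of the decision function, while `predict_proba` comes from a separate calibration step and may therefore disagree with `predict`. The variable names and `random_state=0` are my own choices.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Same toy data as in the example above
X, y = make_classification(n_samples=1000, n_classes=2, weights=[0.85], flip_y=0, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=101)

# Plain SVC, no resampling (assumption: the mismatch does not require resampling)
model = SVC(probability=True, random_state=0).fit(X_train, y_train)

y_pred = model.predict(X_test)

# Labels derived from the sign of the SVM decision function
from_margin = (model.decision_function(X_test) > 0).astype(int)

# Labels derived from a 0.5 cut-off on the calibrated probability
from_proba = (model.predict_proba(X_test)[:, 1] > 0.5).astype(int)

agrees_with_margin = bool(np.array_equal(y_pred, from_margin))
n_proba_mismatch = int((y_pred != from_proba).sum())

print("predict() matches sign of decision_function:", agrees_with_margin)
print("rows where predict() differs from the 0.5 probability cut-off:", n_proba_mismatch)
```

If the first line prints `True` while the second count is non-zero, the mismatch would come from how the probabilities are estimated rather than from the resampling, and thresholding `decision_function` might be the more consistent way to trade recall for precision.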