What you are missing is information about a study's design. Independence is somehing that comes from the study design - it is NOT implied by homoscedasticity.

To give you a simple example, imagine that you are measuring temperature (degrees Celsius) at your local airport using a sensor, with the goal being to see how temperature changes over time. If you measure temperature every day (once per day, say at noon), then you can expect the resulting daily values of temperature to be correlated with each other if they come from days which are close to each other. If you aggregated the daily temperature values collected within each year to get a yearly temperature value, then it is possible that the yearly temperature values may no longer be correlated over time (because the temporal distance of one year between consecutive values is large enough).

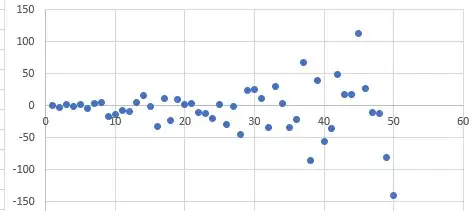

Staying with this example of measuring a response variable (e.g., temperature) over time (e.g., every year) and trying to regress that variable against time, you can expect to encounter all possible combinations of situations, depending on what variable you are measuring and how frequent your measurements are:

(1) independent errors and homoscedasticity;

(2) independent errors and heteroscedasticity;

(3) temporally dependent errors and homoscedasticity;

(4) temporally dependent errors and heteroscedasticity.

This fact alone hints that you are wrong to assume that independence implies homoscedasticity; if that were the case, we would never encounter situation (2) in practice. However, the statistical literature is full of examples to the contrary!

@whuber already hinted that you are confusing two distinct concepts: dependence of errors and variance of errors.

In the context of the temperature versus time example, the variance of errors simply quantifies the amount of spread you can expect to encounter in your temperature values about the underlying temporal trend in these values. Under the assumption of homoscedasticity, the amount of spread is unaffected by the passage of time (i.e., it remains constant over time). However, under the assumption of heteroscedasticity, the amount of spread is affected by the passage of time - for example, the amount of spread can increase over time. Spread is about how far you can expect an observed temperature value to be at time $t$ relative to what is 'typical' for that time $t$.

The dependence of errors looks at something different altogether: if you know something about the value of temperature at the current time $t$, does that tell you anything about the value of temperature at time $t + 1$? If the temperature values are independent from each other, knowing that temperature is high today should have no bearing on what the temperature value will be tomorrow. If the temperature values are NOT independent from each other (e.g., they are positively correlated), knowing that temperature is high today will tell you that the temperature value will also be high tomorrow. How high it will be will depend on the strength of the correlation.