Throughout this post, I assume at least second moments exist. Consider a heterogeneous linear treatment effect model of the form:

$$Y_i = \alpha_i + \beta_i X_i$$

where $\alpha_i, \beta_i$ are potentially arbitrarily heterogenous (in other words, we make no restrictions on heterogeneity in the potential outcomes function, but we do restrict it to be linear). Suppose additionally, that $X_i$ is exogenous/comes from an experiment in the sense that $$X_i \perp \alpha_i, \beta_i$$

Then standard arguments show that $\mathbb E[\alpha_i], \mathbb E[\beta_i]$ are identified by the (population) OLS with $Y_i$ as the outcome and $X_i$ as the covariate. Interestingly enough, we also have that $\mathbb C\mathrm{ov}(\alpha_i, \beta_i)$ is identified under the additional assumption that $X_i$ is symmetrically distributed around its mean. To see why, note that if we define $\tilde X_i = X_i - \mathbb E[X_i]$, we have

$$Y_i^2 \tilde X_i = \alpha_i^2 \tilde X_i + 2\alpha_i \beta_i \tilde X_i^2 + \beta_i^2 X_i^2 \tilde X_i$$

Noting that the first and third term vanish in expectation due to exogeneity and the symmetric distribution of $X_i$, only the third term is left. In particular, we can show that

$$\mathbb C\mathrm{ov}(\alpha_i, \beta_i) = \frac{\mathbb E\left[Y_i^2 \tilde X_i\right]}{2\mathbb E\left[\tilde X_i^2\right]} - \mathbb E[\alpha_i]\mathbb E[\beta_i]$$

where everything above is either directly observed or identified (in particular, the first term on the RHS is half the (population) OLS slope of the regression of $Y_i^2$ on $X_i$). Consider now, the case where I have two outcomes with their own linear-in-treatment potential outcomes:

$$Y_{i,1} = \alpha_{i,1} + \beta_{i,1} X_i$$ $$Y_{i,2} = \alpha_{i,2} + \beta_{i,2} X_i$$

Again, I am assuming that $X_i$ is exogenous in the sense that $X_i \perp \left\{\alpha_{i,j}, \beta_{i,j}\right\}_{j=1}^2$. My question is therefore the following. Can we identify $\mathbb C\mathrm{ov}\left(\alpha_{i,1}, \beta_{i,2}\right)$ in this model without making additional restrictions? The obvious generalization of the above argument does not seem to work here because

$$Y_{i,1} Y_{i,2} \tilde X_i = \alpha_{i,1}\alpha_{i,2} \tilde X_i + \alpha_{i,1}\beta_{i,2} \tilde X_i^2 + \alpha_{i,2}\beta_{i,1} \tilde X_i^2 + \beta_{i,1}\beta_{i,2} X_i^2 \tilde X_i$$

which does not allow us to separate out $\mathbb E\left[\alpha_{i,1}\beta_{i,2} \right]$ and $\mathbb E\left[\alpha_{i,2}\beta_{i,1} \right]$. It seems also that going to higher cross moments involving $Y_{i,1}, Y_{i,2}$ is unlikely to help either, as we end up introducing even more terms that cannot be separately identified. I am wondering if anybody has formally shown that what I am conjecturing here is true.

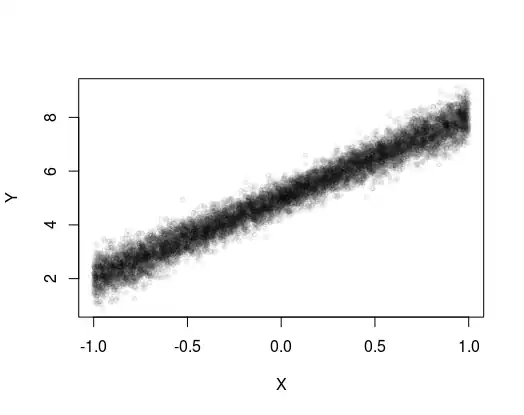

Edit: By the suggestion in the comments, here is some R code simulating the DGP I have in mind.

set.seed(12351)

# Set up standard normal variables

norm1 <- rnorm(100000)

norm2 <- rnorm(100000)

# Set up covariance matrix between alphas and betas

# Cov(alpha, beta) = 0.05, Var(alphas) = Var(betas) = 0.1

VCV <- matrix(c(0.1, 0.02, 0.02, 0.1), nrow = 2, ncol = 2)

# Draw individual alphas, betas from population where

# E[alphas] = 5, E[betas] = 3, and VCV(alpha, beta) = VCV as above

alphas <- 5 + sapply(1:100000, function(i) (chol(VCV) %*% c(norm1[i], norm2[i]))[1])

betas <- 3 + sapply(1:100000, function(i) (chol(VCV) %*% c(norm1[i], norm2[i]))[2])

# Independently sample Xs

Xs <- 2 * (rbinom(100000, 1, 0.5) - 0.5)

# Define Ys according to individual parameters (alpha, beta) and treatment (X):

Ys <- alphas + betas * Xs

# Run estimators corresponding to the moment equalities from the question

lmod <- lm(Ys ~ Xs)

lmod2 <- lm(Ys^2 ~ Xs)

# Moments for underlying parameters

mean(alphas)

# [1] 4.999713

mean(betas)

# [1] 3.000854

cov(alphas,betas)

# [1] 0.01947037

# As expected, intercept is approximately E[alpha], intercept is

# approximately E[beta], and half the OLS slope of

# Ys^2 ~ Xs minus slope times intercept from Ys ~ X is roughly

# Cov(alphas, betas)

summary(lmod)

# Call:

# lm(formula = Ys ~ Xs)

#

# Residuals:

# Min 1Q Median 3Q Max

# -2.19008 -0.29728 0.00006 0.29893 2.65507

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 4.998635 0.001414 3536 <2e-16 ***

# Xs 3.001279 0.001414 2123 <2e-16 ***

# ---

# Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

#

# Residual standard error: 0.447 on 99998 degrees of freedom

# Multiple R-squared: 0.9783, Adjusted R-squared: 0.9783

# F-statistic: 4.507e+06 on 1 and 99998 DF, p-value: < 2.2e-16

as.numeric(lmod2$coefficients[2] / 2 - lmod$coefficients[1] * lmod$coefficients[2])

# [1] 0.01906454

```