This is my second data science project, and I am looking for insights from people more experienced than me. I am completely self-taught and new to this, and I would appreciate any kind of feedback.

My first project was about predicting which artist had painted a painting. This new project is different: it uses tabular data, whereas the first used images.

I am trying to predict the price at fine art auctions.

I am classifying the price as unsold, below the auction house price range, in the range, or above the range.
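To make the target concrete, here is a minimal sketch of the four-way labelling. The function name, the integer class ids, and the convention that an unsold item has a price of `None` are assumptions for illustration; the scraped data may encode these differently.

```python
from typing import Optional

# Hypothetical class ids; the post does not fix which integer maps to which class.
UNSOLD, BELOW, IN_RANGE, ABOVE = 0, 1, 2, 3


def price_class(price: Optional[float], low: float, high: float) -> int:
    """Map a price to one of four classes relative to the estimate [low, high]."""
    if price is None:      # assumption: unsold items have no price
        return UNSOLD
    if price < low:
        return BELOW
    if price > high:
        return ABOVE
    return IN_RANGE        # low <= price <= high


# Estimate range 1000-1500 with a few example prices.
labels = [price_class(p, 1000, 1500) for p in (None, 800, 1000, 1200, 1500, 2000)]
print(labels)
```

Integer labels like these are what a sparse categorical cross-entropy loss expects as targets.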

I have scraped the data from Swedish auction houses.

The data is made up of:

- The price

- The title of the art work

- A description of the art work

- The name of the artist

- The auction house estimated price range

- Artist's country

- Artist's lifetime

- The provenance (i.e. information about who has owned the painting earlier and similar details)

- Exhibition information

After cleaning the data and keeping only those records where `artist.value_counts() >= 15`, i.e. the artist has had at least 15 items at auction, I end up with about 12,000 items sold in total.

Investigating the data, these are the features I find:
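The frequency filter can be sketched with pandas as follows. The column name `artist`, the helper name, and the toy data are assumptions for illustration only.

```python
import pandas as pd


def keep_frequent_artists(df: pd.DataFrame, min_items: int = 15) -> pd.DataFrame:
    """Keep only rows whose artist appears at least `min_items` times."""
    counts = df["artist"].value_counts()
    frequent = counts[counts >= min_items].index
    return df[df["artist"].isin(frequent)].reset_index(drop=True)


# Toy data: artist A has 15 items, artist B only 3.
toy = pd.DataFrame({"artist": ["A"] * 15 + ["B"] * 3, "price": range(18)})
filtered = keep_frequent_artists(toy)
print(filtered["artist"].value_counts())
```

Note that a filter like this is computed on the whole dataset, so it should run before the train/validation split.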

String categorical features

- artist name

- artist country

- the currency of the sold item

Integer categorical features

- exh (Exhibited or not)

- prov (Provenance or not)

- desc (Description or not)

- tit (Title or not)

- signed (Whether the painting is signed or not)

- dated (Whether the painting is dated)

- dyear (If it's dated, what year)

- w_in_title (If the title contains words like summer, winter, girl or stockholm)

- medium (Whether it's an oil painting, drawing, gouache, etching, lithograph, etc.)
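As an illustration of the `w_in_title` flag from the list above, here is a rough sketch of a keyword check. The keyword set contains only the four example words mentioned in the post, and the whole-word matching rule is an assumption about how the flag is built.

```python
import re

# Example keywords named in the post; the actual list may differ.
KEYWORDS = {"summer", "winter", "girl", "stockholm"}


def w_in_title(title: str) -> int:
    """Return 1 if the title contains any keyword as a whole word, else 0."""
    words = set(re.findall(r"\w+", title.lower()))
    return int(bool(words & KEYWORDS))


flags = [w_in_title(t) for t in ("Winter in Stockholm", "Still life with apples", "")]
print(flags)
```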

Numerical features

- birth year

- death year

- low (The low end of the auction house estimated price)

- high (The high end of the auction house estimated price)

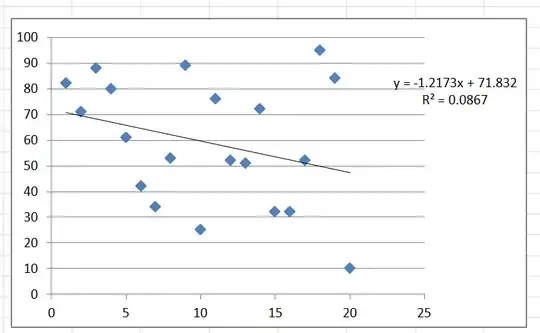

Correlation matrix:

Are the correlations with price too low?

Then, on the numerical features, I apply a power transformation to make the data more Gaussian-like, then a quantile transformation to spread out the most frequent values and reduce the impact of outliers, and finally I remove the mean and scale to unit variance.
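A minimal sketch of this three-step chain, assuming scikit-learn is used for it (the post does not say which library performs these steps). The synthetic log-normal data and all variable names are stand-ins for illustration.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PowerTransformer, QuantileTransformer, StandardScaler

rng = np.random.default_rng(0)
# Synthetic right-skewed stand-in for numerical columns such as low and high.
X_train = rng.lognormal(mean=0.0, sigma=1.0, size=(500, 2))
X_val = rng.lognormal(mean=0.0, sigma=1.0, size=(100, 2))

numeric_pipeline = make_pipeline(
    PowerTransformer(method="yeo-johnson"),                # more Gaussian-like
    QuantileTransformer(n_quantiles=100, random_state=0),  # spread out frequent values
    StandardScaler(),                                      # zero mean, unit variance
)

# Fit on the training rows only, then reuse the fitted statistics for validation.
X_train_t = numeric_pipeline.fit_transform(X_train)
X_val_t = numeric_pipeline.transform(X_val)

print(X_train_t.mean(axis=0), X_train_t.std(axis=0))
```

Because the quantile transformation is essentially rank-based, the power transformation before it probably has little effect on the final values, and the Keras `Normalization` layer applied later standardizes the same columns again.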

Then I apply some TensorFlow preprocessing layers:

    import tensorflow as tf
    from tensorflow.keras.layers.experimental.preprocessing import (
        CategoryEncoding,
        Normalization,
        StringLookup,
    )

    def encode_numerical_feature(feature, name, dataset):
        # Create a Normalization layer for our feature
        normalizer = Normalization()
        # Prepare a Dataset that only yields our feature
        feature_ds = dataset.map(lambda x, y: x[name])
        feature_ds = feature_ds.map(lambda x: tf.expand_dims(x, -1))
        # Learn the statistics of the data
        normalizer.adapt(feature_ds)
        # Normalize the input feature
        encoded_feature = normalizer(feature)
        return encoded_feature

    def encode_string_categorical_feature(feature, name, dataset):
        # Create a StringLookup layer which will turn strings into integer indices
        index = StringLookup()
        # Prepare a Dataset that only yields our feature
        feature_ds = dataset.map(lambda x, y: x[name])
        feature_ds = feature_ds.map(lambda x: tf.expand_dims(x, -1))
        # Learn the set of possible string values and assign them a fixed integer index
        index.adapt(feature_ds)
        # Turn the string input into integer indices
        encoded_feature = index(feature)
        # Create a CategoryEncoding for our integer indices
        encoder = CategoryEncoding(output_mode="binary")
        # Prepare a dataset of indices
        feature_ds = feature_ds.map(index)
        # Learn the space of possible indices
        encoder.adapt(feature_ds)
        # Apply one-hot encoding to our indices
        encoded_feature = encoder(encoded_feature)
        return encoded_feature

    def encode_integer_categorical_feature(feature, name, dataset):
        # Create a CategoryEncoding for our integer indices
        encoder = CategoryEncoding(output_mode="binary")
        # Prepare a Dataset that only yields our feature
        feature_ds = dataset.map(lambda x, y: x[name])
        feature_ds = feature_ds.map(lambda x: tf.expand_dims(x, -1))
        # Learn the space of possible indices
        encoder.adapt(feature_ds)
        # Apply one-hot encoding to our indices
        encoded_feature = encoder(feature)
        return encoded_feature

Then I build a Keras model, after some trial and error, and run Keras Tuner to tune the hyperparameters.

    hp = HyperParameters()

    def model_builder(hp):
        # Block 1: fixed-width dense layer -> PReLU -> batch norm -> tuned dropout
        x = Dense(units=256,
                  kernel_regularizer=keras.regularizers.L2(l2=0.01),
                  kernel_initializer=keras.initializers.HeNormal(),
                  kernel_constraint=keras.constraints.UnitNorm(axis=0),
                  name="Dense1")(all_features)
        x = PReLU()(x)
        x = BatchNormalization()(x)
        x = Dropout(rate=hp.Float('dropout1', min_value=0.0, max_value=0.2,
                                  default=0, step=0.02))(x)

        # Blocks 2-5: same pattern, with the layer width tuned as well.
        # Note: the lower bound of 0 lets the tuner propose a layer with zero units.
        x = Dense(units=hp.Int('units2', 0, 128, step=16),
                  kernel_regularizer=keras.regularizers.L2(l2=0.01),
                  kernel_initializer=keras.initializers.HeNormal(),
                  kernel_constraint=keras.constraints.UnitNorm(axis=0),
                  name="Dense2")(x)
        x = PReLU()(x)
        x = BatchNormalization()(x)
        x = Dropout(rate=hp.Float('dropout2', min_value=0.0, max_value=0.2,
                                  default=0, step=0.02))(x)

        x = Dense(units=hp.Int('units3', 0, 128, step=16),
                  kernel_regularizer=keras.regularizers.L2(l2=0.01),
                  kernel_initializer=keras.initializers.HeNormal(),
                  kernel_constraint=keras.constraints.UnitNorm(axis=0),
                  name="Dense3")(x)
        x = PReLU()(x)
        x = BatchNormalization()(x)
        x = Dropout(rate=hp.Float('dropout3', min_value=0.0, max_value=0.2,
                                  default=0, step=0.02))(x)

        x = Dense(units=hp.Int('units4', 0, 64, step=8),
                  kernel_regularizer=keras.regularizers.L2(l2=0.01),
                  kernel_initializer=keras.initializers.HeNormal(),
                  kernel_constraint=keras.constraints.UnitNorm(axis=0),
                  name="Dense4")(x)
        x = PReLU()(x)
        x = BatchNormalization()(x)
        x = Dropout(rate=hp.Float('dropout4', min_value=0.0, max_value=0.2,
                                  default=0, step=0.02))(x)

        x = Dense(units=hp.Int('units5', 0, 32, step=4),
                  kernel_regularizer=keras.regularizers.L2(l2=0.01),
                  kernel_initializer=keras.initializers.HeNormal(),
                  kernel_constraint=keras.constraints.UnitNorm(axis=0),
                  name="Dense5")(x)
        x = PReLU()(x)
        x = BatchNormalization()(x)
        x = Dropout(rate=hp.Float('dropout5', min_value=0.0, max_value=0.2,
                                  default=0, step=0.02))(x)

        # Four output classes: unsold, below, in range, above
        output = Dense(4, activation="softmax", name="Outputlayer")(x)
        model = keras.Model(all_inputs, output)
        model.compile(
            optimizer=keras.optimizers.Adam(
                hp.Choice('learning_rate', values=[1e-1, 1e-2, 1e-3])),
            loss=tf.keras.losses.sparse_categorical_crossentropy,
            metrics=['accuracy'])
        return model

A view of the model:

    checkpoint = ModelCheckpoint('./checkpoints9/best_weights.tf', monitor='val_accuracy',
                                 verbose=1, save_best_only=True, mode='auto')
    reduce_lr = ReduceLROnPlateau(monitor='val_loss', factor=0.5, patience=10,
                                  verbose=1, min_delta=1e-4, mode='min')
    earlyStopping = EarlyStopping(monitor='val_loss', patience=30, verbose=0, mode='min')

    tuner = BayesianOptimization(
        model_builder,
        max_trials=200,
        executions_per_trial=2,
        hyperparameters=hp,
        tune_new_entries=True,
        allow_new_entries=True,
        beta=4,
        seed=323,
        directory="/content/drive/MyDrive/output",
        project_name="Auction",
        objective='val_accuracy')

    tuner.search(train_ds,
                 validation_data=val_ds,
                 verbose=1,
                 epochs=100,
                 callbacks=[checkpoint, reduce_lr, earlyStopping])

Training one of the "best" models.

    Epoch 1/300
    244/244 [==============================] - 7s 14ms/step - loss: 6.3068 - accuracy: 0.3475 - val_loss: 6.1117 - val_accuracy: 0.3175

It improves slightly:

    Epoch 35/300
    244/244 [==============================] - 3s 11ms/step - loss: 6.0440 - accuracy: 0.4093 - val_loss: 6.0420 - val_accuracy: 0.4054

    Epoch 300/300
    244/244 [==============================] - 3s 11ms/step - loss: 5.9001 - accuracy: 0.5058 - val_loss: 6.0521 - val_accuracy: 0.4117
    Epoch 00300: val_accuracy did not improve from 0.42556

Confusion matrix:

So obviously it is awful.
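A validation accuracy of about 0.41 is easier to judge against a baseline, such as always predicting the most common class. The sketch below shows one way to compute that; the function name is hypothetical and the labels are toy values, not the real class distribution of this dataset.

```python
from collections import Counter
from typing import Sequence


def majority_baseline_accuracy(train_labels: Sequence[int],
                               val_labels: Sequence[int]) -> float:
    """Accuracy obtained by always predicting the most common training class."""
    majority_class, _ = Counter(train_labels).most_common(1)[0]
    hits = sum(1 for y in val_labels if y == majority_class)
    return hits / len(val_labels)


# Toy labels for the four classes (0..3), for illustration only.
train = [0, 0, 0, 1, 2, 2, 3, 0]
val = [0, 2, 0, 1, 3]
baseline = majority_baseline_accuracy(train, val)
print(baseline)
```

If the model's validation accuracy is not clearly above this number on the real labels, the network has learned little beyond the class frequencies.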

Any ideas on what I can improve?

I am new to this, so I am interested in any comment, critique or suggestion you may have, however small.

(If you want me to add anything to the above, let me know.)