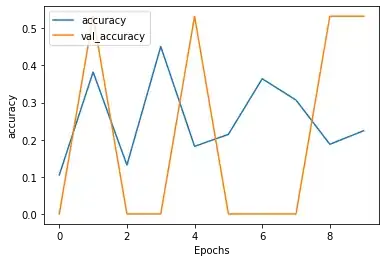

I took text input, converted it to sequences of values, and fed it to an LSTM model, but the loss is not reducing and the accuracy behaves abnormally.

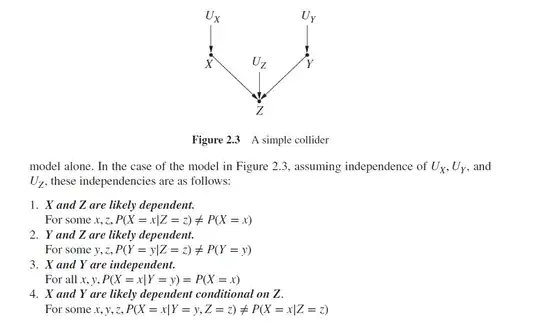

The above image shows the training and validation accuracy. I trained for 10 epochs here, but the graph looks similar even with 100 or 1000 epochs.

The above image shows the training and validation loss. It does not change at all; the loss never reduces.

How can I make my model learn?

The learning rate I am using is 0.05.
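As a point of reference for the learning rate, a step size that is too large for the loss surface can leave the loss flat instead of reducing it. The toy sketch below is not the LSTM model from this question. It is plain gradient descent on a made-up one-dimensional quadratic, and the helper `run_gradient_descent` and the `curvature` value are hypothetical, chosen only so that a step size of 0.05 is too large for this particular toy loss. Whether the same effect explains the plots above is an assumption that would need checking, for example by retraining with a smaller learning rate.

```python
def run_gradient_descent(learning_rate, curvature=20.0, start=1.0, steps=10):
    """Minimise the toy loss(x) = curvature * x**2 with plain gradient descent.

    Returns the loss before each update, followed by the final loss.
    All values here are illustrative and unrelated to the real model.
    """
    x = start
    losses = []
    for _ in range(steps):
        losses.append(curvature * x * x)
        gradient = 2.0 * curvature * x      # d/dx of curvature * x**2
        x = x - learning_rate * gradient    # standard gradient descent update
    losses.append(curvature * x * x)
    return losses


# On this toy loss, a step of 0.05 makes x jump from 1 to -1 and back,
# so the recorded loss never moves.
flat = run_gradient_descent(learning_rate=0.05)

# A ten times smaller step shrinks x by a constant factor on every update.
falling = run_gradient_descent(learning_rate=0.005)

print("lr=0.05 :", [round(loss, 4) for loss in flat])
print("lr=0.005:", [round(loss, 4) for loss in falling])
```

In this sketch the larger step produces a constant loss curve while the smaller step produces a steadily decreasing one, which is the kind of difference to look for when comparing training runs at different learning rates.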

The model architecture is:

    model = tf.keras.Sequential([
        tf.keras.layers.Embedding(vocab_size, embedding_dim),
        tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(embedding_dim, dropout=0.3)),
        tf.keras.layers.Dense(embedding_dim, activation='relu'),
        tf.keras.layers.Dense(2, activation='softmax'),
    ])