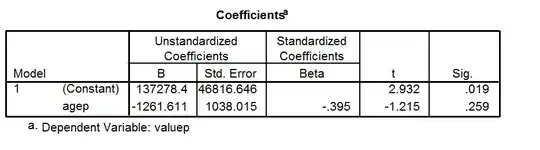

I am very new to statistical learning (I'm a graduate student in experimental biology with very little exposure to math or statistics). I'm working my way through *An Introduction to Statistical Learning* to build an intuitive understanding of machine learning, while slowly breaking down each equation in *The Elements of Statistical Learning*.

I'm currently working through equation (2.2), where the prediction $\hat{Y}$ is given by $X^T\hat{\beta}$, the inner product of the input vector $X$ and the estimated coefficient vector $\hat{\beta}$:

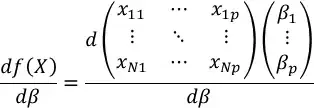
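For reference, here is a sketch of the dimensions involved. It assumes the ESL convention that $X$ is a single input (a column vector; $p$ here denotes its length after any constant 1 for the intercept has been absorbed) and that bold $\mathbf{X}$ is the $N \times p$ matrix of training inputs:

$$
\underbrace{X^T}_{1 \times p}\,\underbrace{\hat{\beta}}_{p \times 1} = \sum_{j=1}^{p} X_j \hat{\beta}_j \quad (\text{a scalar}),
\qquad
\underbrace{\mathbf{X}}_{N \times p}\,\underbrace{\hat{\beta}}_{p \times 1} = \underbrace{\hat{\mathbf{y}}}_{N \times 1} \quad (\text{one prediction per row}).
$$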

I am attempting to prove that the gradient of this function with respect to $X$ is equal to:

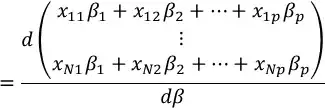
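Writing the inner product out component by component gives a short derivation. This assumes $X = (X_1, \dots, X_p)^T$ is a single input vector and $\beta$ is held fixed:

$$
f(X) = X^T\beta = \sum_{j=1}^{p} X_j \beta_j,
\qquad
\frac{\partial f}{\partial X_k} = \sum_{j=1}^{p} \beta_j \frac{\partial X_j}{\partial X_k} = \beta_k,
\qquad
\nabla f(X) = \begin{pmatrix} \beta_1 \\ \vdots \\ \beta_p \end{pmatrix} = \beta .
$$

Only the $j = k$ term survives, because $\partial X_j / \partial X_k$ is 1 when $j = k$ and 0 otherwise. As a result, the gradient does not depend on $X$ at all.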

Since I'm still learning linear algebra, I decided to compute the product of the transposed matrix and the coefficient vector explicitly:

I ended up with an $N \times p$ matrix in which each row is the vector $\beta$. I realize that my fundamentals are very weak, and I am taking linear algebra and multivariable calculus courses, but I would appreciate any help with this calculation!
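A small numerical check, sketched in plain Python, may help separate the two cases. It assumes that $X$ in (2.2) is a single length-$p$ vector. The helpers `predict`, `gradient_fd`, and `predict_all`, and the values of `beta`, `x`, and `X_rows`, are hypothetical and chosen only for illustration. The code approximates the gradient with central finite differences instead of deriving it symbolically.

```python
def predict(x, beta):
    """f(x) = x^T beta for a single input vector x; the result is a scalar."""
    return sum(xj * bj for xj, bj in zip(x, beta))


def gradient_fd(f, x, h=1e-6):
    """Approximate the gradient of f at x with central finite differences."""
    grad = []
    for k in range(len(x)):
        x_plus = list(x)
        x_plus[k] += h
        x_minus = list(x)
        x_minus[k] -= h
        grad.append((f(x_plus) - f(x_minus)) / (2 * h))
    return grad


def predict_all(X_rows, beta):
    """Apply beta to each row of an N x p data matrix: N predictions in total."""
    return [predict(row, beta) for row in X_rows]


beta = [2.0, -1.0, 0.5]  # illustrative coefficients (p = 3)
x = [1.0, 4.0, 3.0]      # one illustrative input vector

y_hat = predict(x, beta)  # 2*1 + (-1)*4 + 0.5*3 = -0.5
grad = gradient_fd(lambda v: predict(v, beta), x)
print(y_hat)
print([round(g, 6) for g in grad])

X_rows = [[1.0, 4.0, 3.0], [0.0, 1.0, 2.0]]  # illustrative data matrix, N = 2
print(predict_all(X_rows, beta))
```

The finite-difference gradient should match `beta` up to rounding error. Applying the same coefficients to an $N \times p$ matrix should give $N$ predictions (one per row), not an $N \times p$ matrix.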