This answer assumes that you have independent observations. If this is not the case (i.e. if you have repeated measurements, clustered data etc.) a multiple linear regression model is not appropriate since it does not take this dependence into account.

What is the need/usefulness to have age squared in the regression?

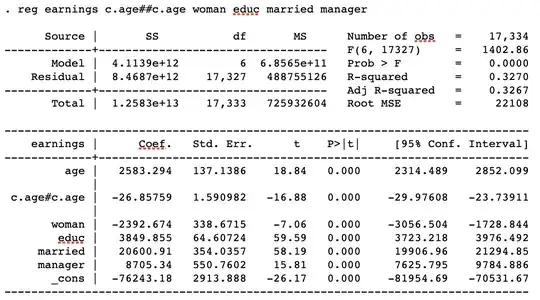

You have fitted the following multiple linear regression model:

$$\text{Earnings}_{i}=\beta_{0} + \beta_{1}\text{age}_i + \beta_{2}\text{age}{_i}^{2} + \beta_{3}\text{edu}_{i} + \beta_{4}\text{sex}_{i} + \beta_{5}\text{married}_{i} + \beta_{6}\text{manager}_{i} + \epsilon_{i},$$

where $\epsilon_{i} \sim \text{N}(0, \sigma^2)$.

Hence you assume that there is a nonlinear relationship between earnings and age. In fact, holdning all other explanatory variables constant, you assume that this relationship follows the graph of a second degree polynomial (i.e. a parabola). Since the coefficient of the $\text{age}_{i}^{2}$ is negative, $\beta_{2}=-0.006$, the earnings will increase with age until a given age (the top point or vertex of the parabola), and decrease here after. If, however, you believe that the relationship is linear you could leave out the $\text{age}_{i}^{2}$-term of the model. This would mean that you assume that the difference in earnings between two persons is proportional to their age difference.

Also, is this the easiest/correct way to calculate the diff in earnings from age=35 to age=36?

The short answer is yes. You have calculated the education, sex, marriage and managerial postion adjusted difference between two persons aged 35 and 36 respectively. That is you have calculated the difference in earnings between two persons, A and B, who has the same years of schooling, the same sex, the same marital status and the same managerial postion but person A is 36 years old and person B is 35 years old. If, instead, you were interested in the difference between a man aged 36 and a woman aged 35 you should also take the coefficient of $\text{sex}$ into account.

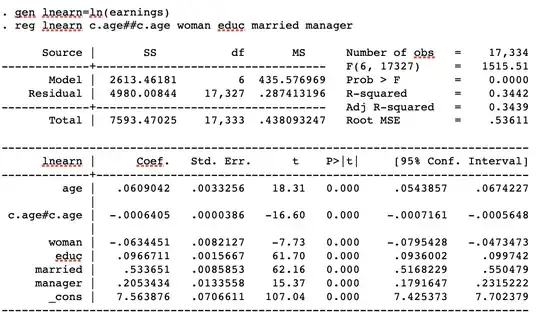

R squared is higher for the second regression and I'm struggling to understand why?

$R^2$ depends on, among other things, the residual standard deviation $\sigma$, which is a measure of how far your observations are from the fitted values of the regression model on average. The smaller $\sigma$ is, the higher $R^2$ becomes. Hence, if using $\ln(\text{earnings})$ as the dependendent variable makes $\sigma$ smaller (as compared to the when you use $\text{earnings}$ as the dependent variable), this will make $R^2$ bigger.