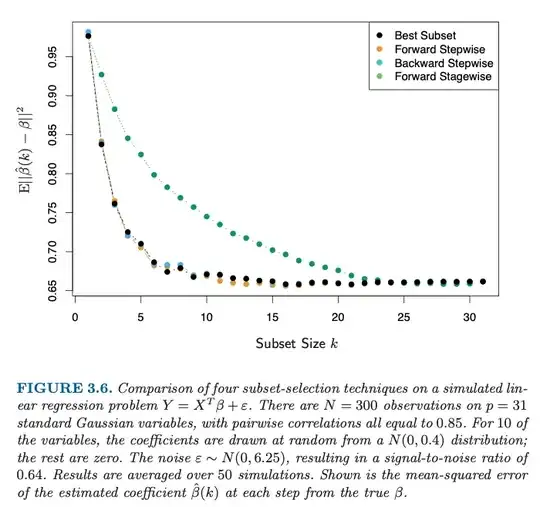

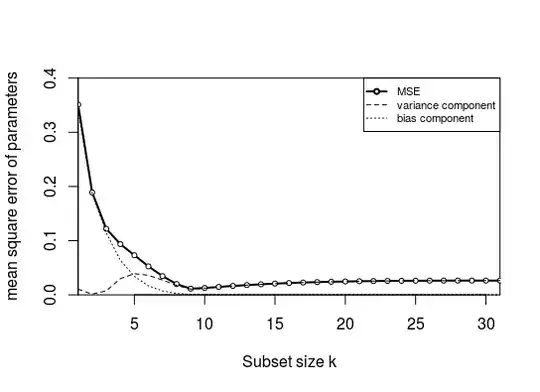

adding more variables to a linear model doesn't imply better estimates of the true parameters

This is not just estimating variables, but also variable selection. When you only subselect <10 variables, then you are inevitably gonna make an error.

That is why the error decreases when you are choosing a larger size for the subset. Because more coefficients, which are likely coefficients from the true model, are being estimated (instead of left equal to zero).

The decrease of the error goes a bit further than $k=10$ because of the high correlation between the variables.

The strongest improvement happens before k=10. But with $k=10$ you are not there yet, and you are gonna select occasionally the wrong coefficients from the true model.

In addition, the additional variables may have some regularizing effect.

Note that after some point, around $k=16$, the error goes up when adding more variables.

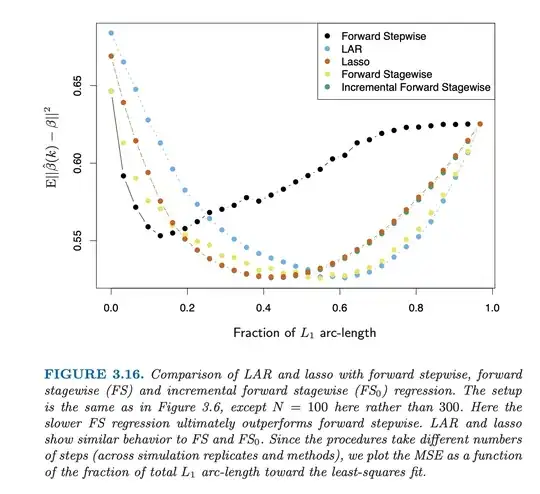

Reproduction of the graph

In the R-code at the end I am trying to reproduce the graph for the forward stepwise case. (this is also the question here: Recreating figure from Elements of Statistical Learning)

I can make the figure look similar

But, I needed to make some adjustment to the generation, using $\beta \sim N(1,0.4)$ instead of $\beta \sim N(0,0.4)$ (and still I do not get the same as the figure which starts at 0.95 and drops down to 0.65, while the MSE computed with the code here is much lower instead). Still, the shape is qualitatively the same.

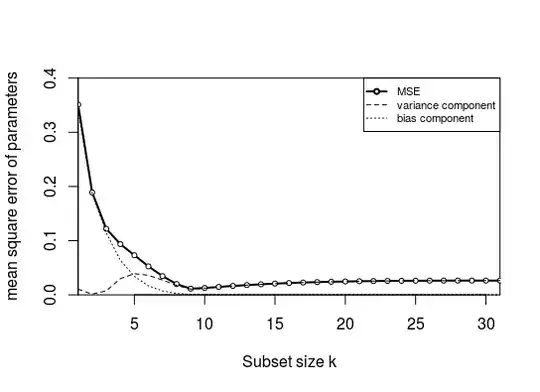

The error in this graph is not so much due to bias: I wanted to split the mean square error into bias and variance (by computing the coefficient's mean error and variance of the error). However, the bias is very low! This is due to the high correlation between the parameters. When you have a subset with only 1 parameter, then the selected parameter in that subset will compensate for the missing parameters (it can do so because it is highly correlated). The amount that the other parameters are too low will be more or less the amount that the selected parameter will be too high. So on average a parameter will be more or less as much too high as too low.

- The graph above is made with a correlation 0.15 instead of 0.85.

- In addition, I used a fixed $X$ and $\beta$ (Otherwise the bias would average to zero, more explained about that further).

Distribution of the error of the parameter estimate

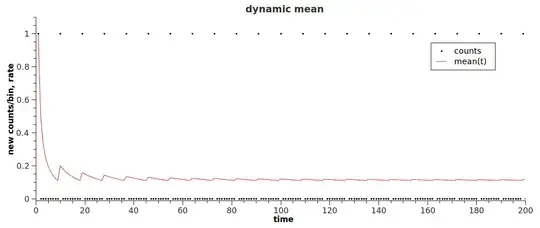

Below you see how the error in the parameter estimate $\hat\beta_1- \beta_1$ is distributed as a function of the subset size. This makes it easier to see why the change in the mean square error behaves like it does.

Note the following features

- There is a single peak for small subset sizes. This is because the parameter is often not included in the subset and the estimate $\hat\beta$ will be zero making the error $\hat\beta - \beta$ equal to $-\beta$. This peak decreases in size as the subset size increases and the probability for the parameter to be included increases.

- There is a more or less Gaussian distributed component that increases in size when the single peak decreases in size. This is the error when the parameter is included in the subset. For small subset sizes the error in this component is not centered around zero. The reason is that the parameter needs to compensate for the ommission of the other parameter (to which it is highly correlated). This makes that a computation of the bias is actually very low. It is the variance that is high.

The example above is for fixed $\beta$ and $X$. If you would change the $\beta$ for each simulation then the bias would be every time different. If you then compute the bias as $\mathbb{E}(\hat \beta - \beta)$ then you get very close to zero.

library(MASS)

### function to do stepforward regression

### adding variables with best increase in RSS

stepforward <- function(Y,X, intercept) {

kl <- length(X[1,]) ### number of columns

inset <- c()

outset <- 1:kl

best_RSS <- sum(Y^2)

### outer loop increasing subset size

for (k in 1:kl) {

beststep_RSS <- best_RSS ### RSS to beat

beststep_par <- 0

### inner looping trying all variables that can be added

for (par in outset) {

### create a subset to test

step_set <- c(inset,par)

step_data <- data.frame(Y=Y,X=X[,step_set])

### perform model with subset

if (intercept) {

step_mod <- lm(Y ~ . + 1, data = step_data)

}

else {

step_mod <- lm(Y ~ . + 0, data = step_data)

}

step_RSS <- sum(step_mod$residuals^2)

### compare if it is an improvement

if (step_RSS <= beststep_RSS) {

beststep_RSS <- step_RSS

beststep_par <- par

}

}

bestRSS <- beststep_RSS

inset <- c(inset,beststep_par)

outset[-which(outset == beststep_par)]

}

return(inset)

}

get_error <- function(X = NULL, beta = NULL, intercept = 0) {

### 31 random X variables, standard normal

if (is.null(X)) {

X <- mvrnorm(300,rep(0,31), M)

}

### 10 random beta coefficients 21 zero coefficients

if (is.null(beta)) {

beta <- c(rnorm(10,1,0.4^0.5),rep(0,21))

}

### Y with added noise

Y <- (X %*% beta) + rnorm(300,0,6.25^0.5)

### get step order

step_order <- stepforward(Y,X, intercept)

### error computation

l <- 10

error <- matrix(rep(0,31*31),31) ### this variable will store error for 31 submodel sizes

for (l in 1:31) {

### subdata

Z <- X[,step_order[1:l]]

sub_data <- data.frame(Y=Y,Z=Z)

### compute model

if (intercept) {

sub_mod <- lm(Y ~ . + 1, data = sub_data)

}

else {

sub_mod <- lm(Y ~ . + 0, data = sub_data)

}

### compute error in coefficients

coef <- rep(0,31)

if (intercept) {

coef[step_order[1:l]] <- sub_mod$coefficients[-1]

}

else {

coef[step_order[1:l]] <- sub_mod$coefficients[]

}

error[l,] <- (coef - beta)

}

return(error)

}

### correlation matrix for X

M <- matrix(rep(0.15,31^2),31)

for (i in 1:31) {

M[i,i] = 1

}

### perform 50 times the model

set.seed(1)

X <- mvrnorm(300,rep(0,31), M)

beta <- c(rnorm(10,1,0.4^0.5),rep(0,21))

nrep <- 500

me <- replicate(nrep,get_error(X,beta, intercept = 1)) ### this line uses fixed X and beta

###me <- replicate(nrep,get_error(X,beta, intercept = 1)) ### this line uses random X and fixed beta

###me <- replicate(nrep,get_error(X,beta, intercept = 1)) ### random X and beta each replicate

### storage for error statistics per coefficient and per k

mean_error <- matrix(rep(0,31^2),31)

mean_MSE <- matrix(rep(0,31^2),31)

mean_var <- matrix(rep(0,31^2),31)

### compute error statistics

### MSE, and bias + variance for each coefficient seperately

### k relates to the subset size

### i refers to the coefficient

### averaging is done over the multiple simulations

for (i in 1:31) {

mean_error[i,] <- sapply(1:31, FUN = function(k) mean(me[k,i,]))

mean_MSE[i,] <- sapply(1:31, FUN = function(k) mean(me[k,i,]^2))

mean_var[i,] <- mean_MSE[i,] - mean_error[i,]^2

}

### plotting curves

### colMeans averages over the multiple coefficients

layout(matrix(1))

plot(1:31,colMeans(mean_MSE[1:31,]), ylim = c(0,0.4), xlim = c(1,31), type = "l", lwd = 2,

xlab = "Subset size k", ylab = "mean square error of parameters",

xaxs = "i", yaxs = "i")

points(1:31,colMeans(mean_MSE[1:31,]), pch = 21 , col = 1, bg = 0, cex = 0.7)

lines(1:31,colMeans(mean_var[1:31,]), lty = 2)

lines(1:31,colMeans(mean_error[1:31,]^2), lty = 3)

legend(31,0.4, c("MSE", "variance component", "bias component"),

lty = c(1,2,3), lwd = c(2,1,1), pch = c(21,NA,NA), col = 1, pt.bg = 0, xjust = 1,

cex = 0.7)

### plotting histogram

layout(matrix(1:5,5))

par(mar = c(4,4,2,1))

xpar = 1

for (col in c(1,4,7,10,13)) {

hist(me[col,xpar,], breaks = seq(-7,7,0.05),

xlim = c(-1,1), ylim = c(0,500),

xlab = "", ylab = "", main=paste0("error in parameter ",xpar," for subset size ",col),

)

}