What is the state of the art knowledge on how generalization in interpolating models looks with respect to the number of parameters?

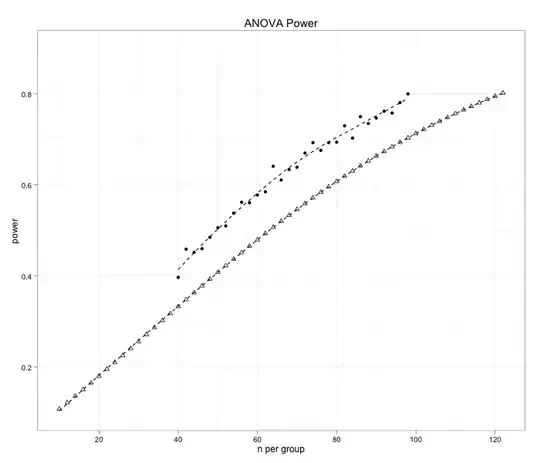

(Picture from Mikhail Belkin's talk on https://www.youtube.com/watch?v=OBCciGnOJVs&t=1185s)

In other words, can overfitting always be overcome with adding more parameters?

Let's say we don't use regularization, but train only for some natural-looking interpolation loss. I'm mainly interested in what is true for neural networks with several layers, but anything goes.