The answer above is very nice and completely answers the question, but I will instead give a general formula for the expected square of a sum and apply it to the specific example mentioned here.

For any set of constants $a_1, ..., a_n$ it is a fact that

$$ \left( \sum_{i=1}^{n} a_i \right)^2 = \sum_{i=1}^{n} \sum_{j=1}^{n} a_{i} a_{j} $$

This holds by the distributive property, and becomes clear when you consider what you're doing when you calculate $(a_1 + ... + a_n) \cdot (a_1 + ... + a_n)$ by hand: every $a_i$ in the first factor gets multiplied by every $a_j$ in the second.
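If you want to convince yourself numerically, here is a minimal sketch that checks the identity on a small list of constants. The values in `a` are arbitrary and chosen only for illustration.

```python
# Check (a_1 + ... + a_n)^2 == sum_i sum_j a_i * a_j
# on an arbitrary, illustrative list of constants.
a = [3, -1, 4, 2]

square_of_sum = sum(a) ** 2
double_sum = sum(a_i * a_j for a_i in a for a_j in a)

print(square_of_sum, double_sum)  # both values are equal
```

The double sum runs over all $n^2$ ordered pairs $(i, j)$, including the $n$ pairs with $i = j$, which is exactly the split used later on.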

Therefore, for a sample of random variables $X_1, ..., X_n$, regardless of the distributions,

$$ E \left( \left[ \sum_{i=1}^{n} X_i \right]^2 \right) = E \left( \sum_{i=1}^{n} \sum_{j=1}^{n} X_i X_j \right) = \sum_{i=1}^{n} \sum_{j=1}^{n} E(X_i X_j)$$

provided that these expectations exist.

In the example from the problem, $X_1, ..., X_n$ are iid ${\rm exponential}(\lambda)$ random variables, which tells us that $E(X_{i}) = 1/\lambda$ and ${\rm var}(X_i) = 1/\lambda^2$ for each $i$. By independence, for $i \neq j$, we have

$$E(X_i X_j) = E(X_i) \cdot E(X_j) = \frac{1}{\lambda^2}$$

There are $n^2 - n$ of these terms in the sum. When $i = j$, we have

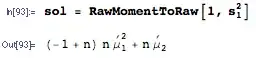

$$ E(X_i X_j) = E(X_{i}^{2}) = {\rm var}(X_{i}) + \left[ E(X_{i}) \right]^2 = \frac{1}{\lambda^2} + \frac{1}{\lambda^2} = \frac{2}{\lambda^2} $$

and there are $n$ of these terms in the sum. Therefore, using the formula above,

$$ E \left( \sum_{i=1}^{n} X_i \right)^2

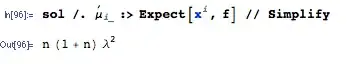

= \sum_{i=1}^{n} \sum_{j=1}^{n} E(X_i X_j) = (n^2 - n)\cdot\frac{1}{\lambda^2} + n \cdot \frac{2}{\lambda^2} = \frac{n^2 + n}{\lambda^2} $$

is your answer.
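As a sanity check, here is a rough sketch that computes the closed form exactly and compares it with a Monte Carlo estimate. The function names `second_moment_of_sum` and `simulate`, and the choices $n = 5$, $\lambda = 2$, the number of trials and the seed, are my own illustrative assumptions and not part of the question. Note that `random.expovariate` takes the rate $\lambda$, not the mean.

```python
import random
from fractions import Fraction

def second_moment_of_sum(n, lam):
    """Exact E[(X_1 + ... + X_n)^2] for iid exponential(lam) variables."""
    lam = Fraction(lam)
    off_diagonal = (n * n - n) * (1 / lam**2)  # n^2 - n terms with i != j
    diagonal = n * (2 / lam**2)                # n terms with i == j
    return off_diagonal + diagonal             # equals (n^2 + n) / lam^2

def simulate(n, lam, trials=100_000, seed=0):
    """Monte Carlo estimate of E[(X_1 + ... + X_n)^2]."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        s = sum(rng.expovariate(lam) for _ in range(n))
        total += s * s
    return total / trials

n, lam = 5, 2.0
exact = second_moment_of_sum(n, lam)   # (25 + 5) / 4
estimate = simulate(n, lam)
print(float(exact), estimate)
```

The simulated value should land close to the exact one, up to sampling noise that shrinks as `trials` grows.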