I have some questions.

In a linear model, I want to force the intercept to zero.

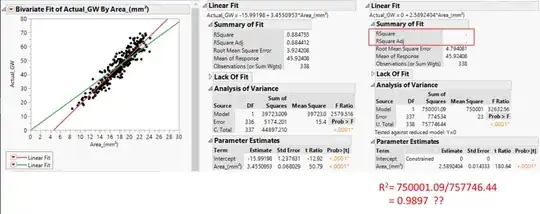

The program I used (JMP) does not report R-squared when the intercept is forced to zero.

So I calculated R-squared myself as SSR/SST, using the sums of squares the program reports. The result was higher than the R-squared of the model with an intercept.

Is this possible? Least-squares fitting minimizes the error, so I would expect that constraining the intercept artificially should decrease R-squared. Also, the fitted line for the zero-intercept model (the green line in the graph) does not look like it has an R-squared of 99%.
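To make the comparison concrete, here is a minimal pure-Python sketch that computes R-squared both ways for a fit through the origin. It assumes that the SST used for SSR/SST is the *uncentered* total sum of squares, Σy², which is common for no-intercept fits; I have not confirmed which SST JMP reports. For a least-squares fit through the origin, Σy² = Σŷ² + SSE, so SSR/SST computed with uncentered sums equals 1 − SSE/Σy². The data below are hypothetical and only for illustration.

```python
# Hypothetical illustrative data (not the data from the plot above).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [6.0, 5.0, 8.0, 7.0, 9.0]


def fit_with_intercept(x, y):
    """Ordinary least squares y = b0 + b1*x; returns (b0, b1)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return my - b1 * mx, b1


def fit_through_origin(x, y):
    """Least-squares slope for y = b*x (intercept forced to 0): sum(xy)/sum(x^2)."""
    return sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)


def sse(y, yhat):
    """Residual (error) sum of squares."""
    return sum((yi - fi) ** 2 for yi, fi in zip(y, yhat))


y_mean = sum(y) / len(y)
sst_centered = sum((yi - y_mean) ** 2 for yi in y)  # sum of (y - ybar)^2
sst_uncentered = sum(yi ** 2 for yi in y)           # sum of y^2

# Model with an intercept: the usual (centered) R-squared.
b0, b1 = fit_with_intercept(x, y)
sse_int = sse(y, [b0 + b1 * xi for xi in x])
r2_int = 1 - sse_int / sst_centered

# Model through the origin.
b = fit_through_origin(x, y)
yhat_origin = [b * xi for xi in x]
sse_origin = sse(y, yhat_origin)
ssr_uncentered = sum(f ** 2 for f in yhat_origin)   # sum of yhat^2
r2_origin_uncentered = ssr_uncentered / sst_uncentered  # equals 1 - SSE/sum(y^2)
r2_origin_centered = 1 - sse_origin / sst_centered      # can even be negative

print(f"with intercept:   SSE={sse_int:.4f}  R2={r2_int:.4f}")
print(f"through origin:   SSE={sse_origin:.4f}  "
      f"R2(uncentered)={r2_origin_uncentered:.4f}  "
      f"R2(centered)={r2_origin_centered:.4f}")
```

With data like these, the zero-intercept model has a larger error (SSE) than the model with an intercept, yet its uncentered R-squared comes out higher, because the denominator Σy² is much larger than Σ(y − ȳ)². The two R-squared values are therefore measured against different baselines and are not directly comparable.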

If this R-squared is not correct, how can I calculate R-squared when the intercept is forced to zero?

Many thanks,