Sorry for the Russian labels. I am having trouble understanding the formula for the coefficient of determination. In particular, how can we prove that $SSR+SSE=SST$?

1 Answer

I assume that, rather than a formal proof of the equation, you are mainly looking for the intuition behind it.

The abbreviations SSE and SSR can be confusing because their meanings are swapped depending on the author: SSE can be the explained Sum of Squares or the error Sum of Squares, while SSR can be the residual Sum of Squares or the regression Sum of Squares.

As a preliminary note, the Sum of Squares is one way to measure our errors. There are various ways to express or weight errors, depending on the context (that is, on which kinds of errors we most want to avoid). Here we work with a Sum of Squares instead of a plain sum because it puts greater weight on very large differences (see this answer for further explanation).

We know that the coefficient of determination is generally understood as the proportion of variability that is explained by your model. Let's see the equation itself and some equivalent formulations of it: $$R^2 = 1 - \frac{SS_{residual}}{SS_{total}} = \frac{SS_{total} - SS_{residual}}{SS_{total}} = \frac{SS_{explained}}{SS_{total}}$$
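
To make these equivalent forms concrete, here is a minimal numerical sketch. It assumes an ordinary least-squares line with an intercept, fitted with `np.polyfit` on synthetic data; the data, the seed and the variable names are made up for illustration and are not taken from your plot.

```python
import numpy as np

# Synthetic data (an assumption for illustration only).
rng = np.random.default_rng(0)
x = np.linspace(0, 10, 50)
y = 2.0 + 0.5 * x + rng.normal(scale=1.0, size=x.size)

# Ordinary least-squares line with an intercept.
# np.polyfit returns coefficients from highest degree to lowest.
slope, intercept = np.polyfit(x, y, deg=1)
y_hat = intercept + slope * x   # fitted values
y_bar = y.mean()                # baseline prediction: the mean response

ss_total = np.sum((y - y_bar) ** 2)          # errors of the mean-only model
ss_residual = np.sum((y - y_hat) ** 2)       # errors left after the regression
ss_explained = np.sum((y_hat - y_bar) ** 2)  # improvement of regression over the mean

r2_from_residual = 1 - ss_residual / ss_total
r2_from_explained = ss_explained / ss_total

print(ss_explained + ss_residual, ss_total)
print(r2_from_residual, r2_from_explained)
```

Both printed pairs should agree up to floating-point rounding. If the model is fitted without an intercept, or by something other than least squares, the two forms of $R^2$ can differ.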

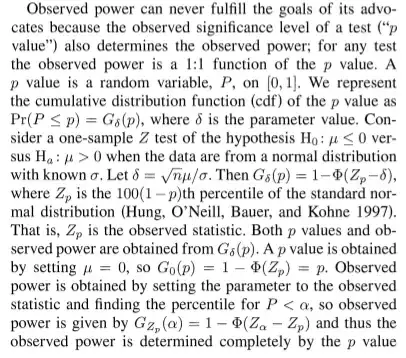

Conceptually, we have defined a quantity to be the total of our squared errors, $SS_{total}$. But in theory, we can be as wrong as we like when trying to predict an observed value $Y_i$ (you can put your predicted value as far off the chart as you want!). From the image in your question, you can see that we define a baseline for how wrong our prediction can be by predicting with the mean of the response variable, $\bar{Y}$. So $SS_{total}$ is defined as the sum of squared errors from predicting with the mean response.

The distance from the mean $\bar{Y}$ to our observed value $Y_i$ then splits into two segments, each named according to its origin. In your graph, you have the $SS_{regression}$ (or $SS_{explained}$), which is what your regression model explains: the errors that are explained away when we use $\hat{Y}_i$ instead of $\bar{Y}$ to predict. This is the quantity that we want our coefficient of determination to measure.

The remaining segment is the $SS_{error}$ (or $SS_{residual}$), the variation that is not captured by either our model or our mean value. This is pure unexplained error, which we assume to be noise. It is the distance between the real observed value $Y_i$ and our regression fitted value $\hat{Y}_i$.

So, to wrap this up, $SSR + SSE = SST$ comes from this subdivision: the variability captured by our regression model plus the variability that it cannot capture, which together sum to the total error (from using our most basic model, the mean value).
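
On the squares: the segments add up point by point, but it is not obvious that their squares do. As a sketch, assuming the model is fitted by ordinary least squares and includes an intercept, write the residual as $e_i = Y_i - \hat{Y}_i$ and expand:

$$\begin{aligned}
SS_{total} = \sum_i (Y_i - \bar{Y})^2 &= \sum_i \big[(Y_i - \hat{Y}_i) + (\hat{Y}_i - \bar{Y})\big]^2 \\
&= \underbrace{\sum_i (Y_i - \hat{Y}_i)^2}_{SS_{residual}} + \underbrace{\sum_i (\hat{Y}_i - \bar{Y})^2}_{SS_{explained}} + 2\sum_i e_i\,(\hat{Y}_i - \bar{Y})
\end{aligned}$$

Under those assumptions, the least-squares normal equations give $\sum_i e_i = 0$ and $\sum_i e_i \hat{Y}_i = 0$, so the cross term is

$$2\sum_i e_i\,(\hat{Y}_i - \bar{Y}) = 2\sum_i e_i \hat{Y}_i - 2\bar{Y}\sum_i e_i = 0,$$

and only the two sums of squares remain. For a single point the squared segments generally do not add up; the equality holds only after summing over all points, and it can fail for a model without an intercept or one not fitted by least squares.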


Thanks. It became much clearer to me. I agree that this equation looks obvious, and I understand that the length of the segment (between the data point and the worst regression model) is the sum of the two smaller ones. But the squares really confuse me. I understand that this is the variance, but I do not understand how it would look on this plot. And will this equation still hold with the squares? – TImur Nazarov Oct 29 '20 at 14:20


Ah, I understand the issue now. I'll edit an extra paragraph explaining the origin of the squaring. – Kuku Oct 29 '20 at 15:31