I am trying to run a binary classification problem on people with diabetes and non-diabetes.

For labeling my datasets, I followed a simple rule. If a person has T2DM in his medical records, we label him as positive cases (diabetes) and if he doesn't have T2DM, we label him as Non-T2DM.

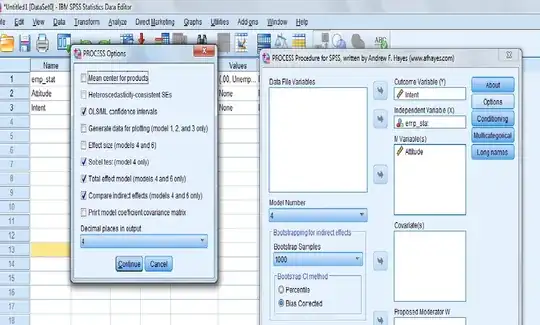

Since there are a lot of data points for each subject, meaning he has a lot of lab measurements, a lot of drugs taken, a lot of diagnoses recorded, etc, I ended up with 1370 features for each patient.

In my training, I have 2475 patients and in my testing, I have 2475 patients. (I already tried 70:30. Now am trying 50:50 still the same result (as 70:30))

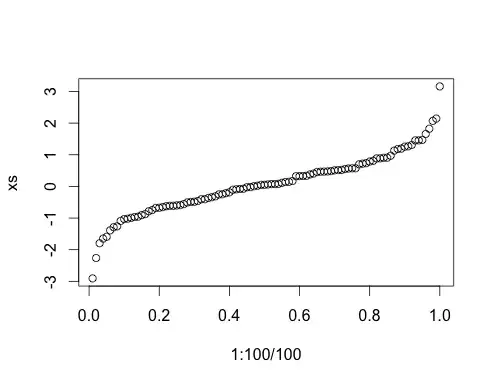

My results are too good to be true as shown below

a) Should I reduce the number of features?

b) Is it overfitting?

c) Should I retain only the top features like top 20 features, top 10 features etc?

d) can help me understand why is this happening?