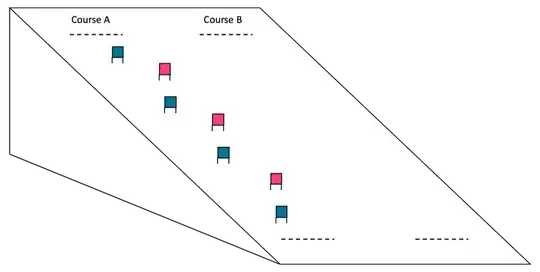

We are currently performing a large learning experiment on alpine skiing. In this experiment, we test skiers on three different slalom courses. We compute performance by calculating the average of the two best runs for each of the three courses and divide it by the time they use to ski the hill straight down ('straight gliding'). I have added this figure for illustration:
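A minimal Python sketch of the score described above. It assumes (this is not stated in the post) that each course has its own straight-gliding time and that "best" means fastest; the function names and the example times are hypothetical. A plain ratio of two times is always positive and falls below 1 when the slalom is faster than straight gliding; taking its logarithm, shown here as one option, gives a score that is negative in that case.

```python
import math
from statistics import mean


def course_score(slalom_times, straight_time):
    """Mean of the two fastest slalom runs divided by the straight-gliding time.

    Values below 1 mean the skier is faster through the slalom than when
    gliding straight down the same hill.
    """
    if len(slalom_times) < 2:
        raise ValueError("need at least two slalom runs per course")
    best_two = sorted(slalom_times)[:2]  # best runs = fastest = smallest times
    return mean(best_two) / straight_time


def log_course_score(slalom_times, straight_time):
    """Log of the ratio: negative when the slalom is faster than straight gliding."""
    return math.log(course_score(slalom_times, straight_time))


# Hypothetical times in seconds for one skier on one course.
runs = [25.1, 24.3, 24.9]
straight = 25.0
print(round(course_score(runs, straight), 3))  # 0.984
print(log_course_score(runs, straight) < 0)    # True
```

Here the two best runs are 24.3 s and 24.9 s, so the score is 24.6 / 25.0 = 0.984.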

Doing so, we see that good skiers have a negative ratio score, meaning that they are faster when they ski a slalom course with turns than when they glide straight down. That's cool! After this, we use stratified randomization to assign the skiers to two groups. One group skis all three courses on a single day, in random order; the other group skis only one course per day. Three days after this training block, we test the skiers again on the same three courses to compare their learning. To this end, we are going to fit an ANCOVA model.

My concern, however, is that we could (in theory) observe negative learning from pre- to post-test simply because of different snow conditions. I don't think we ever will, but I started to wonder whether it would be better to normalize the data so that we can compare performance across different days more fairly. Does this sound reasonable? If so, what can I do?
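One way to sketch the analysis, assuming the straight-gliding run is repeated on each test day: because each score divides slalom time by a straight-gliding time from the same hill on the same day, a change in snow that slows both runs by the same factor cancels out of the ratio, so the score is already referenced to that day's conditions. The post-test score can then be regressed on group and pre-test score (an ANCOVA with a common slope). The pure-Python least-squares fit below is only an illustration: the function names, the 0/1 group coding and the data are hypothetical, and it returns point estimates only. In practice a statistics package (for example statsmodels' `ols("post ~ group + pre", data=df).fit()`) would also give standard errors and tests.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def ancova(pre, post, group):
    """OLS fit of post = b0 + b_group * group + b_pre * pre via normal equations.

    Hypothetical coding: group = 0 for one course per day,
    group = 1 for all three courses on a single day.
    Returns [b0, b_group, b_pre].
    """
    rows = [[1.0, float(g), p] for g, p in zip(group, pre)]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, post)) for i in range(3)]
    return solve(xtx, xty)


# Hypothetical day-normalized scores, constructed so the true group effect is -0.05.
pre = [0.98, 1.02, 1.05, 0.97, 1.01, 1.04]
group = [0, 0, 0, 1, 1, 1]
post = [0.1 - 0.05 * g + 0.8 * p for g, p in zip(group, pre)]
b0, b_group, b_pre = ancova(pre, post, group)
print(round(b_group, 6))  # -0.05
```

The group coefficient is the adjusted difference in post-test score between the two training schedules at equal pre-test score.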