I am training a CNN that takes 128x128x3 color images as input and predicts the coordinates of 4 landmarks on each image (i.e., there are 2 * 4 = 8 values to predict if we count the x and y coordinates separately). All of the coordinate values are scaled to [-1, 1]. Following is my model class:

    # Assumed import; the original snippet does not show where `layers` and `models` come from.
    from tensorflow.keras import layers, models


    class LandmarkLocalizerCNN:

        def __init__(self, input_shape, landmark_point_count):
            self.input_shape = input_shape
            self.landmark_point_count = landmark_point_count

        def first_level_cnn(self):
            model = models.Sequential()

            # Block 1: four 5x5 convolutions (8, 8, 16, 16 filters), then 2x2 max-pooling.
            # input_shape is only needed on the first layer of a Sequential model.
            model.add(layers.Conv2D(8, (5, 5), padding='same', activation='relu', input_shape=self.input_shape))
            model.add(layers.Conv2D(8, (5, 5), padding='same', activation='relu'))
            model.add(layers.Conv2D(16, (5, 5), padding='same', activation='relu'))
            model.add(layers.Conv2D(16, (5, 5), padding='same', activation='relu'))
            model.add(layers.MaxPooling2D((2, 2)))

            # Block 2: four 3x3 convolutions with 32 filters.
            model.add(layers.Conv2D(32, (3, 3), padding='same', activation='relu'))
            model.add(layers.Conv2D(32, (3, 3), padding='same', activation='relu'))
            model.add(layers.Conv2D(32, (3, 3), padding='same', activation='relu'))
            model.add(layers.Conv2D(32, (3, 3), padding='same', activation='relu'))
            model.add(layers.MaxPooling2D((2, 2)))

            # Block 3: four 3x3 convolutions with 64 filters.
            model.add(layers.Conv2D(64, (3, 3), padding='same', activation='relu'))
            model.add(layers.Conv2D(64, (3, 3), padding='same', activation='relu'))
            model.add(layers.Conv2D(64, (3, 3), padding='same', activation='relu'))
            model.add(layers.Conv2D(64, (3, 3), padding='same', activation='relu'))
            model.add(layers.MaxPooling2D((2, 2)))

            # Block 4: two 3x3 convolutions with 128 filters.
            model.add(layers.Conv2D(128, (3, 3), padding='same', activation='relu'))
            model.add(layers.Conv2D(128, (3, 3), padding='same', activation='relu'))
            model.add(layers.MaxPooling2D((2, 2)))

            # Fully connected head with two dropout layers.
            model.add(layers.Flatten())
            model.add(layers.Dense(64, activation='relu'))
            model.add(layers.Dense(32, activation='relu'))
            model.add(layers.Dropout(0.2))
            model.add(layers.Dense(16, activation='relu'))
            model.add(layers.Dense(16, activation='relu'))
            model.add(layers.Dropout(0.2))
            model.add(layers.Dense(16, activation='relu'))
            model.add(layers.Dense(16, activation='relu'))

            # Linear output: one x and one y value per landmark.
            model.add(layers.Dense(2 * self.landmark_point_count))

            return model
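
For context on the targets this model regresses, here is a minimal sketch of how pixel coordinates could be scaled to [-1, 1] and back. The exact scaling I use is not shown here, so the linear map from the pixel range [0, size - 1] and the helper names `normalize_landmarks` and `denormalize_landmarks` are assumptions for illustration only.

```python
# Hypothetical helpers: assumes a linear map from pixel range [0, size - 1] to [-1, 1].
IMAGE_SIZE = 128  # images are 128x128


def normalize_landmarks(points_px, size=IMAGE_SIZE):
    """Flatten [(x, y), ...] pixel coordinates into [x1, y1, ...] scaled to [-1, 1]."""
    flat = []
    for x, y in points_px:
        flat.append(2.0 * x / (size - 1) - 1.0)
        flat.append(2.0 * y / (size - 1) - 1.0)
    return flat


def denormalize_landmarks(values, size=IMAGE_SIZE):
    """Invert normalize_landmarks: [x1, y1, ...] in [-1, 1] back to pixel (x, y) pairs."""
    pixels = [(v + 1.0) * (size - 1) / 2.0 for v in values]
    return list(zip(pixels[0::2], pixels[1::2]))


# Four landmarks placed at the image corners give the extreme target values.
corners = [(0, 0), (127, 0), (127, 127), (0, 127)]
target = normalize_landmarks(corners)
print(len(target))  # 8 values: 2 per landmark
print(target)
```

With 4 landmarks this produces the 8-element target vector that the final `Dense` layer is expected to match.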

And here is the created model:

    first_level_cnn_model = LandmarkLocalizerCNN(input_shape=(128, 128, 3), landmark_point_count=4).first_level_cnn()
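
As a rough sanity check on this architecture, the sketch below traces the feature-map shape through the four conv/pool blocks using plain arithmetic. It assumes the Keras defaults, where `padding='same'` keeps height and width and `MaxPooling2D((2, 2))` halves them; `trace_shapes` is a hypothetical helper, not part of my training code.

```python
def trace_shapes(input_shape=(128, 128, 3), landmark_point_count=4):
    """Return the shape after each max-pool, the Flatten size, and the output size."""
    height, width, _ = input_shape
    # Number of filters in the last convolution of each block (each block ends in a 2x2 pool).
    block_filters = [16, 32, 64, 128]
    shapes = []
    for filters in block_filters:
        # 'same' convolutions keep height/width; the 2x2 pool halves both.
        height, width = height // 2, width // 2
        shapes.append((height, width, filters))
    flattened = height * width * block_filters[-1]
    # Weights plus biases of the first Dense(64) layer after Flatten.
    first_dense_params = flattened * 64 + 64
    outputs = 2 * landmark_point_count
    return shapes, flattened, first_dense_params, outputs


shapes, flattened, first_dense_params, outputs = trace_shapes()
print(shapes)              # shape after each pooling layer
print(flattened)           # size of the Flatten output
print(first_dense_params)  # parameters in the first Dense layer
print(outputs)             # number of predicted values
```

Under these assumptions the last pooling layer outputs 8x8x128, so `Flatten` yields 8192 features and the first `Dense(64)` layer alone holds 524,352 parameters.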

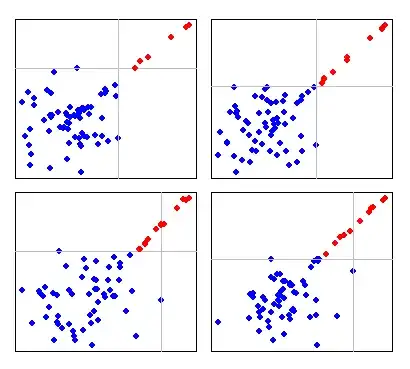

After training for 375 steps, I got the following metrics:

Here are some of my observations:

- If I remove the dropout layers, the training accuracy quickly rises to around 0.9, even with fewer layers.

- With the dropout layers, the training accuracy doesn't go as high, but in either case the validation accuracy hardly exceeds 0.6.

- In the current architecture, both the training and validation plots fluctuate and show some strange behavior. For training, accuracy and loss seem to be negatively correlated, but for validation they seem to be positively correlated. Eventually both saturate and show no further improvement.

So what is the main problem my model is suffering from? I tried increasing and decreasing the number of layers, and adding or removing dropout layers, but that only changes the training accuracy. The validation accuracy hardly exceeds 0.6 whatever I do, so how can I make my model perform better on unseen data?
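
To make "perform better" concrete for continuous coordinate outputs, one possible measure is the mean distance in pixels between predicted and true landmarks. This is only a sketch and not the metric shown in my plots: the helper name `mean_landmark_error_px` is hypothetical, and it assumes the [-1, 1] range spans `size - 1` pixels.

```python
import math


def mean_landmark_error_px(y_true, y_pred, size=128):
    """Mean Euclidean distance in pixels between true and predicted landmarks.

    y_true, y_pred: flat lists [x1, y1, ..., x4, y4] with values in [-1, 1].
    Assumes the [-1, 1] range spans size - 1 pixels (hypothetical scaling).
    """
    scale = (size - 1) / 2.0  # pixels per unit of normalized coordinate
    distances = []
    for i in range(0, len(y_true), 2):
        dx = (y_pred[i] - y_true[i]) * scale
        dy = (y_pred[i + 1] - y_true[i + 1]) * scale
        distances.append(math.hypot(dx, dy))
    return sum(distances) / len(distances)


y_true = [-1.0, -1.0, 1.0, -1.0, 1.0, 1.0, -1.0, 1.0]
y_pred = [-1.0, -1.0, 1.0, -1.0, 1.0, 1.0, -1.0, 0.0]  # last landmark is off by 1.0 in y
error = mean_landmark_error_px(y_true, y_pred)
print(error)  # 15.875
```

Here one landmark is off by 63.5 pixels and the other three are exact, so the mean error over the 4 landmarks is 15.875 pixels.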