Simulation

I have been trying some bit of modeling to see how the leave-one-out estimators converge. In my simulation (one-dimensional, but I don't believe that matters), I get that they are strongly correlated (ie there is not much variance between different $-i$).

When the $n$ get's large then the values of $$\underset{x\in J}{\sup} |\widehat{f}_{-i}(x)-f(x)|$$ are very similar for different values of $i$.

This makes sense, leaving one $i$ out versus another $i$ is not much effect. I wonder whether something is missing?

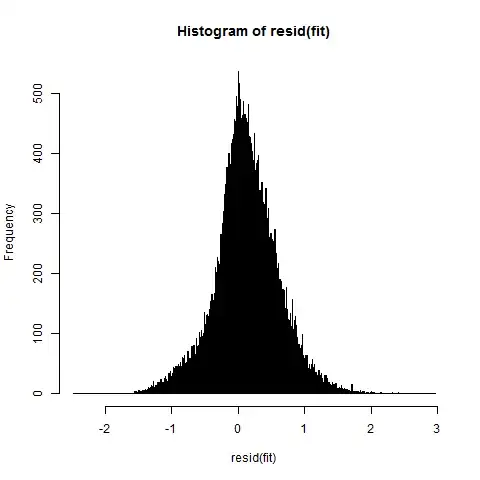

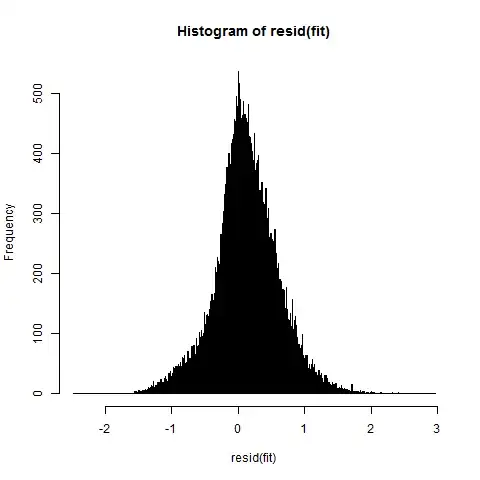

The simulation below is just a quick plot of some errors computed for different $n$ with different $i$, and I guess that the $\mathcal{o}_P(a_n)$ relates to the variance which is not exactly the same, but I guess that the plot shows that the different $i$ are not so different from each other and the averaging won't be having such a big effect for large $n$.

# sample size

ns <- 1000

# kernel estimator

f_hat <- function(x, i, obsf,obsx) {

### some function for the bandwith

h <- 1/length(obsf)

### distance from the sample point

d <- x-obsx

### Gaussian as kernel function

K <- dnorm(d,mean=0,sd=h)*obsf

## an average over the kernel functions

f <- mean(K[-i])

return(f)

}

f_hat <- Vectorize(f_hat, vectorize.args = 'x')

# some function to be estimated

f <- function(x) {

sin(x*10)+sin(x*2)

}

# the set of points to estimate

x <- seq(0,1,0.01)

ni <- lenght(x)

z <- f(x)

# the data

xs <- runif(ns)

fs <- f(xs)+rnorm(ns,0,0.1)

### how the estimation looks like

plot(x,z, type = "l", lwd = 2)

points(xs,fs, pch = 21, col = 1, bg = 1, cex = 0.1)

lines(x,f_hat(x,1,fs,xs), col = 2, lty = 2, lwd = 2)

### repeating for many different sample sizes

nrange <- floor(2^c(seq(6.5,16,0.25)))

err <- matrix(rep(0,length(nrange)*90),length(nrange))

j = 0

for (ns in nrange) {

j=j+1

xs <- runif(ns)

fs <- f(xs)+rnorm(ns,0,0.1)

for (i in 1:90) {

### the maximum error for the points x

### computed for 90 different i

err[j,i] <- max(abs(f_hat(x,i,fs,xs)-f(x)))

}

}

plot(-1,-1, log = "xy", xlim = range(nrange), ylim = range(err),

xlab = "n", ylab = "error size")

for (i in 1:10) {

lines(nrange,err[,i],col = rgb(0,0,0,0.3))

}

[![simultion][1]][1]

Intuition

At first, I thought that maybe the different $i$ have large differences such that the averaging procedure is reducing the variance/error by diluting the probability of selecting a 'bad' $i$.

But with this plot I guess that, either I misunderstand the concept, or the question is missing some details that should make the error values for the leave on out estimators more different for different $i$.

The idea that the variance of an average can converge faster than the variance of the elements is not strange.

Say you have

$$S = \frac{1}{n} \sum_{i=1}^n X_{i,n} $$

Where $X_{i,n}$ are independent random variables (and with the same mean) with $\text{Var}(X_{i,n}) \in \mathcal{o}(f(n))$. Then $\text{Var}(S) \in \mathcal{o}(f(n)/\sqrt{n})$.

I am not sure whether this is exactly behind $\mathcal{o}_p({a_n})$ term. Whether it is about the convergence of the variance of the error term, ie. the difference with respect to it's expected value. Or whether it is about the convergence of the mean square error, ie. the difference with respect to zero.