(This used to be an edit to the original message, but a comment requested to post this as an answer instead, so here we are)

F-Test with discounted Sample Sizes

The autocorrelation violates the iid (to be precise the first i ;-) assumption of an F-test. Consequently, the autocorrelation leads to higher variability of estimated statistics of the series, i.e. sample mean, sample variance etc. Or in other words, the information present in correlated samples about an estimated statistic is less than in uncorrelated samples.

O’Shaughnessy and Cavanaugh (2015) propose a method to perform t-tests for autocorrelated time series data by simply discounting the sample sizes. For large enough sample size $n$ (they say $n > 50$ is usually enough) the discounted sample size $n_e$ is:

$$n_e = n\frac{1-\hat{\rho}_1}{1+\hat{\rho}_1}$$

where $\hat{\rho}_1$ is the auto-correlation of time shift 1:

$$ \hat{\rho}_1 = \frac{\sum_{t=1}^{n-1} (y_t - \bar{y})(y_{t+1} - \bar{y})}{\sum_{t=1}^n (y_t - \bar{y})^2}$$

Can I do the same discounting for the F-test? What I mean is to calculate the F-test statistic as usual with the given sample sizes $n_1$ and $n_2$ of my time series below. Yet, when determining the critical p-value, I use the discounted sample sizes:

$$ p = P(F(n_{e1} - 1, n_{e2} - 1 ) \geq F_{\text{calculated}}| H_0)$$

Consequently, I have a much more conservative requirement, i.e. the variance reduction must be much larger compared to iid samples to show significance. Is this a statistically sound approach?

Update: Confidence Intervals with Block-Bootstrapping

In case I do block-bootstrapping (either non-overlapping or moving, they both yield pretty much the very same stats) with a sufficiently long block length, I get pretty much the same answer as using the discounted F-test above. For my data the discounted F-test is significant for p < 0.01. For block bootstrapping it's significant for p<0.025. I guess this is the expected price to pay for using a model free approach in comparison to a Gaussianity assuming F-test.

For anyone who is interested, here are the details of the block bootstrapping approach (based on this blogpost):

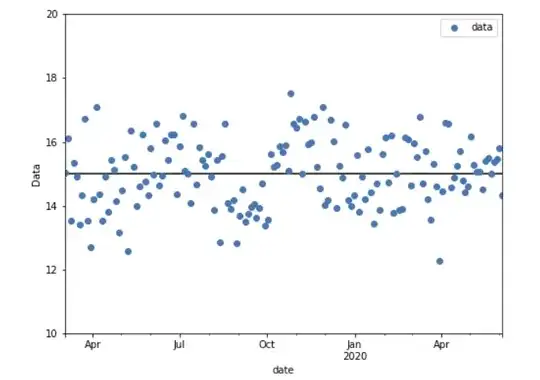

I look at the autocorrelation function to pick a sufficiently large block length.

I create $n$ moving blocks of length $k$ from both time series. E.g. if

$$y_0, y_1, y_2, ..., y_{n-1} $$

is one of my time series I create blocks of length $k$ as

block 1: $y_0, ..., y_{k}$

block 2: $y_1, ..., y_{k+1}$ ... block n: $y_{n-1}, y_0, ... y_{k-1}$

Note to avoid the bias of less often sampling the beginning or end of the time series, I wrap both time series around to have a circular array.

I take a lot of bootstrap samples of $m = \text{round}(y / k)$ blocks with replacement. I stitch them back together to create a lot of bootstrapped time series.

I compute for each bootstrapped series the variance. I look at the 2.5 and 97.5 percentile of the resulting variance distribution of bootstrapping both of my initial time series and check if they overlap. They don't, so there's a significant difference.