When doing a Principal Component Analysis, how do I decide whether the correlation between an original variable and a given principal component is significant? I read that you just look for the highest absolute coefficient values, but at what point are these values considered high or low? I want to justify why I think the correlations are significant when I interpret the PCs in my master's thesis. I could not find anything in the literature about a threshold value.

-

"Significance," to make any sense, requires you to specify a probability model for the data. What are you assuming? Usually PCA is applied as an *exploratory* tool, where, loosely speaking, the idea of "significance" is supplanted by detecting useful or interesting patterns. There's a good bit of literature on that, starting with recommendations to look for an elbow in the scree plot or to accept all eigenvalues greater than 1, followed by various reactions to those simplistic rules. – whuber Sep 08 '20 at 14:46

-

I think "significant" was not the right word choice; I meant "important for interpreting the PC." For example, if I say that original variables whose weights for a given PC are above 0.5 are strongly correlated with that PC, how would I justify selecting 0.5? I thought the Kaiser rule and the scree plot are tools for selecting the number of PCs to keep? – Delloman Sep 08 '20 at 15:27

-

Please also read this: https://stats.stackexchange.com/a/143949/3277. The loadings are the correlations you are speaking of. Note that their squares are "contributions". – ttnphns Sep 12 '20 at 15:34

-

Thanks for this reference. I did a correlation-based PCA. If I understand this right, I will rarely see squared rescaled loadings around 1, because the loadings are smaller than 1. This means that only when a loading is around 0.9 or above is the squared loading close to 1, and then you can say that the PC defines the variable almost alone. Did I get it right? Also, both the horizontal and vertical sums of squared entries in the loading matrix amount to 1 in my case. Is this due to using rescaled loadings? – Delloman Sep 12 '20 at 17:46
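As a rough illustration of the row and column sums mentioned above, here is a minimal NumPy sketch on synthetic data (the data, seed, and variable names are made up for illustration and are not the asker's). It assumes a correlation-based PCA with all components kept. In that setting the unit-length eigenvectors have squared sums of 1 along both rows and columns, whereas the loadings (eigenvectors scaled by the square roots of the eigenvalues) have row sums of squares equal to 1 and column sums equal to the eigenvalues. If both sums come out as 1, the matrix being inspected may be the eigenvector matrix (for example scikit-learn's `components_`) rather than the loadings, though that is only a guess about the setup here.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in data (hypothetical): 500 observations, 4 correlated variables.
latent = rng.normal(size=(500, 1))
X = latent @ np.array([[0.9, 0.7, 0.4, 0.1]]) + rng.normal(size=(500, 4))

# z-scores: subtract each variable's mean, divide by its standard deviation.
Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)

# Correlation-based PCA via the eigendecomposition of the correlation matrix.
R = np.corrcoef(Z, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(R)
order = np.argsort(eigvals)[::-1]          # sort components by decreasing variance
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# Loadings: column k of the eigenvector matrix scaled by sqrt(eigenvalue k).
loadings = eigvecs * np.sqrt(eigvals)

# Direct check: correlation of every variable with every PC score.
scores = Z @ eigvecs
p = Z.shape[1]
corr = np.array([[np.corrcoef(Z[:, i], scores[:, k])[0, 1] for k in range(p)]
                 for i in range(p)])

print(np.allclose(corr, loadings))              # loadings are the correlations
print((eigvecs ** 2).sum(axis=0), (eigvecs ** 2).sum(axis=1))
print((loadings ** 2).sum(axis=1), (loadings ** 2).sum(axis=0), eigvals)
```

A squared loading near 1 then means that a single PC accounts for nearly all of that variable's variance, which matches the reading in the comment above.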

1 Answer

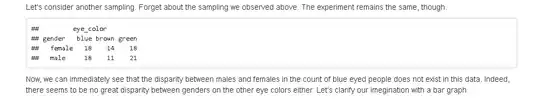

The correlations you refer to are used to determine which variables are important for interpreting a PC. While "significance" is not quite the right concept, "relative importance" is more easily addressed. You can compare which individual variables, and which pairs of variables (as sums or differences), matter most for a PC by using a variable pairs heatmap.

The following graph shows the variable pairs heatmap for interpreting the first baseline PC using the epi.bfi data example from the psych package in R. The diagonal shows absolute correlations between the individual variables and the PC; the upper triangle shows absolute correlations between the differences $Z_i - Z_j$ and the PC; the lower triangle shows absolute correlations between the sums $Z_i + Z_j$ and the PC.

In the figure, you can see that even though $PC_1$ is not highly correlated with the variable bdi, which measures depression, it is very highly correlated with the bdi + traitanx summate (r > 0.90), where traitanx measures anxiety. Thus, the first PC measures something that is closely related to a "depression with anxiety" scale, where people who are depressed with high anxiety are at one end of the scale, while people who are not depressed and have low anxiety are at the opposite end.
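One way to see why a sum can correlate more strongly with a PC than either variable does alone is the identity below. The notation is introduced here for illustration and is not taken from the cited paper: $a_{ik}$ is the correlation (loading) between the z-score $Z_i$ and $PC_k$, and $r_{ij}$ is the correlation between $Z_i$ and $Z_j$. Since covariance is additive and $\operatorname{Var}(Z_i \pm Z_j) = 2 \pm 2 r_{ij}$,

$$
\operatorname{corr}(Z_i \pm Z_j,\ PC_k) = \frac{a_{ik} \pm a_{jk}}{\sqrt{2\,(1 \pm r_{ij})}}
$$

With made-up numbers (not the epi.bfi values), two variables that each correlate 0.7 with the PC and 0.2 with each other give $1.4 / \sqrt{2.4} \approx 0.90$ for their sum, noticeably higher than either variable alone.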

Source: Westfall PH, Arias AL, Fulton LV. Teaching Principal Components Using Correlations. Multivariate Behav Res. 2017;52(5):648-660. doi:10.1080/00273171.2017.1340824

-

This is a great answer. Just two questions. Do $Z_i$ and $Z_j$ denote two different variables, and how do I calculate the sums and the differences between the individual variables? I used Python and scikit-learn to calculate the absolute correlations between each variable and each PC, but I do not know how to obtain the correlations between the PCs and the sums and differences of pairs of variables. – Delloman Sep 12 '20 at 14:23

-

This is a helpful answer. As per @ttnphns, could we get confirmation of what the $Z$ are? Is it min-max normalization, where you subtract the min and divide by the range? Also, what function generates the plot? – Single Malt Sep 12 '20 at 15:47

-

To answer all, the $Z_i$ are the usual z-scores that the principal component scores are constructed from. (Subtract the variable's mean, then divide by the variable's standard deviation.) Once you calculate those, you can just use the ordinary correlation function. – BigBendRegion Sep 12 '20 at 19:01
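Since the question mentions Python and scikit-learn, the recipe in the comment above might be sketched as follows. This is only an illustration under stated assumptions: the helper name `pair_correlation_matrix` and the synthetic data are hypothetical, and the layout (variables on the diagonal, differences above it, sums below it) follows the description in the answer rather than any code from the cited paper.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler


def pair_correlation_matrix(X, component=0):
    """Absolute correlations with one PC (hypothetical helper).

    Diagonal: |corr(Z_i, PC)|; upper triangle: |corr(Z_i - Z_j, PC)|;
    lower triangle: |corr(Z_i + Z_j, PC)|.
    """
    Z = StandardScaler().fit_transform(X)        # z-scores of each variable
    pc = PCA().fit_transform(Z)[:, component]    # scores on the chosen PC
    p = Z.shape[1]
    M = np.empty((p, p))
    for i in range(p):
        for j in range(p):
            if i == j:
                combo = Z[:, i]
            elif i < j:
                combo = Z[:, i] - Z[:, j]
            else:
                combo = Z[:, i] + Z[:, j]
            # Ordinary Pearson correlation between the combination and the PC.
            M[i, j] = abs(np.corrcoef(combo, pc)[0, 1])
    return M


# Synthetic stand-in data (made up for illustration): 300 rows, 3 variables.
rng = np.random.default_rng(0)
latent = rng.normal(size=(300, 1))
X = latent @ np.array([[0.8, 0.8, 0.3]]) + rng.normal(size=(300, 3))

M = pair_correlation_matrix(X)
print(np.round(M, 2))
```

The resulting matrix could then be passed to any heatmap routine, for example matplotlib's `imshow`. Note that a difference of two identical variables is constant, so its correlation would come out as NaN.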