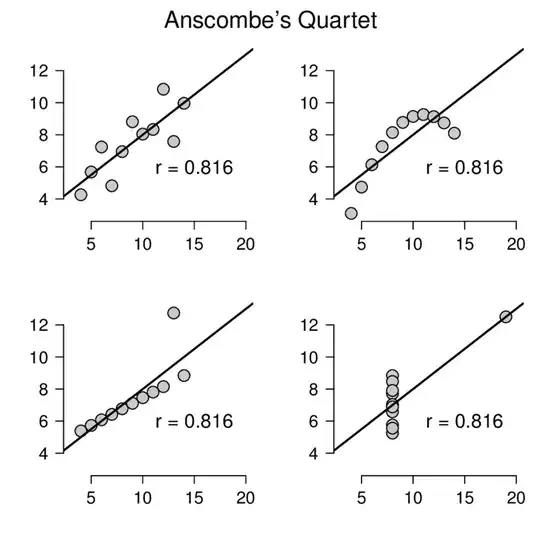

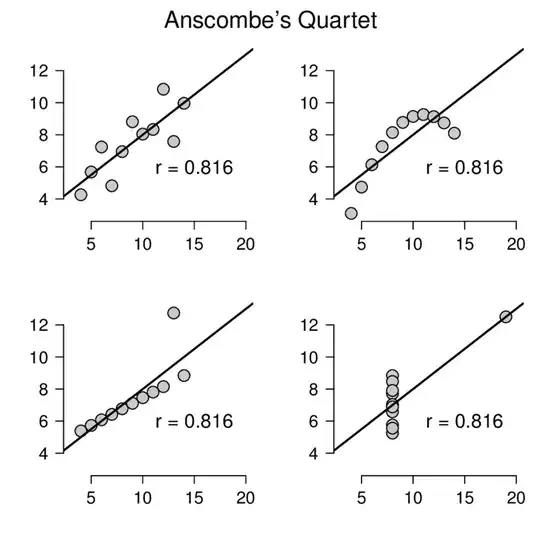

It is very difficult to achieve what you want programmatically because there are so many different forms of nonlinear associations. Even looking at correlation or regression coefficients will not really help. It is always good to refer back to Anscombe's quartet when thinking about problems like this:

Obviously the association between the two variables is completely different in each plot, but each has exactly the same correlation coefficient.

If you know a priori what the possible non-linear relations could be, then you could fit a series of nonlinear models and compare the goodness of fit. But if you don't know what the possible non-linear relations could be, then I can't see how it can be done robustly without visually inspecting the data. Cubic splines could be one possibility but then it may not cope well with logarithmic, exponential and sinusoidal associations, and could be prone to overfitting. EDIT: After some further thought, another approach would be to fit a generalised additive model (GAM) which would provide good insight for many nonlinear associations, but probably not sinusoidal ones.

Truly, the best way to do what you want is visually. We can see instantly what the relations are in the plots above, but any programmatic approach such as regression is bound to have situations where it fails miserably.

So, my suggestion, if you really need to do this is to use a classifier based on the image of the bivariate plot.

create a dataset using randomly generated data for one variable, from a randomly chosen distribution.

Generate the other variable with a linear association (with random slope) and add some random noise. Then choose at random a nonlinear association and create a new set of values for the other variable. You may want to include purely random associations in this group.

Create two bivariate plots, one linear the other nonlinear from the data simulated in 1) and 2). Normalise the data first.

Repeat the above steps millions of times, or as many times as your time scale will allow

Create a classifier, train, test and validate it, to classify linear vs nonlinear images.

For your actual use case, if you have a different sample size to your simulated data then sample or re-sample to get obtain the same size. Normalise the data, create the image and apply the classifier to it.

I realise that this is probably not the kind of answer you want, but I cannot think of a robust way to do this with regression or other model-based approach.

EDIT: I hope no one is taking this too seriously. My point here is that, in a situation with bivariate data, we should always plot the data. Trying to do anything programatically, whether it is a GAM, cubic splines or a vast machine learning approach is basically allowing the analyst to not think, which is a very dangerous thing.

Please always plot your data.