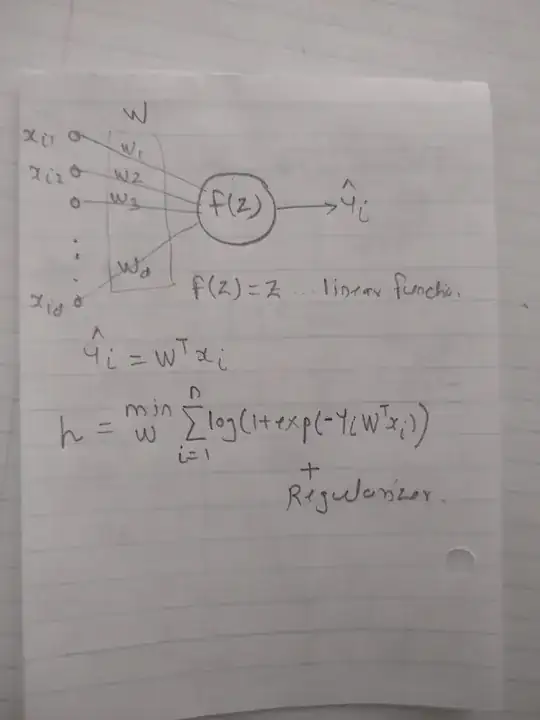

Suppose a single perceptron is made to work like logistic regression in the way shown in the image.

How correct is it to say that I made a perceptron work as logistic regression?

The question came to mind because the activation function of a perceptron is a step function, and its learning is not done by backpropagation: https://en.wikipedia.org/wiki/Perceptron

Also, if the activation function is changed and training is done by backpropagation, is it still a perceptron?
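
To make the comparison concrete, here is a minimal sketch of what I have in mind. It is my own illustration: the names `step`, `sigmoid`, `predict`, `train` and the AND-gate data are hypothetical. It assumes a single unit trained one sample at a time, and log loss in the sigmoid case. Under those assumptions the weight update has the same form in both cases, and only the activation differs.

```python
import math

def step(z):
    # Classic perceptron activation: hard threshold at 0.
    return 1.0 if z >= 0 else 0.0

def sigmoid(z):
    # Logistic regression activation: smooth output in (0, 1).
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x, activation):
    # Weighted sum of inputs plus bias, passed through the activation.
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return activation(z)

def train(data, activation, lr, epochs):
    # Per-sample update: w += lr * (y - output) * x.
    # With `step` this is the perceptron learning rule.
    # With `sigmoid` it is stochastic gradient descent on log loss
    # (assumption: log loss, so the sigmoid derivative cancels out).
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            err = y - predict(w, b, x, activation)
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b

# Toy, linearly separable data (AND gate), labels in {0, 1}.
data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

w_p, b_p = train(data, step, lr=1.0, epochs=20)       # perceptron
w_l, b_l = train(data, sigmoid, lr=0.5, epochs=2000)  # logistic unit

for x, y in data:
    hard = predict(w_p, b_p, x, step)     # a class label, 0.0 or 1.0
    prob = predict(w_l, b_l, x, sigmoid)  # a probability-like score
    print(x, y, hard, round(prob, 3))
```

The step version outputs hard labels and stops changing once every sample is classified correctly, while the sigmoid version outputs scores between 0 and 1 and keeps adjusting the weights.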