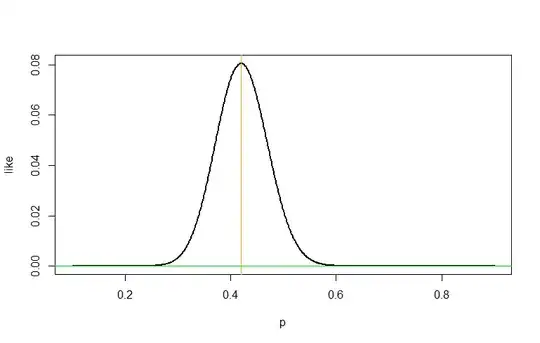

Ever since I first learned about it, I have been convinced that maximum likelihood estimation (MLE) is a very nice method for estimating the parameter of a probability distribution. However, while thinking about the intuition behind Fisher information, I came across the question "What kind of information is Fisher information?" and found myself a little confused. It says we can think of Fisher information as a measure of the curvature of the log likelihood, if we write it as

$$
I(\theta^{\star}) = -\left[\mathbb{E}_{X\sim\theta^{\star}}\frac{\partial^2}{\partial\theta^2}\log f(X;\theta)\right]\Bigg|_{\theta=\theta^{\star}}
$$

Under suitable regularity conditions, we may interchange integration and differentiation to get

$$
I(\theta^{\star}) = -\left[\frac{\partial^2}{\partial\theta^2}\mathbb{E}_{X\sim\theta^{\star}}\log f(X;\theta)\right]\Bigg|_{\theta=\theta^{\star}}
$$

Then we can interpret $I(\theta^{\star})$ as the curvature of the expected log likelihood around $\theta^{\star}$ when the data actually follow $\theta^{\star}$.
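To make the two formulas concrete, here is a small numerical sketch. It assumes a Bernoulli model, $f(x;\theta)=\theta^x(1-\theta)^{1-x}$, which is my own choice for illustration and not part of the question. It computes the first formula (negative expectation of the second derivative) exactly by summing over $x\in\{0,1\}$, approximates the second formula (negative second derivative of the expected log likelihood) with a central finite difference, and compares both with the known Bernoulli Fisher information $1/(\theta^{\star}(1-\theta^{\star}))$. The function names and the step size `h` are hypothetical.

```python
import math

# Assumed model for illustration only: X ~ Bernoulli(theta), so
# log f(x; theta) = x*log(theta) + (1 - x)*log(1 - theta).


def expected_log_lik(theta, theta_star):
    """E_{X ~ theta_star}[log f(X; theta)] as a function of theta."""
    return theta_star * math.log(theta) + (1 - theta_star) * math.log(1 - theta)


def fisher_from_expected_hessian(theta_star):
    """First formula: -E[d^2/dtheta^2 log f(X; theta)] at theta = theta_star."""
    total = 0.0
    for x, prob in ((1, theta_star), (0, 1 - theta_star)):
        # Second derivative of log f(x; theta), evaluated at theta_star.
        d2 = -x / theta_star**2 - (1 - x) / (1 - theta_star) ** 2
        total += prob * d2
    return -total


def fisher_from_curvature(theta_star, h=1e-4):
    """Second formula: -(d^2/dtheta^2 of the expected log likelihood) at
    theta = theta_star, approximated by a central finite difference."""
    g = lambda t: expected_log_lik(t, theta_star)
    second = (g(theta_star + h) - 2 * g(theta_star) + g(theta_star - h)) / h**2
    return -second


def fisher_closed_form(theta_star):
    """Known Fisher information of one Bernoulli observation."""
    return 1.0 / (theta_star * (1 - theta_star))


if __name__ == "__main__":
    for p in (0.1, 0.3, 0.5):
        print(
            f"theta*={p}: expected Hessian={fisher_from_expected_hessian(p):.6f}, "
            f"curvature={fisher_from_curvature(p):.6f}, "
            f"closed form={fisher_closed_form(p):.6f}"
        )
```

In this model all three numbers agree up to finite-difference error, which is what the interchange of integration and differentiation predicts.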

So here is my question: is the expected log likelihood $\mathbb{E}_{X\sim\theta^{\star}}\log f(X;\theta)$, viewed as a function of $\theta$, maximized at $\theta=\theta^{\star}$? If not, I think that would weaken the case for MLE being a reasonable method. Is this just a needless worry, or have I misunderstood something about Fisher information?