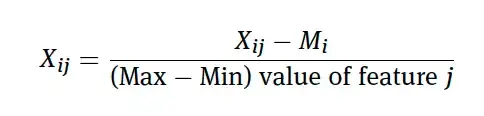

As part of the data pre-processing in the paper Cloud Security: LKM and Optimal Fuzzy

System for Intrusion Detection in Cloud

Environment numeric attributes were normalized using the following equation.

Where:

- Xij is a value to be normalised

- Mi is the mean value of the feature

- Max, Min are maximum and minimum values of the feature respectively.
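Since the equation itself is only available as an image, here is my reading of it in text form, based on the definitions above. This form is an assumption on my part, and the primed symbol for the normalized value is my own notation, not the paper's:

$$X'_{ij} = \frac{X_{ij} - M_i}{\mathrm{Max} - \mathrm{Min}}$$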

From what I understand, there is a difference between standardization and scaling, and the equation above represents neither. Standardization, as far as I know, requires dividing by the standard deviation, while Min-Max scaling requires subtracting the minimum value before dividing by the value range, as explained here.
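To make the comparison concrete, here is a minimal sketch of the three transformations on a made-up feature column. It assumes the paper's equation subtracts the mean and divides by the range (Max - Min), which is my reading of the definitions given; the data and all variable names are hypothetical.

```python
from statistics import fmean, pstdev

# Hypothetical feature column, used only for illustration.
x = [2.0, 4.0, 6.0, 8.0, 10.0]

mean = fmean(x)
std = pstdev(x)  # population standard deviation
x_min, x_max = min(x), max(x)
value_range = x_max - x_min

# Assumed form of the paper's equation: subtract the mean, divide by the range.
paper_scaled = [(v - mean) / value_range for v in x]

# Standardization (z-score): subtract the mean, divide by the standard deviation.
standardized = [(v - mean) / std for v in x]

# Min-Max scaling: subtract the minimum, divide by the range.
min_max_scaled = [(v - x_min) / value_range for v in x]

print(paper_scaled)                         # [-0.5, -0.25, 0.0, 0.25, 0.5]
print([round(v, 3) for v in standardized])  # [-1.414, -0.707, 0.0, 0.707, 1.414]
print(min_max_scaled)                       # [0.0, 0.25, 0.5, 0.75, 1.0]
```

On this toy data, the assumed paper formula gives the Min-Max result shifted by a constant, so the values are centred on zero and span a width of one instead of lying in [0, 1].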

As I couldn't find any source mentioning this equation, I don't understand whether it is simply wrong or whether it has some specific advantages.