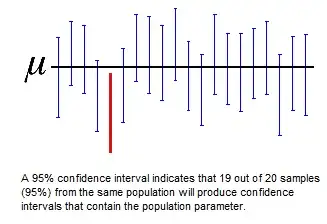

My second question is, say you have one of these 95 confidence intervals. Aside from using 95% to get the 1.96 Z-score, how else is the 95% manifested in this confidence interval?

The 95% is manifested in the following way

Probability statement: "95% confidence interval means there is a 95% chance that the true parameter falls in this range"

There are misconceptions about this statement, but the statement itselve is not a misconception.

Whether or not the probability statement is true depends on how you condition the probability. It depends on the interpretation of what is meant by probability/chance.

You can express this probability 'the interval containing the true parameter' conditional on the observation but also conditional on the true parameter.

So yes, the probability statement is wrong if the probability is wrongly interpreted.

But no, the probability statement is not wrong if the probability is correctly interpreted.

Example

from https://stats.stackexchange.com/a/481937/ and https://stats.stackexchange.com/a/444020/

Say we measure $X$ in order to determine/estimate $\theta$

$$X \sim N(\theta,1) \quad \text{where} \quad \theta \sim N(0,\tau^2)$$

Here $\theta$ follows a distribution as well. (You can imagine for instance that $\theta$ is some measure of intelligence which differs from person to person where $N(0,\tau^2)$ is the distribution of $\theta$ among all persons. And $X$ is the result from some intelligence test).

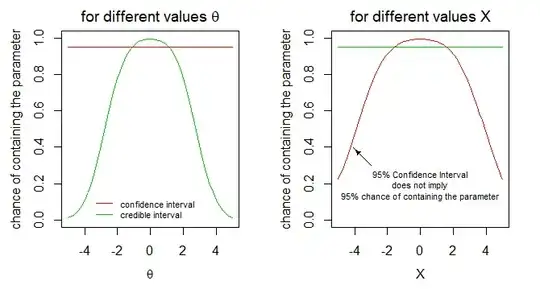

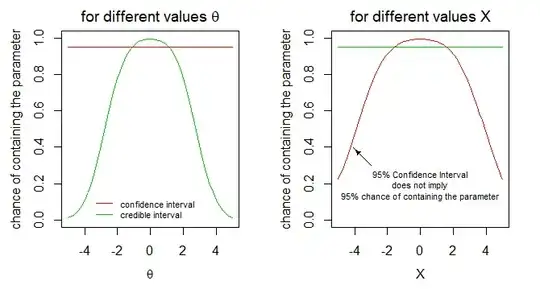

Below is a simulation of 20k cases.

In the image we draw lines for the borders of a 95% confidence interval (red) and a 95% credible interval (green) as a function of the observation $X$. (For more details about the computation of those borders see the reference)

We can consider 'the probability of an interval containing the true parameter' as conditional on the observation $X$ or conditional on the true parameter $\theta$. We have plotted the two different interpretations for both type of intervals. Only in the right one (conditional on $X$), the probability statement about the confidence interval is false.

.

.

Practical situation that explains the relevance of this view: Say in the above example, which could be about an intelligence test, we have done the test in order to select people with high intelligence, and we have a sub sample of people that tested with a specific range of test observation $X$ (e.g. we selected candidates with a high intelligence for some job). Now we may wonder for how many of these people their 95% confidence interval contains the true parameter.... well, it won't be 95% because the confidence interval does not contain the parameter in 95% of the cases when we condition on a particular observation (or range of observations).

Misconceptions about the misconception

It is often mentioned that the probability statement is not true because after the observation the statement is either 100% true or 0% true. The parameter is either in or outside the interval and it can not be both. But we do not know which of the two cases it is, and we express a probability for our data-based certainty/uncertainty about it (based on some assumptions).

(Note that this argument about 'the probability statement being false' would work for any type of interval, but somehow because the confidence interval relates to a frequentist interpretation of probability the probability statement is disallowed.)

It is perfectly fine to speak about the probability that 'the parameter is within some interval' (Or if you feel uncomfortable with this expression then you can turn it around and say, the probability that 'the interval is around the parameter') Even if the parameter would be a constant, the interval is not a constant (but a random variable), thus it is fine to make probability statements about relations between the two. (and if this argument is still not comfortable then one could also adopt a propensity probability interpretation instead of a frequentist interpretation of probability, a frequentist interpretation is not necessary for confidence intervals)

Example: from an urn with 10 red and 10 blue balls you 'randomly' pick a ball without looking at it. Then you could say that you have 50% probability to have picked red and 50% to have picked blue. Even though in the (unknown) reality it is either 100% blue or 100% red and not really a random pick but a deterministic process.

Pedantic notes

The interval that contains the true value $\beta_i$ in 95% of all samples is given by the expression...

Is this wording correct?

- It is not the interval. There will be multiple intervals with the same property.

- More specifically it is an interval that contains the true value $\beta_i$ in 95% of all samples independent from the true value of $\beta_i$