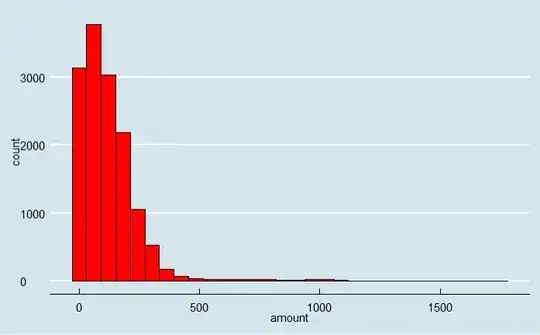

I have a positively skewed continuous data (no zeros), representing transactions by amount.

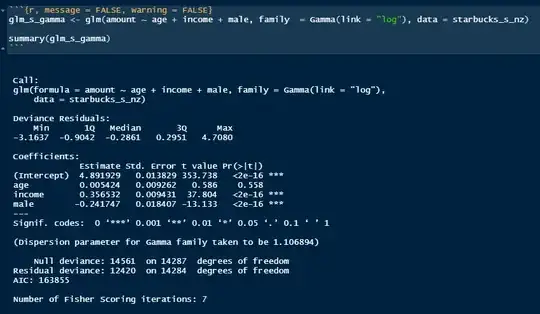

Variables age and income were standardized, amount - not.

I tried to use gamma distribution with Gamme(link = log).

I have Residual deviance: 12420 on 14284 degrees of freedom.

My residuals output:

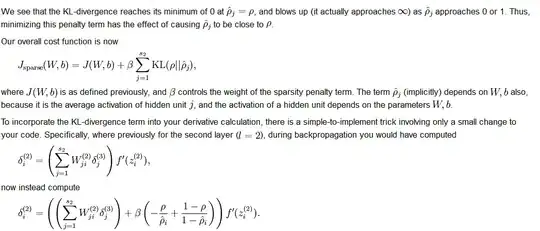

What else can I try to improve this model?

I have seen an interesting question about the same type of data and people suggested several less common models (I never heard about them) and cannot find than question now.