I have calculated a multi-level model with a biomarker as dependent variable (which was measured three time), a 5-level factor variable called „module“ as predictor (which is an intervention including a control group) and several other covariates.

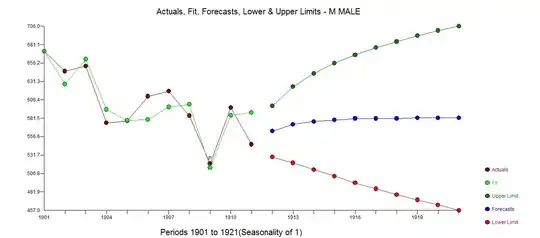

The F-Omnibus test of my multi-level model revealed a significant main effect of the factor „modules“. That’s why, I calculated post hocs, i.e. pairwise comparisons for the main effect „module“ with the package "emmeans" as well as with the "multcomp"-package in R. These show surprisingly different results (see code and results below). I already read that multcomp works with z-statistics (and not t-statistics like emmeans) and that p-values and CI-intervals are rather displayed smaller for smaller samples (<30). For larger samples (i.e. 30 persons and more), there should be no difference. In total, I have 300 persons with app. ~40 persons in each group, in the control group 120 (unbalanced study). So, I’d say I have a larger sample and would expect similar results between the two packages. Interestingly, when I look at the results of my MLM model (see below), also using T-statistics, they reveal the same significant effects as the „multcomp“ package. Further, the results oft the „multcomp“ package make also more sense in terms when I look at my raw data (see graph). I have also tried different adjustment methods for p-correction or by using no p-correction at all and the same df-method, but that reveals the same distinct results of the two packages.

Do you know why I get such different results with emmeans and multcomp package? Which one would you take for your final results?

Any help or idea is highly appreciated.

Codes:

#multcomp

summary(glht(M3_ALL, linfct = mcp(module = "Tukey")), test = adjusted("holm"))

#emmeans

emm1 = emmeans(M3_ALL, specs = pairwise ~ module)

emm1$contrasts

Results:

0 = control group

Other numbers: different interventions

#multcomp

Simultaneous Tests for General Linear Hypotheses

Multiple Comparisons of Means: Tukey Contrasts

Fit: lmer(formula = bio ~ bl2peak + peak2rec + module + bl2peak *

module + peak2rec * module + +age + hor +

(1 | id), data = data_set)

Linear Hypotheses:

Estimate Std. Error z value Pr(>|z|)

1 - 0 == 0 0.36031 0.11554 3.119 0.0164 *

2 - 0 == 0 -0.32786 0.11494 -2.852 0.0260 *

3 - 0 == 0 -0.07544 0.11623 -0.649 1.0000

4 - 0 == 0 -0.05128 0.11587 -0.443 1.0000

2 - 1 == 0 -0.68817 0.13859 -4.966 0.00000685 ***

3 - 1 == 0 -0.43575 0.13983 -3.116 0.0164 *

4 - 1 == 0 -0.41159 0.13941 -2.952 0.0221 *

3 - 2 == 0 0.25242 0.13917 1.814 0.2788

4 - 2 == 0 0.27658 0.13888 1.991 0.2322

4 - 3 == 0 0.02416 0.14013 0.172 1.0000

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(Adjusted p values reported -- holm method)

# emmeans

contrast estimate SE df t.ratio p.value

0 - 1 -0.1440 0.106 321 -1.359 0.6542

0 - 2 0.3169 0.105 323 3.029 0.0221

0 - 3 0.2048 0.106 318 1.929 0.3040

0 - 4 0.0802 0.105 317 0.760 0.9417

1 - 2 0.4609 0.127 323 3.642 0.0029

1 - 3 0.3487 0.128 320 2.725 0.0526

1 - 4 0.2241 0.127 320 1.761 0.3982

2 - 3 -0.1121 0.127 321 -0.885 0.9023

2 - 4 -0.2367 0.126 321 -1.877 0.3318

3 - 4 -0.1246 0.128 317 -0.977 0.8656

Results are averaged over the levels of: bl2peak, peak2rec, hor

Degrees-of-freedom method: kenward-roger

P value adjustment: tukey method for comparing a family of 5 estimates

# multi level model (R setting default to contr.treatment with 0/controlgroup as reference category):

Estimate Std. Error df t value Pr(>|t|)

(Intercept) 0.57833981 0.07225305 382.75265475 8.004 0.0000000000000145 ***

bl2peak 0.00348362 0.00075672 552.81449219 4.604 0.0000051566462762 ***

peak2rec -0.00384072 0.00110413 552.93007226 -3.479 0.000544 ***

module1 0.36031070 0.11553583 439.60198129 3.119 0.001937 **

module2 -0.32785914 0.11494352 450.22174699 -2.852 0.004540 **

module3 -0.07543983 0.11623406 440.63645964 -0.649 0.516655

module4 -0.05127913 0.11586632 445.20852853 -0.443 0.658291

age 0.00576536 0.00401484 278.99239058 1.436 0.152120

hor1 0.06274631 0.10814214 280.72152598 0.580 0.562231

hor2 0.48812486 0.11532236 280.23372757 4.233 0.0000313271007368 ***

hor3 0.01833652 0.07904604 278.57996999 0.232 0.816730

bl2peak:module1 0.00318217 0.00144669 551.91605778 2.200 0.028247 *

bl2peak:module2 -0.00038689 0.00144282 556.55214625 -0.268 0.788685

bl2peak:module3 0.00121872 0.00145914 551.91030700 0.835 0.403951

bl2peak:module4 0.00013595 0.00145543 552.78654470 0.093 0.925613

peak2rec:module1 -0.00501776 0.00213487 554.61415676 -2.350 0.019104 *

peak2rec:module2 -0.00007187 0.00212124 553.65862032 -0.034 0.972983

peak2rec:module3 -0.00398714 0.00211343 551.94675681 -1.887 0.059742 .

peak2rec:module4 -0.00108719 0.00210806 552.82306606 -0.516 0.606251

(converted from answer)

Thank you very much for your fast and detailed answer! It helped a lot. It was indeed the interaction effect. When I calculated the model without the interaction effect, both packages revealed the same results.

However, this command

summary(glht(mod, mcp(module = "Tukey", interaction_average = TRUE)))

did not work for me. I got this warning massage.

Warning message:

In mcp2matrix(model, linfct = linfct) :

covariate interactions found -- default contrast might be inappropriate

Changing contrasts just worked for the variable "module" of my interactions variables (interactions: bl2peak:module; peak2rec:module). The other ones (bl2peak and peak2rec) are numeric variables but only containing 0 and -70 or 0 and +47 values (I’d like them to stay numeric variables because of model caluculation). For the numeric variables, I couldn’t change the contrast setting and changing contrasts just for module didn’t lead to the disappearance of the warning message.

So I assume the different results of emmeans and multcomp in my case were not only because of the contrast settings but rather also about the numeric variable containing so many 0 values which led probably to the result of the interaction effect being 0 in multcomp package (as you have explained with both contrasts being contr.treatment above).