I am trying to learn gradient descent and in the course of so I am trying to find the optimal m and c value for my model, for $y=mx+c$

For that, I have plotted the MSE using the below code in python

#building the model

#lets start with random value of m and c

m=0

c=0

l=0.0001 #l is the learning rate

n=float(len(X_train)) # n=number of training data, we are converting it to float since we will need to divide n

mse=[]

for i in range (0,4000):

Y_pred=m*X_train+c

mse.append(numpy.sum((Y_train-Y_pred)**2)/n)

D_m= (-2/n) * sum(X_train*(Y_train-Y_pred))

D_c= (-2/n) * sum(Y_train-Y_pred)

m=m-l*D_m

c=c-l*D_c

plt.plot(mse)

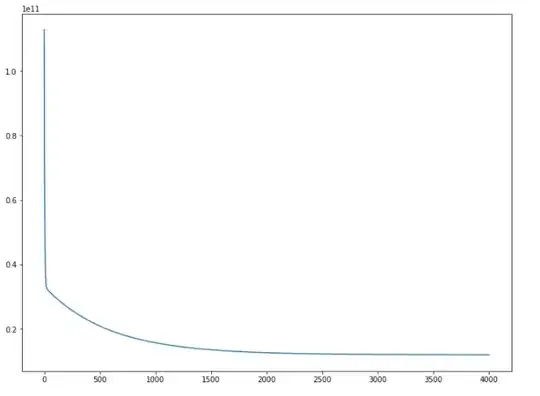

And the output I am getting for this is this-->

So it seems that the SME becomes more or less same after 2000, and remains more or less same till 4000

So I am taking m and c values that I have got in 4000th iteration. From the graph, we can see that the MSE value is lesser than 0.2.

But to my surprise when I do

mse[-1]

I get a HUGE NUMBER as Answer

The answer that I get for mse[-1] is 12041739532.188858

And for this reason, my final model performs absolutely worst, producing something like this as the output on the training set.

It will be very helpful if someone can guide me on why this is happening with the MSE value. Thank you.