Multicollinearity will only be a problem if X and Z are perfectly correlated (a correlation of 1). In that case, X and Z can be combined into a single variable, which will provide an unbiased estimate. We can see this with a simple simulation:

> library(magrittr) # provides the %>% pipe used below

> set.seed(1)

> N <- 100

> Z <- rnorm(N)

> X <- Z # perfect collinearity

> Y <- 4 + X + Z + rnorm(N)

> lm(Y ~ X) %>% summary()

Call:

lm(formula = Y ~ X)

Residuals:

Min 1Q Median 3Q Max

-1.8768 -0.6138 -0.1395 0.5394 2.3462

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 3.96231 0.09699 40.85 <2e-16 ***

X 1.99894 0.10773 18.56 <2e-16 ***

which is biased (the true coefficient of X is 1). But adjusting for Z will not work due to perfect collinearity:

> lm(Y ~ X + Z) %>% summary()

Call:

lm(formula = Y ~ X + Z)

Residuals:

Min 1Q Median 3Q Max

-1.8768 -0.6138 -0.1395 0.5394 2.3462

Coefficients: (1 not defined because of singularities)

Estimate Std. Error t value Pr(>|t|)

(Intercept) 3.96231 0.09699 40.85 <2e-16 ***

X 1.99894 0.10773 18.56 <2e-16 ***

Z NA NA NA NA

So we combine X and Z into a new variable, W, and condition on W only:

> W <- X + Z

> lm(Y ~ W) %>% summary()

Call:

lm(formula = Y ~ W)

Residuals:

Min 1Q Median 3Q Max

-1.8768 -0.6138 -0.1395 0.5394 2.3462

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 3.96231 0.09699 40.85 <2e-16 ***

W 0.99947 0.05386 18.56 <2e-16 ***

and we obtain an unbiased estimate.
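To spell out why the slope is about 2 when regressing on X alone but about 1 when regressing on W, it may help to substitute Z = X into the data generating process used in the simulation. Here ε is just shorthand for the `rnorm(N)` noise term, a symbol introduced for this sketch:

```latex
\begin{aligned}
Y &= 4 + X + Z + \varepsilon \\
  &= 4 + 2X + \varepsilon && \text{(since } Z = X\text{)} \\
  &= 4 + W + \varepsilon  && \text{(since } W = X + Z = 2X\text{)}
\end{aligned}
```

So the slope on X alone absorbs both effects (2), while the slope on W recovers the common per-variable effect (1). Because W is just X rescaled by 2, the standard error halves as well (0.10773 versus 0.05386), and the t value is unchanged.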

Regarding your point:

this model causes the b coefficient of x to be smaller or close to zero?

No, that should not be the case. If the correlation is high, the estimate may lose some precision, but should still be unbiased. Again we can see that with a simulation:

> nsim <- 1000

> vec.X <- numeric(nsim)

> vec.cor <- numeric(nsim)

> #

> set.seed(1)

> for (i in 1:nsim) {

+

+ Z <- rnorm(N)

+ X <- Z + rnorm(N, 0, 0.3) # high collinearity

+ vec.cor[i] <- cor(X, Z)

+ Y <- 4 + X + Z + rnorm(N)

+ m0 <- lm(Y ~ X + Z)

+ vec.X[i] <- coef(m0)[2]

+

+ }

> mean(vec.X)

[1] 1.00914

> mean(vec.cor)

[1] 0.9577407
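The loss of precision mentioned above can be made visible by comparing the spread of the estimates at two levels of collinearity. The following is a minimal sketch under the same data generating process; the helper `sim_coef_x` and its argument `noise_sd` are hypothetical names introduced here for illustration, and the second noise level (1) is an arbitrary choice:

```r
# Sketch: mean and spread of the estimated X coefficient at a given noise level.
# sim_coef_x and noise_sd are illustrative names, not part of the original answer.
sim_coef_x <- function(noise_sd, nsim = 1000, N = 100) {
  est <- numeric(nsim)
  for (i in seq_len(nsim)) {
    Z <- rnorm(N)
    X <- Z + rnorm(N, 0, noise_sd)  # smaller noise_sd means higher collinearity
    Y <- 4 + X + Z + rnorm(N)
    est[i] <- coef(lm(Y ~ X + Z))[["X"]]
  }
  c(mean = mean(est), sd = sd(est))
}

set.seed(1)
res_high <- sim_coef_x(0.3)  # theoretical cor(X, Z) is about 0.96
res_low  <- sim_coef_x(1)    # theoretical cor(X, Z) is about 0.71
rbind(high = res_high, low = res_low)
```

In both rows the mean should sit close to the true value of 1, while the `sd` column should be noticeably larger in the high-collinearity row: the estimate stays unbiased but becomes less precise.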

Note that in the first example above we knew the data generating process, and because we knew that X and Z had equal influence, a simple sum of both variables worked. In practice, however, we won't know the data generating process. Therefore, if we do have perfect collinearity (not likely in practice, of course), we could use the same approach as in the second simulation above and add some small random error to Z, which will uncover the unbiased estimate for X.

Does your approach differ if the correlation is moderate or weak?

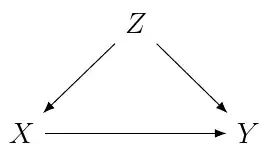

If the correlation is moderate or weak, there should be no problem in conditioning on Z.
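As a rough check of the moderate case, the simulation can be rerun with a weaker link between X and Z, comparing the model that conditions on Z with one that omits it. This is a sketch only: the 0.5 multiplier and the names `vec.adj` and `vec.unadj` are assumptions chosen for illustration, giving a theoretical correlation of about 0.45.

```r
set.seed(1)
N <- 100
nsim <- 1000
vec.adj   <- numeric(nsim)  # X coefficient when conditioning on Z
vec.unadj <- numeric(nsim)  # X coefficient when Z is omitted
vec.cor   <- numeric(nsim)

for (i in 1:nsim) {
  Z <- rnorm(N)
  X <- 0.5 * Z + rnorm(N)   # moderate collinearity (assumed values)
  vec.cor[i] <- cor(X, Z)
  Y <- 4 + X + Z + rnorm(N)
  vec.adj[i]   <- coef(lm(Y ~ X + Z))[["X"]]
  vec.unadj[i] <- coef(lm(Y ~ X))[["X"]]
}

mean(vec.cor)    # average sample correlation between X and Z
mean(vec.adj)    # should be close to the true value of 1
mean(vec.unadj)  # should be well above 1, since Z is omitted
```

Conditioning on Z should recover a coefficient near 1, whereas leaving Z out pushes the estimate upwards, which is the omitted-variable bias that the adjustment is meant to remove.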