The normalising constant in the posterior is the marginal density of the sample in the Bayesian model.

When writing the posterior density as $$p(\theta |D) = \frac{\overbrace{p(D|\theta)}^\text{likelihood }\overbrace{p(\theta)}^\text{ prior}}{\underbrace{\int p(D|\theta)p(\theta)\,\text{d}\theta}_\text{marginal}}$$

[which unfortunately uses the same symbol $p(\cdot)$ with different meanings], this density is conditional upon $D$, with

$$\int p(D|\theta)p(\theta)\,\text{d}\theta=\mathfrak e(D)$$

being the marginal density of the sample $D$. Obviously, conditional on a realisation of $D$, $\mathfrak e(D)$ is constant, while, as $D$ varies, so does $\mathfrak e(D)$. In probabilistic terms,

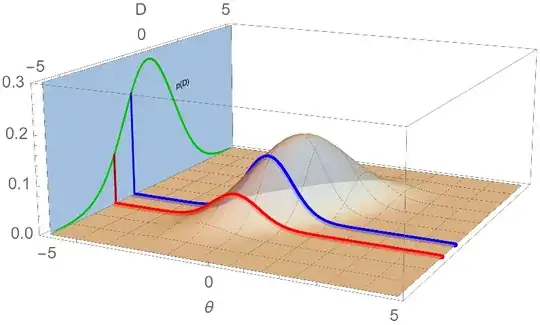

$$p(\theta|D) \mathfrak e(D) = p(D|\theta) p(\theta)$$

is the joint distribution density of the (random) pair $(\theta,D)$ in the Bayesian model [where both $D$ and $\theta$ are random variables].

The statistical meaning of $\mathfrak e(D)$ is one of "evidence" (or "prior predictive" or yet "marginal likelihood") about the assumed model $p(D|\theta)$. As nicely pointed out by Ilmari Karonen, this is the density of the sample prior to observing it and with the only information on the parameter $\theta$ provided by the prior distribution. Meaning that, the sample $D$ is obtained by first generating a parameter value $\theta$ from the prior, then generating the sample $D$ conditional on this realisation of $\theta$.

By taking the average of $p(D|\theta)$ across values of $\theta$, weighted by the prior $p(\theta)$, one produces a numerical value that can be used to compare this model [in the statistical sense of a family of parameterised distributions with unknown parameter] with other models, i.e. other families of parameterised distributions with unknown parameter. The Bayes factor is a ratio of such evidences.

For instance, if $D$ is made of a single obervation, say $x=2.13$, and if one wants to compare Model 1, a Normal (distribution) model, $X\sim \mathcal N(\theta,1)$, with $\theta$ unknown, to Model 2, an Exponential (distribution) model, $X\sim \mathcal E(\lambda)$, with $\lambda$ unknown, a Bayes factor would derive both evidences

$$\mathfrak e_1(x) = \int_{-\infty}^{+\infty} \frac{\exp\{-(x-\theta)^2/2\}}{\sqrt{2\pi}}\text{d}\pi_1(\theta)$$

and $$\mathfrak e_2(x) = \int_{0}^{+\infty} \lambda\exp\{-x\lambda\}\text{d}\pi_2(\lambda)$$

To construct such evidences, one need set both priors $\pi_1(\cdot)$ and $\pi_2(\cdot)$. For illustration sake, say

$$\pi_1(\theta)=\frac{\exp\{-\theta^2/2\}}{\sqrt{2\pi}}\quad\text{and}\quad\pi_2(\lambda)=e^{-\lambda}$$

Then

$$\mathfrak e_1(x) = \frac{\exp\{-x^2/4\}}{\sqrt{4\pi}}\quad\text{and}\quad\mathfrak e_2(x) = \frac{1}{1+x}$$

leading

$$\mathfrak e_1(2.13) = 0.091\quad\text{and}\quad\mathfrak e_2(2.13) = 0.32$$

which gives some degree of advantage to Model 2, the Exponential distribution model.