Normal data with $\mu$ and $\sigma$ both unknown. When both parameters are unknown, the usual result is $(n-1)S^2/\sigma^2 \sim \mathsf{Chisq}(\nu=n-1),$ where $S^2$ is the sample variance. If you know that the population is $\mathsf{Norm}(\mu=0,\sigma),$ then there is no point in estimating $\mu$ by $\bar X.$ Furthermore, if you then ignore $\bar X,$ the distribution theory is a little different, using $\mathsf{Chisq}(\nu=n)$ instead of $\mathsf{Chisq}(\nu=n-1).$

Normal data with $\mu$ known and $\sigma$ unknown. In general, for a random sample from $\mathsf{Norm}(\mu=\mu_0,\sigma),$ with $\mu_0$ known, you have $Z_i = \frac{X_i-\mu_0}{\sigma} \sim \mathsf{Norm}(0,1),$ thus $Z_i^2 = \frac{(X_i-\mu_0)^2}{\sigma^2} \sim \mathsf{Chisq}(\nu = 1),$ and

$$\sum_{i=1}^n Z_i^2 = \sum_{i=1}^n\frac{(X_i-\mu_0)^2}{\sigma^2} \sim\mathsf{Chisq}(\nu=n).$$

Then with $\mu_0 = 0$ and $V =\frac{1}{n}\sum_{i=1}^n X_i^2,$ you have

$\frac{nV}{\sigma^2} \sim\mathsf{Chisq}(\nu=n).$
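A short worked sketch may help connect the two cases. It uses the standard sample mean $\bar X$ and sample variance $S^2$, and writes $L$ and $U$ for the quantiles that cut 2.5% of the probability from the lower and upper tails of $\mathsf{Chisq}(n)$. Splitting the sum of squares about $\bar X$ gives

$$\sum_{i=1}^n X_i^2 = \sum_{i=1}^n (X_i-\bar X)^2 + n\bar X^2, \quad\text{so that}\quad \frac{nV}{\sigma^2} = \frac{(n-1)S^2}{\sigma^2} + \frac{n\bar X^2}{\sigma^2}.$$

For normal data with $\mu = 0,$ the two terms on the right are independent, with distributions $\mathsf{Chisq}(n-1)$ and $\mathsf{Chisq}(1),$ which accounts for the extra degree of freedom when $\mu$ is known. Inverting the pivot then gives the confidence interval for $\sigma^2$:

$$0.95 = P\left(L \le \frac{nV}{\sigma^2} \le U\right) = P\left(\frac{nV}{U} \le \sigma^2 \le \frac{nV}{L}\right).$$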

Examples. As a practical application, suppose you have $n=10$ observations from $\mathsf{Norm}(\mu=0,\sigma=5),$ you don't know either $\mu$ or $\sigma,$ and you want a 95% confidence interval for $\sigma^2.$ [Using R.]

set.seed(803)

x = rnorm(10, 0, 5)

a = mean(x); s = sd(x)

a; s

[1] -0.460746

[1] 6.010824

If you don't know either $\mu$ or $\sigma,$ then a 95% CI for $\sigma^2$ is of the form $\left(\frac{9S^2}{U},\, \frac{9S^2}{L}\right) = (17.1, 120.4),$ where $L$ and $U$ cut 2.5% of the probability from the lower and upper tails, respectively, of $\mathsf{Chisq}(9).$ The corresponding 95% CI for $\sigma$ is $(4.13, 10.97),$ which does happen to include $\sigma = 5.$

CI = 9*s^2/qchisq(c(.975,.025), 9); CI

[1] 17.09373 120.41598

sqrt(CI)

[1] 4.134456 10.973422

However, if you know that $\mu = 0$ and want a 95% CI for $\sigma^2,$ then the CI is of the form $\left(\frac{10V}{U},\, \frac{10V}{L}\right) = (16.0, 100.8),$ where $L$ and $U$ cut 2.5% of the probability from the lower and upper tails, respectively, of $\mathsf{Chisq}(10).$ The corresponding 95% CI for $\sigma$ is $(4.00, 10.04).$ Notice that knowing $\mu=0$ provides relevant information, so that this CI is a little shorter than the one for unknown $\mu.$

v = sum(x^2)/10; v   # about 32.73; equals (9*s^2 + 10*a^2)/10

CI = 10*v/qchisq(c(.975,.025), 10); CI

[1] 15.97862 100.79941

sqrt(CI)

[1] 3.997327 10.039891
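The two computations above differ only in the sum of squares and the degrees of freedom, so they can be wrapped in one small function. This is a minimal sketch, not part of the original answer: the name `var_ci` and its arguments `mu` and `level` are hypothetical, chosen here for illustration.

```r
# Hypothetical helper (name and arguments are assumptions for this sketch):
# chi-squared CI for a normal variance, with the mean unknown (mu = NULL)
# or known (mu = a number).
var_ci <- function(x, mu = NULL, level = 0.95) {
  n <- length(x)
  alpha <- 1 - level
  if (is.null(mu)) {
    ss <- sum((x - mean(x))^2)   # equals (n-1) * S^2
    df <- n - 1
  } else {
    ss <- sum((x - mu)^2)        # equals n * V
    df <- n
  }
  # The upper quantile gives the lower limit, and vice versa
  ci <- ss / qchisq(c(1 - alpha/2, alpha/2), df)
  list(df = df, var = ci, sd = sqrt(ci))
}

set.seed(803)
x <- rnorm(10, 0, 5)
var_ci(x)           # mean unknown: uses Chisq(9)
var_ci(x, mu = 0)   # mean known:   uses Chisq(10)
```

With the same seed and the default random number generator, the two calls should reproduce the intervals shown above.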

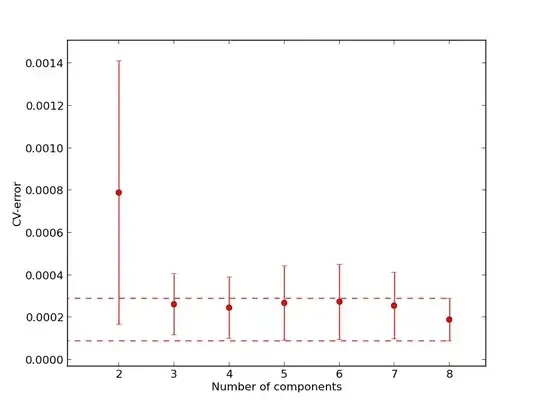

Simulations. In the upper panel of the figure, the density function for $\mathsf{Chisq}(9)$ fits the histogram for simulated values of $9S^2/\sigma^2.$ In the lower panel, the density of $\mathsf{Chisq}(10)$ fits the histogram for simulated values of $10V/\sigma^2,$ but the density of $\mathsf{Chisq}(9)$ does not. [R code follows.]

set.seed(2020)

s = replicate(10^5, sd(rnorm(10,0,5)))

q.9 = 9*s^2/25

v = replicate(10^5, mean(rnorm(10,0,5)^2))

q.10 = 10*v/25

mx = max(q.10,q.9)

par(mfrow=c(2,1))

hist(q.9, prob=T, br=30, xlim=c(0,mx), col="skyblue", main="CHISQ(9)")

curve(dchisq(x,9), add=T, lwd=2)

hist(q.10, prob=T, br=30, xlim=c(0,mx), col="skyblue", main="CHISQ(10)")

curve(dchisq(x,10), add=T, lwd=2)

curve(dchisq(x,9), add=T, col="red", lwd=3, lty="dotted")

par(mfrow=c(1,1))
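The same kind of simulation can also check the confidence intervals directly. The sketch below is an addition, not part of the original answer: the seed, the number of replications `m`, and the variable names are arbitrary choices made here. It estimates the coverage of each 95% interval for $\sigma^2$ and the average interval length when the data really come from $\mathsf{Norm}(0, 5).$

```r
set.seed(2021)                      # arbitrary seed, chosen for this sketch
n <- 10; sigma <- 5; m <- 10^4      # m kept modest so the sketch runs quickly
q9  <- qchisq(c(.975, .025), n - 1) # quantiles for the unknown-mean interval
q10 <- qchisq(c(.975, .025), n)     # quantiles for the known-mean interval

sim <- replicate(m, {
  x <- rnorm(n, 0, sigma)
  ci.unk <- (n - 1) * var(x) / q9   # mu treated as unknown
  ci.kn  <- sum(x^2) / q10          # mu = 0 treated as known
  c(cover.unk = ci.unk[1] < sigma^2 && sigma^2 < ci.unk[2],
    cover.kn  = ci.kn[1]  < sigma^2 && sigma^2 < ci.kn[2],
    len.unk   = diff(ci.unk),
    len.kn    = diff(ci.kn))
})
res <- rowMeans(sim)                # coverage proportions and mean lengths
res
```

Both coverage proportions should come out near 0.95, and the known-mean interval should be shorter on average. For a particular sample the known-mean interval need not be the shorter one, for example when $\bar X$ happens to fall far from 0.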