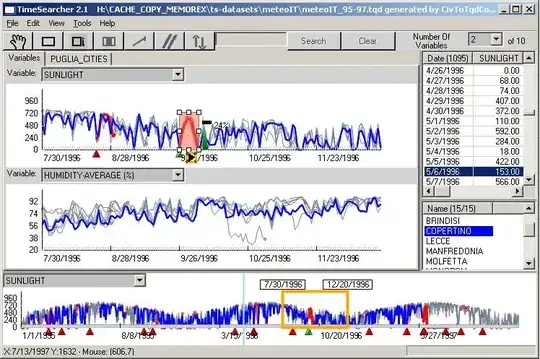

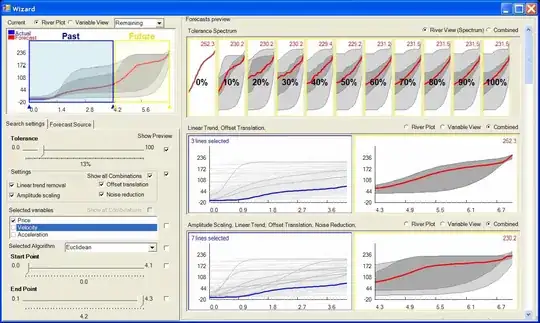

I often deal with fairly large amounts of time series data (50 to 200 million doubles, each with an associated timestamp), and I would like to visualize them dynamically, with interactive panning and zooming.
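A screen can only show a few thousand pixel columns, so one common way to keep such a view responsive is min/max decimation. You locate the visible time window by binary search, then keep only the minimum and maximum value per pixel column, so spikes stay visible. The sketch below is a minimal pure-Python illustration, assuming timestamps are sorted ascending and stored in a sequence parallel to the values. The function name `minmax_decimate` is hypothetical, not taken from any particular library.

```python
from bisect import bisect_left, bisect_right
from typing import List, Sequence, Tuple


def minmax_decimate(
    times: Sequence[float],
    values: Sequence[float],
    t_start: float,
    t_end: float,
    width_px: int,
) -> List[Tuple[float, float, float]]:
    """Reduce samples in [t_start, t_end] to at most one (t, min, max) per pixel column.

    Hypothetical helper. Assumes `times` is sorted ascending and has the same
    length as `values`.
    """
    if width_px <= 0 or t_end <= t_start:
        return []
    # Binary search for the visible slice: O(log n) instead of scanning all samples.
    lo = bisect_left(times, t_start)
    hi = bisect_right(times, t_end)
    bucket_width = (t_end - t_start) / width_px
    out: List[List[float]] = []
    current = None  # pixel column currently being filled
    for i in range(lo, hi):
        # Clamp so a sample exactly at t_end lands in the last column.
        col = min(int((times[i] - t_start) / bucket_width), width_px - 1)
        v = values[i]
        if col != current:
            # Times are sorted, so columns arrive in order; start a new bucket.
            out.append([t_start + col * bucket_width, v, v])
            current = col
        else:
            entry = out[-1]
            if v < entry[1]:
                entry[1] = v
            if v > entry[2]:
                entry[2] = v
    return [tuple(e) for e in out]
```

Each redraw then touches only the samples inside the window. At full zoom-out, however, that is still every sample, which is why precomputed multi-resolution summaries become attractive.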

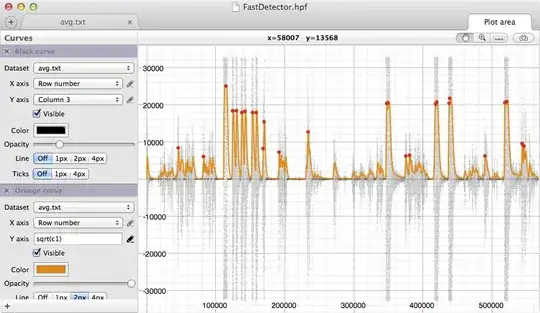

Is there existing software that does this effectively? What about libraries and data formats? Zoom-cache is one example of a library focused on large time series. It summarizes the data at several resolutions, so that the series can be viewed efficiently at different zoom levels.
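The multi-resolution idea can be sketched as a summary pyramid. The sketch is generic and is not Zoom-cache's actual API or on-disk format. Level 0 summarizes fixed-size blocks of raw samples, and each higher level merges adjacent pairs, so each level has roughly half as many entries as the one below. Keeping count, min, max and sum lets summaries be combined exactly, including the mean. The names `Summary`, `build_pyramid`, `pick_level` and the `block` parameter are hypothetical, chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Summary:
    t_start: float  # timestamp of the first raw sample covered
    t_end: float    # timestamp of the last raw sample covered
    count: int      # number of raw samples covered
    vmin: float
    vmax: float
    vsum: float     # kept so means can be combined exactly

    @property
    def mean(self) -> float:
        return self.vsum / self.count


def merge(a: Summary, b: Summary) -> Summary:
    """Combine two adjacent summaries (a precedes b in time)."""
    return Summary(a.t_start, b.t_end, a.count + b.count,
                   min(a.vmin, b.vmin), max(a.vmax, b.vmax), a.vsum + b.vsum)


def build_pyramid(times: Sequence[float], values: Sequence[float],
                  block: int = 1024) -> List[List[Summary]]:
    """Hypothetical builder: level 0 summarizes `block` raw samples per entry;
    each higher level merges pairs until a single summary remains."""
    level: List[Summary] = []
    for start in range(0, len(values), block):
        ts = times[start:start + block]
        vs = values[start:start + block]
        level.append(Summary(ts[0], ts[-1], len(vs), min(vs), max(vs), sum(vs)))
    levels = [level]
    while len(level) > 1:
        nxt = [merge(level[i], level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:  # an odd trailing entry is carried up unchanged
            nxt.append(level[-1])
        level = nxt
        levels.append(level)
    return levels


def pick_level(levels: List[List[Summary]], max_points: int) -> List[Summary]:
    """Return the finest level with at most `max_points` entries."""
    for level in levels:
        if len(level) <= max_points:
            return level
    return levels[-1]
```

The pyramid costs about twice the level-0 size in total, and a viewer can draw min/max bands from whichever level matches the current pixel width, falling back to raw samples only when zoomed far in.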

Edit: Also, if there is a better place to ask this question or look for an answer, please let me know.